This post is inspired by a comment about a recent blog post I shared. While I don't focus on MySQL vs Postgres comparisons, sometimes I share them and in my benchmarks with CPU-bound workloads, and fsync disabled (even more CPU-bound) the general result was that insert throughput was much better with Postgres than MySQL+InnoDB.

The commenter stated that Postgres and MySQL+InnoDB get similar insert rates when the MySQL binlog is disabled. I rarely test such a config because I never ran MySQL in production without the binlog enabled. Regardless, the commenter is a smart person so I decided to run a few more tests.

Some of the configurations I test here are great for performance but lousy for durability so don't take anything here as tuning advice.

tl;dr

- MySQL+InnoDB suffers more tha Postgres from updates that require index maintenance

- For MySQL with the binlog disabled, insert throughput gets close to Postgres results but not many deployments run primaries with the binlog disabled. The gap is much larger, in favor of Postgres, for updates

- When fsyncs are enabled the difference is much larger, in favor of Postgres. One reason is that MySQL must do an fsync for the binlog and InnoDB redo while Postgres only does it for the WAL. But there is more going on here and the fsync impact doesn't explain all of the performance differences.

Configurations tested

I used MySQL 8.0.39 and Postgres 17rc1 both compiled from source. The config files are here.

For MySQL I used 12 configurations:

name binlog fsync InnoDB doublewrite

bl0_sync0_dw0 off off off

bl0_sync0_dw1 off off on

bl0_sync0_dwDO off off DETECT_ONLY

bl0_sync1_dw0 off on off

bl0_sync1_dw1 off on on

bl0_sync1_dwDO off on DETECT_ONLY

bl1_sync0_dw0 on off off

bl1_sync0_dw1 on off on

bl1_sync0_dwDO on off DETECT_ONLY

bl1_sync1_dw0 on on off

bl1_sync1_dw1 on on on

bl1_sync1_dwDO on on DETECT_ONLY

For Postgres I used 12 configurations on the large server and on the small server I used 8 (skipped the 4 that use LZ4 for wal_compression:

name fsync wal_compression full_page_writes

sync0_comp0_fpi0 off off off

sync0_comp0_fpi1 off off on

sync0_comp1_fpi0 off pglz off

sync0_comp1_fpi1 off pglz on

sync0_compLZ4_fpi0 off lz4 off

sync0_compLZ4_fpi1 off lz4 on

sync1_comp0_fpi0 on off off

sync1_comp0_fpi1 on off on

sync1_comp1_fpi0 on pglz off

sync1_comp1_fpi1 on pglz on

sync1_compLZ4_fpi0 on lz4 off

sync1_compLZ4_fpi1 on lz4 on

And a few notes:

- the high durability configuration for MySQL is bl1_sync1_dw1 but that means there will be two fsyncs per commit (one for binlog, one for InnoDB redo)

- the InnoDB doublewrite options (disabled, enable,d DETECT_ONLY) don't impact performance here. They will matter on workloads that are heavier on IO.

- by fsync enabled I mean fsync (or fdatasync) is done for any logs that are enabled (Postgres WAL, InnoDB redo, MySQL binlog) and enabled for InnoDB means innodb_flush_log_at_trx_commit =1 while disabled for InnoDB means =2.

- for Postgres I use wal_sync_method=fdatasync but below I often refer to that as fsync

- for Postgres fsync enabled/disabled is the value for synchronous_commit

Hardware

I used two server types:

- small

- Beelink SER7 7840HS with Ryzen 7 7840HS, 8 AMD cores, AMD SMT disabled, 32G RAM, 1T m.2 SSD for OS, an NVMe device for the database using ext4 and Ubuntu 22.04.

- medium

- A c2d-highcpu-32 instance type on GCP (c2d high-CPU) with 32 vCPU, 64G RAM and SMT disabled so there are 16 cores. It uses Ubuntu 22.04 and storage is ext4 (data=writeback) using SW RAID 0 over 2 locally attached NVMe devices.

I haven't measured it on either the small or medium server but fdatasync latency might not be excellent on the medium server and definitely isn't excellent on the small server.

Benchmark

I used sysbench and my usage is explained here. But I only ran a few of the tests from it rather than the full 40+. The ones for which I report performance are insert, update-index and update-noindex. Note that update-index means that index maintenance is required and update-noindex means that it is not.

For the small server the test is run with 1 thread, 1 table and 50M rows. For the medium server it is run with 12 threads, 8 tables and 10M rows per table.

Results: small server

The columns list the throughput for the insert (ins), update-index (upd-ix) and update-noindex (upd-nix).

When fsync is disabled

- MySQL throughput with the binlog on is (57%, 83%, 54%) of throughput with the binlog off for (insert, update-index, update-noindex) using bl0_sync0_dw1. The result for update-index is the most interesting and that looks like the issue is the low QPS from the test without the binlog.

- The various choices for the InnoDB doublewrite buffer (off, on, DETECT_ONLY) don't have an impact here. They will have an impact on workloads that do more write-back and I am happy that InnoDB now supports DETECT_ONLY.

- MySQL with the binlog off gets (80%, 20%, 62%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_comp0_fpi1. The low value for update-index with MySQL supports the what I write above in the first bullet point.

- MySQL with the binlog on gets (46%, 17%, 33%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_comp0_fpi1. Most MySQL primaries will run with the binlog enabled.

- Using Postgres with full_page_writes=on (recommended) cuts throughput in half for the update tests and by ~30% for the insert test when wal_compression uses pglz. The impact is much less from lz4, so just use lz4.

When fsync is enabled

- It is much harder to spot CPU regressions which I why most of my tests disable it

- MySQL throughput with the binlog on is ~50% relative to the binlog off. This is expected because the bottleneck is fsync and there are 2 fsyncs/write with the binlog on vs 1 fsync/write with it off.

- The various choices for the InnoDB doublewrite buffer (off, on, DETECT_ONLY) don't have an impact here.

- MySQL with the binlog off gets ~27% of the throughput relative to Postgres and for MySQL with the binlog on that drops to ~14% relative to Postgres using bl0_sync0_dw1 vs sync0_comp0_fpi1. These differences are larger than I expect.

- The impact from full_page_writes=on for Postgres is smaller here than it is above, but again the real issue is the CPU overhead from pglz and the solution is to use lz4.

--- without fsync

- no binlog for MySQL

ins upd-ix upd-nix dbms

20413 5662 19837 my8039 bl0_sync0_dw0

20458 5881 20271 my8039 bl0_sync0_dw1

20252 5790 19742 my8039 bl0_sync0_dwDO

- binlog for MySQL

ins upd-ix upd-nix dbms

11814 4935 11051 my8039 bl1_sync0_dw0

11614 4910 10992 my8039 bl1_sync0_dw1

11649 4896 11062 my8039 bl1_sync0_dwDO

- Postgres

ins upd-ix upd-nix dbms

25590 28835 32951 pg17rc1 sync0_comp0_fpi0

25471 28840 32837 pg17rc1 sync0_comp0_fpi1

26452 29196 32702 pg17rc1 sync0_comp1_fpi0

18537 14276 16033 pg17rc1 sync0_comp1_fpi1

--- with fsync

- no binlog for MySQL (1 fsync per commit: InnoDB)

ins upd-ix upd-nix dbms

139 135 142 my8039 bl0_sync1_dw0

140 134 142 my8039 bl0_sync1_dw1

140 134 142 my8039 bl0_sync1_dwDO

- binlog for MySQL (2 fsync per commit: binlog + InnoDB)

ins upd-ix upd-nix dbms

72 72 73 my8039 bl1_sync1_dw0

73 71 71 my8039 bl1_sync1_dw1

73 68 70 my8039 bl1_sync1_dwDO

- Postgres (1 fsync per commit: WAL)

ins upd-ix upd-nix dbms

512 513 516 pg17rc1 sync1_comp0_fpi0

505 507 517 pg17rc1 sync1_comp0_fpi1

512 514 516 pg17rc1 sync1_comp1_fpi0

438 422 387 pg17rc1 sync1_comp1_fpi1

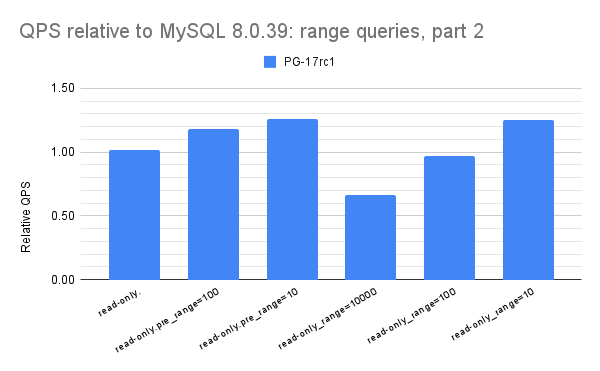

Results: medium server

The columns list the throughput for the insert (ins), update-index (upd-ix) and update-noindex (upd-nix).

When fsync is disabled

- MySQL throughput with the binlog on is (44%, 79%, 43%) of throughput with the binlog off for (insert, update-index, update-noindex) using bl0_sync0_dw1. The result for update-index is the most interesting.

- The various choices for the InnoDB doublewrite buffer (off, on, DETECT_ONLY) don't have an impact here. They will have an impact on workloads that do more write-back and I am happy that InnoDB now supports DETECT_ONLY.

- MySQL with the binlog off gets (96%, 16%, 70%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_comp0_fpi1. Once again the result for update-index with MySQL is odd.

- MySQL with the binlog on gets (42%, 13%, 30%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_comp0_fpi1. Most MySQL primaries will run with the binlog enabled.

- Using Postgres with full_page_writes=on (recommended) reduces throughput by ~5% when wal_compression uses pglz and the solution is lz4.

When fsync is enabled

- MySQL throughput with the binlog on is (34%, 58%, 41%) of throughput with the binlog off for (insert, update-index, update-noindex) using bl0_sync0_dw1. This is (mostly) expected because the bottleneck is fsync and there are 2 fsyncs/write with the binlog on vs 1 fsync/write with it off.

- The various choices for the InnoDB doublewrite buffer (off, on, DETECT_ONLY) don't have an impact here.

- MySQL with the binlog off gets (133%, 68%, 132%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_compLZ4_fpi1.

- MySQL with the binlog on gets (45%, 39%, 54%) of the throughput relative to Postgres using bl0_sync0_dw1 vs sync0_compLZ4_fpi1. Most MySQL primaries will run with the binlog enabled.

- The impact from full_page_writes=on for Postgres is large for updates (reduces throughput by ~50%) and smaller for the insert test because the insert test loads in PK order.

--- without fsync

- no binlog for MySQL

ins upd-ix upd-nix dbms

117078 17620 104215 my8039 bl0_sync0_dw0

118038 19821 103625 my8039 bl0_sync0_dw1

117373 19582 103625 my8039 bl0_sync0_dwDO

- binlog for MySQL

ins upd-ix upd-nix dbms

52602 15201 44727 my8039 bl1_sync0_dw0

52193 15736 44372 my8039 bl1_sync0_dw1

52456 16293 45195 my8039 bl1_sync0_dwDO

- Postgres

ins upd-ix upd-nix dbms

127864 138330 154650 pg17rc1 sync0_comp0_fpi0

123512 123825 148386 pg17rc1 sync0_comp0_fpi1

127456 139634 155127 pg17rc1 sync0_comp1_fpi0

119504 117795 136421 pg17rc1 sync0_comp1_fpi1

127885 139301 154869 pg17rc1 sync0_compLZ4_fpi0

125192 125532 149092 pg17rc1 sync0_compLZ4_fpi1

--- with fsync

- no binlog for MySQL (1 fsync per commit for InnoDB redo)

ins upd-ix upd-nix dbms

20602 6824 15804 my8039 bl0_sync1_dw0

20350 6640 15095 my8039 bl0_sync1_dw1

20677 6836 16134 my8039 bl0_sync1_dwDO

- binlog for MySQL (2 fsync per commit, one for binlog, one for InnoDB redo)

ins upd-ix upd-nix dbms

6972 3863 6238 my8039 bl1_sync1_dw0

6963 3829 6183 my8039 bl1_sync1_dw1

6922 3904 6266 my8039 bl1_sync1_dwDO

- Postgres (1 fsync per commit, for WAL)

ins upd-ix upd-nix dbms

19754 24293 26250 pg17rc1 sync1_comp0_fpi0

15089 9507 10963 pg17rc1 sync1_comp0_fpi1

19732 24335 26280 pg17rc1 sync1_comp1_fpi0

13031 8571 9204 pg17rc1 sync1_comp1_fpi1

19698 24629 26163 pg17rc1 sync1_compLZ4_fpi0

15309 9712 11420 pg17rc1 sync1_compLZ4_fpi1