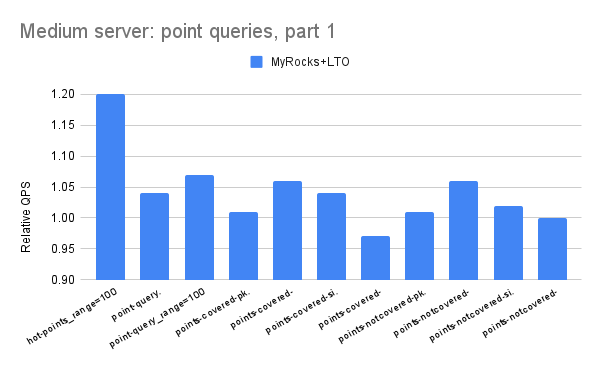

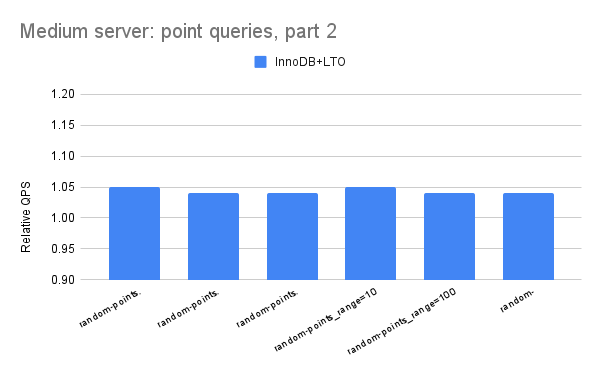

This post has results that show the benefit of link-time optimization (LTO) for MySQL. LTO is enabled via the CMake option -DWITH_LTO=ON.

tl;dr

- Link-time optimization typically improves QPS by ~5%

- On the small servers (PN53, SER4) the benefit from link-time optimization was larger for InnoDB than for MyRocks. On the medium server (C2D) the benefit was similar for MyRocks and InnoDB.

The tests used three servers:

- SER4 - Beelink SER 4700u (see here) with 8 cores and a Ryzen 7 4700u CPU

- PN53 - ASUS ExpertCenter PN53 (see here) with 8 cores and an AMD Ryzen 7 7735HS CPU

- C2D - a c2d-highcpu-32 instance type on GCP (c2d high-CPU) with 32 vCPU and SMT disabled so there are 16 cores

The benchmark was configured as follows:

- SER4, PN53 - 1 thread, 1 table and 30M rows

- C2D - 12 threads, 8 tables and 10M rows per table

- each microbenchmark runs for 300 seconds if read-only and 600 seconds otherwise

- prepared statements were enabled

All of the charts show relative throughput on the y-axis, defined as (QPS with LTO) / (QPS without LTO), where "with LTO" means link-time optimization was enabled. The y-axis doesn't start at 0, to improve readability. When the relative throughput is > 1, the build with link-time optimization is faster.
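The relative throughput calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name, the microbenchmark labels and the QPS values are hypothetical placeholders, not measurements from this post.

```python
def relative_throughput(qps_with_lto: float, qps_without_lto: float) -> float:
    """Return (QPS with LTO) / (QPS without LTO)."""
    if qps_without_lto <= 0:
        raise ValueError("baseline QPS must be positive")
    return qps_with_lto / qps_without_lto


# Placeholder numbers for illustration only, not results from this post.
# Each entry maps a hypothetical microbenchmark name to
# (QPS with LTO, QPS without LTO).
example_qps = {
    "hypothetical-point-query": (10500.0, 10000.0),
    "hypothetical-range-query": (4900.0, 5000.0),
}

for name, (with_lto, without_lto) in example_qps.items():
    rt = relative_throughput(with_lto, without_lto)
    # A value above 1 means the build with LTO had higher QPS.
    verdict = "faster with LTO" if rt > 1 else "not faster with LTO"
    print(f"{name}: {rt:.2f} ({verdict})")
```

With this definition, a ~5% gain in QPS corresponds to a relative throughput of about 1.05.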

[Chart: point queries, part 1]

[Chart: point queries, part 2]

[Chart: range queries, part 1]

[Chart: range queries, part 2]

[Chart: writes]

[Chart: point queries, part 1]

[Chart: point queries, part 2]

[Chart: range queries, part 1]

[Chart: range queries, part 2]

[Chart: writes]