This is a response to some of the feedback I received from the Postgres community about my recent benchmark results for vector indexes using MariaDB and Postgres (pgvector). The work here isn't sponsored and required ~2 weeks of server time and a few more hours of my time (yes, I contribute to the PG community).

tl;dr

- index create is ~4X faster when using ~4 parallel workers. I hope that parallel DDL comes to more open source DBMS.

- parallel query does not help pgvector

- increasing work_mem does not help pgvector

- pgvector gets less QPS than MariaDB because it uses more CPU to compute the distance metric

The feedback I received includes:

- benchmarks are useless, my production workload is the only relevant workload

- I disagree, but I don't expect consensus. This approach also means you are unlikely to do comparative benchmarks: it costs too much to port your workload to another DBMS just for the sake of a benchmark, and because only your team can run it, you miss out on expertise from elsewhere.

- this work was sponsored so I don't trust it

- There isn't much I can do about that.

- this is benchmarketing!

- There is a marketing element to this work. Perhaps I was not the first to define benchmarketing, but by my definition a benchmark report is not benchmarketing when the results are explained. I have been transparent about how I ran the benchmark and shared some performance debugging results here. MariaDB gets more QPS than pgvector because pgvector uses more CPU to compute the distance metric.

- you should try another Postgres extension for vector indexes

- I hope to do that eventually. But pgvector is a great choice here because it implements HNSW and MariaDB implements modified HNSW. Regardless, time is finite, the number of servers I have is finite, and my work here (both sponsored and volunteer) competes with my need to finish my taxes, play video games, sleep, etc.

- this result is bogus because I didn't like some config setting you used

- Claims like this are cheap to make and expensive to debunk. Sometimes the suggested changes make a big difference but that has been rare in my experience. Most of the time the changes at best make a small difference.

- this result is bogus because you used Docker

- I am not a Docker fan, but I only used Docker for Qdrant. I am not a fan because I suspect it is overused and I prefer benchmark setups that don't require it. While the ann-benchmarks page states that Docker is used for all algorithms, it is not used for MariaDB or Postgres in my fork. And it is trivial, but time consuming, to update most of ann-benchmarks to not use Docker.

Claims about the config that I used include:

- huge pages were disabled

- Yes, they were. Enabling huge pages would have helped both MariaDB and Postgres, but I prefer not to use them because the feature is painful (unless you like OOM). Posts from me on this are here and here.

- track_io_timing was enabled and is expensive

- There was no IO when queries ran because the database was cached, so this is irrelevant for the query results. There was some write IO when the index was created. While I won't repeat tests with track_io_timing disabled, I am skeptical that the latency of two calls to gettimeofday() per IO is significant on modern Linux.

- autovacuum_vacuum_cost_limit is too high

- I set it to 4000 because I often run write-intensive benchmarks on servers with large IOPS capacity. This is the first time anyone has suggested it was too high, and experts have reviewed my config files. I wish that Postgres vacuum didn't require so much tuning -- and all of that tuning means that someone can always claim your tuning is wrong. Regardless, the benchmark workload is load, create index, then query, and I only time the create index and query steps, so vacuum has little impact on this benchmark. I have also used hundreds of server hours to search for good Postgres configs.

- parallel operations were disabled

- Parallel query isn't needed for the workloads I normally run but parallel index create is nice to have. I disable parallel index create for Postgres because my primary focus is efficiency -- how much CPU and IO is consumed per operation. But I haven't been clear on that in my blog posts. Regardless, below I show there is a big impact from parallel index create and no impact from parallel query.

- work_mem is too low

- I have been using the default, which is fine for the workloads I normally run (sysbench, insert benchmark). Below I show there is no impact from increasing it to 8M.
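On the track_io_timing point, the argument is arithmetic: two clock reads per IO compared with the latency of the IO itself. This Python sketch measures the cost of a clock read with time.perf_counter_ns() as a stand-in for gettimeofday(), so it overstates the cost (loop and interpreter overhead are included). The 50 microsecond IO latency is an assumed figure for an NVMe IO, not something measured in this benchmark.

```python
import time

# Assumed latency of one NVMe IO in nanoseconds (an assumption, not measured here).
ASSUMED_IO_NS = 50_000


def clock_read_cost_ns(iterations: int = 200_000) -> float:
    """Average cost of one clock read, including Python loop overhead (an upper bound)."""
    start = time.perf_counter_ns()
    for _ in range(iterations):
        time.perf_counter_ns()
    end = time.perf_counter_ns()
    return (end - start) / iterations


def timing_overhead_fraction(io_ns: float = ASSUMED_IO_NS) -> float:
    """Fraction of one IO's latency spent on two clock reads (one before, one after)."""
    return 2 * clock_read_cost_ns() / io_ns


if __name__ == "__main__":
    print(f"one clock read: ~{clock_read_cost_ns():.0f} ns")
    print(f"overhead per IO: ~{timing_overhead_fraction():.2%}")
```

Even with Python's overhead, the result is typically a small fraction of one IO; a C caller would pay less.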

Benchmark

This post has much more detail about my approach in general. I ran the benchmark for 1 session. I use ann-benchmarks via my fork of a fork of a fork at this commit. I used the dbpedia-openai dataset with 1M rows. It uses angular (cosine) for the distance metric. The ann-benchmarks config file is here for Postgres, and in this case I only have results for pgvector with halfvec (float16).
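To show where the CPU goes at query time, here is a minimal Python sketch of cosine distance over vectors stored as float16. It is not pgvector's C implementation; it only illustrates that halfvec stores 2 bytes per dimension, that in this sketch the values are widened to Python floats before the arithmetic, and that every distance computation touches every dimension three times (dot product and two norms). The function names are hypothetical.

```python
import math
import struct


def to_halfvec(values: list[float]) -> bytes:
    """Pack floats as IEEE 754 half precision (2 bytes per dimension)."""
    return struct.pack(f"<{len(values)}e", *values)


def from_halfvec(blob: bytes) -> list[float]:
    """Unpack half-precision bytes back into Python floats."""
    return list(struct.unpack(f"<{len(blob) // 2}e", blob))


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cos(a, b); assumes both vectors are non-zero."""
    dot = norm_a = norm_b = 0.0
    for x, y in zip(a, b):  # one pass, three multiply-adds per dimension
        dot += x * y
        norm_a += x * x
        norm_b += y * y
    return 1.0 - dot / math.sqrt(norm_a * norm_b)


if __name__ == "__main__":
    q = from_halfvec(to_halfvec([0.1, 0.2, 0.3]))
    v = from_halfvec(to_halfvec([0.3, 0.2, 0.1]))
    print(cosine_distance(q, v))
```

An HNSW search evaluates this function once per visited candidate, which is why larger ef_search values cost more CPU per query.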

I used a large server (Hetzner ax162-s) with 48 cores, 128G of RAM, Ubuntu 22.04 and HW RAID 10 using 2 NVMe devices. I tested three configurations for Postgres and all of the settings are here:

- def

- def stands for default and is the config I used in all of my previous blog posts. Thus, it is the config for which I received feedback.

- wm8

- wm8 stands for work_mem increased to 8MB. The default (used by def) is 4MB.

- pq4

- pq4 stands for Parallel Query with ~4 workers. Here I changed a few settings from def to support that.
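For readers less familiar with the Postgres knobs involved, this Python sketch records the three configs as overrides on top of def and renders them as postgresql.conf lines. Only work_mem=8MB for wm8 comes from this post; the pq4 values are a hypothetical guess at the usual settings (max_parallel_maintenance_workers governs parallel CREATE INDEX, max_parallel_workers_per_gather governs parallel query), so the linked config files remain the real source.

```python
# Hypothetical overrides relative to def. Only wm8's work_mem comes from the text;
# the pq4 values are a guess, not the actual settings in the linked config files.
OVERRIDES: dict[str, dict[str, str]] = {
    "def": {},
    "wm8": {"work_mem": "8MB"},
    "pq4": {
        "max_parallel_maintenance_workers": "4",  # workers for parallel CREATE INDEX
        "max_parallel_workers_per_gather": "4",   # workers per Gather node in a query
    },
}


def conf_lines(config: str) -> list[str]:
    """Render one config's overrides as postgresql.conf lines, sorted by name."""
    return [f"{name} = {value}" for name, value in sorted(OVERRIDES[config].items())]


if __name__ == "__main__":
    for name in OVERRIDES:
        print(f"# {name}")
        print("\n".join(conf_lines(name)) or "# (no changes from def)")
```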

Output from the benchmark is here.

The command line to run the benchmark using my helper scripts is:

bash rall.batch.sh v1 dbpedia-openai-1000k-angular c32r128

Results: QPS vs recall

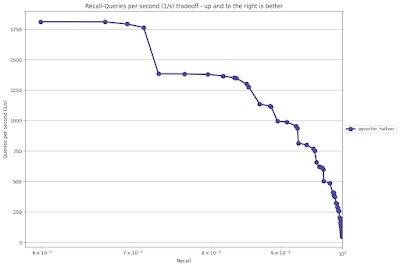

These charts show the best QPS for a given recall. The graphs appear to be the same, but differences are harder to see as recall approaches 1.0, so the next section has a table with numbers.

From these graphs, QPS doesn't improve with the wm8 or pq4 configs.

The chart for the def config, which is what I used in previous blog posts.

The chart for the wm8 config with work_mem=8M

The chart for the pq4 config that uses ~4 parallel workers

Results: best QPS for a given recall

Many benchmark results are marketed via peak performance (max throughput or min response time), but in practice these are usually constrained optimization problems -- determine peak performance that satisfies some SLA. And the SLA might be response time or efficiency (cost).

With ann-benchmarks the constraint is recall. Below I share the best QPS for a given recall target along with the configuration parameters (M, ef_construction, ef_search) at which that occurs.
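The selection rule is simple enough to sketch in Python: keep only the runs that meet the recall target, then take the one with the maximum QPS. The Result type and best_qps function are hypothetical names, not part of ann-benchmarks, and the sample rows are the first row of each table below, which is only a subset of all runs.

```python
from typing import NamedTuple, Optional


class Result(NamedTuple):
    recall: float
    qps: float
    config: str


def best_qps(results: list[Result], min_recall: float) -> Optional[Result]:
    """Highest-QPS result among those with recall >= min_recall, or None if none qualify."""
    eligible = [r for r in results if r.recall >= min_recall]
    return max(eligible, key=lambda r: r.qps, default=None)


# Sample input: the first row of each "Best QPS" table below.
ROWS = [
    Result(0.990, 317.9, "m=48, ef_cons=256, ef_search=40"),
    Result(0.983, 412.0, "m=32, ef_cons=192, ef_search=40"),
    Result(0.978, 487.3, "m=32, ef_cons=256, ef_search=30"),
    Result(0.961, 621.1, "m=32, ef_cons=192, ef_search=20"),
    Result(0.951, 768.7, "m=16, ef_cons=192, ef_search=30"),
]

if __name__ == "__main__":
    for target in (1.0, 0.99, 0.98, 0.97, 0.96, 0.95):
        print(target, best_qps(ROWS, target))
```

Returning None for an unmet target mirrors the recall >= 1.000 case below, where no run qualifies.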


Summary

- pgvector does not get more QPS with parallel query

- pgvector does not get more QPS with a larger value for work_mem

Legend:

- recall, QPS - best QPS at that recall

- isecs - time to create the index in seconds

- m= - value for M when creating the index

- ef_cons= - value for ef_construction when creating the index

- ef_search= - value for ef_search when running queries
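To connect the legend to what Postgres runs, this Python sketch builds the pgvector statements that the three parameters map to: m and ef_construction are index options fixed at CREATE INDEX time, while hnsw.ef_search is a setting that can change per session without rebuilding the index. The table name items, the columns embedding and id, and k=10 are hypothetical, and the SQL that ann-benchmarks actually issues may differ (for example, it may index a cast expression rather than a halfvec column).

```python
def create_index_sql(m: int, ef_construction: int,
                     table: str = "items", column: str = "embedding") -> str:
    """CREATE INDEX for a pgvector HNSW index on a halfvec column using cosine distance."""
    return (f"CREATE INDEX ON {table} USING hnsw ({column} halfvec_cosine_ops) "
            f"WITH (m = {m}, ef_construction = {ef_construction});")


def query_sql(ef_search: int, k: int = 10,
              table: str = "items", column: str = "embedding") -> list[str]:
    """Session setting plus a top-k nearest-neighbor query; <=> is cosine distance."""
    return [
        f"SET hnsw.ef_search = {ef_search};",
        f"SELECT id FROM {table} ORDER BY {column} <=> $1 LIMIT {k};",
    ]


if __name__ == "__main__":
    print(create_index_sql(48, 256))
    print("\n".join(query_sql(40)))
```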

Best QPS with recall >= 1.000

No algorithms achieved this for the (M, ef_construction) settings I used.

Best QPS with recall >= 0.99

| recall | QPS | isecs | config |
|---|---|---|---|
| 0.990 | 317.9 | 7302 | PGVector_halfvec(m=48, ef_cons=256, ef_search=40) |
| 0.990 | 320.4 | 7285 | PGVector_halfvec(m=48, ef_cons=256, ef_search=40) |
| 0.990 | 316.9 | 1565 | PGVector_halfvec(m=48, ef_cons=256, ef_search=40) |

Best QPS with recall >= 0.98

| recall | QPS | isecs | config |
|---|---|---|---|
| 0.983 | 412.0 | 4120 | PGVector_halfvec(m=32, ef_cons=192, ef_search=40) |
| 0.984 | 415.6 | 4168 | PGVector_halfvec(m=32, ef_cons=192, ef_search=40) |
| 0.984 | 411.4 | 903 | PGVector_halfvec(m=32, ef_cons=192, ef_search=40) |

Best QPS with recall >= 0.97

| recall | QPS | isecs | config |
|---|---|---|---|
| 0.978 | 487.3 | 5070 | PGVector_halfvec(m=32, ef_cons=256, ef_search=30) |
| 0.970 | 508.3 | 2495 | PGVector_halfvec(m=32, ef_cons=96, ef_search=30) |
| 0.970 | 508.4 | 2495 | PGVector_halfvec(m=32, ef_cons=96, ef_search=30) |

Best QPS with recall >= 0.96

| recall | QPS | isecs | config |
|---|---|---|---|
| 0.961 | 621.1 | 4120 | PGVector_halfvec(m=32, ef_cons=192, ef_search=20) |
| 0.962 | 632.3 | 4168 | PGVector_halfvec(m=32, ef_cons=192, ef_search=20) |
| 0.962 | 622.0 | 903 | PGVector_halfvec(m=32, ef_cons=192, ef_search=20) |

Best QPS with recall >= 0.95

| recall | QPS | isecs | config |
|---|---|---|---|
| 0.951 | 768.7 | 2436 | PGVector_halfvec(m=16, ef_cons=192, ef_search=30) |
| 0.952 | 770.2 | 2442 | PGVector_halfvec(m=16, ef_cons=192, ef_search=30) |
| 0.953 | 753.0 | 547 | PGVector_halfvec(m=16, ef_cons=192, ef_search=30) |

Results: create index

The database configs for Postgres are shared above. Parallel index create is disabled in the def config because my focus has not been on DDL performance, but it works great for Postgres with pgvector. The summary is:

- index create is ~4X faster when using ~4 parallel workers

- index sizes are similar with and without parallel create index

Sizes: table is ~8G and index is ~4G
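A back-of-envelope estimate helps explain those sizes. This Python sketch assumes 1536-dimensional vectors and a float32 table column, both assumptions not stated in this post, and counts only the raw vector payload; page and tuple overhead, and the HNSW neighbor lists for the index, would account for the rest.

```python
ROWS = 1_000_000
DIMS = 1536  # assumption: dimension of the OpenAI embeddings in dbpedia-openai


def raw_vector_bytes(rows: int, dims: int, bytes_per_dim: int) -> int:
    """Bytes for the vector payload only, ignoring page, tuple and graph overhead."""
    return rows * dims * bytes_per_dim


float32_gib = raw_vector_bytes(ROWS, DIMS, 4) / 2**30  # assumed float32 table column
float16_gib = raw_vector_bytes(ROWS, DIMS, 2) / 2**30  # halfvec stores float16

if __name__ == "__main__":
    print(f"table payload ~{float32_gib:.2f} GiB, halfvec index payload ~{float16_gib:.2f} GiB")
```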

Legend

- M - value for M when creating the index

- cons - value for ef_construction when creating the index

- secs - time in seconds to create the index

- size(MB) - index size in MB

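| M | cons | def secs | wm8 secs | pq4 secs |
|---|---|---|---|---|
| 8 | 32 | 412 | 405 | 108 |
| 16 | 32 | 649 | 654 | 155 |
| 8 | 64 | 624 | 627 | 154 |
| 16 | 64 | 1029 | 1029 | 237 |
| 32 | 64 | 1901 | 1895 | 412 |
| 8 | 96 | 834 | 835 | 194 |
| 16 | 96 | 1387 | 1393 | 312 |
| 32 | 96 | 2497 | 2495 | 541 |
| 48 | 96 | 3731 | 3726 | 798 |
| 8 | 192 | 1409 | 1410 | 316 |
| 16 | 192 | 2436 | 2442 | 547 |
| 32 | 192 | 4120 | 4168 | 903 |
| 48 | 192 | 6117 | 6119 | 1309 |
| 64 | 192 | 7838 | 7815 | 1662 |
| 8 | 256 | 1767 | 1752 | 400 |
| 16 | 256 | 3146 | 3148 | 690 |
| 32 | 256 | 5070 | 5083 | 1102 |
| 48 | 256 | 7302 | 7285 | 1565 |
| 64 | 256 | 9959 | 9946 | 2117 |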
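The ~4X claim can be checked directly from the table. This Python sketch copies the def and pq4 columns and computes def/pq4 for each (M, ef_construction) pair; on these numbers the ratio ranges from roughly 3.8X for the smallest index to roughly 4.7X for the larger ones.

```python
# Create index seconds from the table above, keyed by (M, ef_construction);
# each value is (def secs, pq4 secs).
SECS: dict[tuple[int, int], tuple[int, int]] = {
    (8, 32): (412, 108), (16, 32): (649, 155),
    (8, 64): (624, 154), (16, 64): (1029, 237), (32, 64): (1901, 412),
    (8, 96): (834, 194), (16, 96): (1387, 312), (32, 96): (2497, 541),
    (48, 96): (3731, 798),
    (8, 192): (1409, 316), (16, 192): (2436, 547), (32, 192): (4120, 903),
    (48, 192): (6117, 1309), (64, 192): (7838, 1662),
    (8, 256): (1767, 400), (16, 256): (3146, 690), (32, 256): (5070, 1102),
    (48, 256): (7302, 1565), (64, 256): (9959, 2117),
}


def speedups() -> dict[tuple[int, int], float]:
    """def secs / pq4 secs for each (M, ef_construction) pair."""
    return {key: d / p for key, (d, p) in SECS.items()}


if __name__ == "__main__":
    ratios = speedups()
    for (m, cons), r in ratios.items():
        print(f"M={m:<3} cons={cons:<4} {r:.2f}X")
    print(f"min {min(ratios.values()):.2f}X, max {max(ratios.values()):.2f}X")
```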
