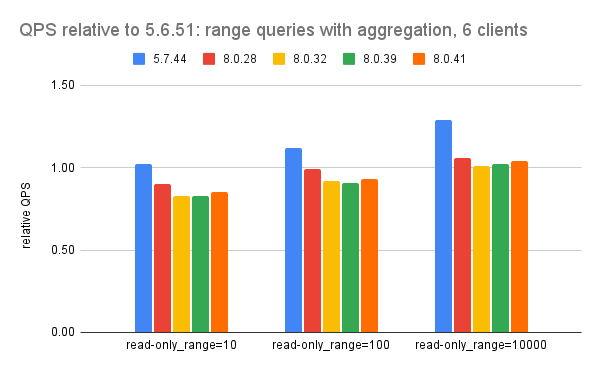

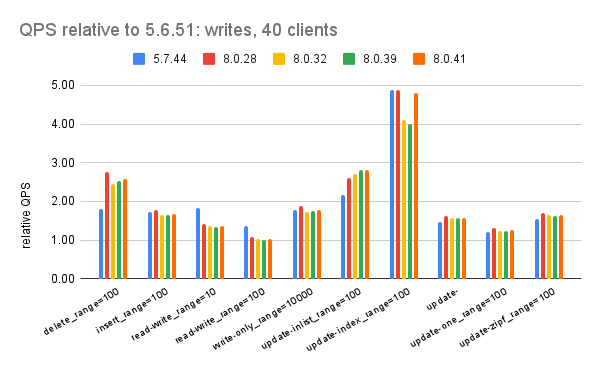

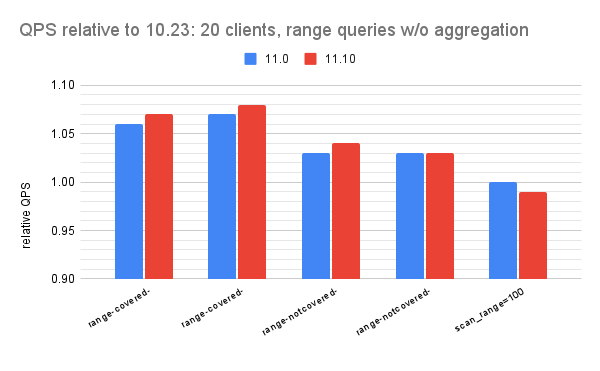

I recently shared two posts (here and here) with results for sysbench on a large server using Postgres versions 10 through 17. In general there were several large improvements over time, but one small regression that arrived in Postgres 11.x. This blog post provides more details on the problem using results from Postgres 10.23, 11.22 and 17.4.

The regression occurs starting in Postgres 11.22 because Postgres is more likely to use bitmap index scan starting in 11.x. I have yet to learn why or how to prevent that.

Index scan vs bitmap index scan

Experts gave me great advice based on a few flamegraphs that I shared. It looked like Postgres started to use bitmap index scan more often starting in Postgres 11. Upstream sysbench does collect query plans for the SQL that it uses, so I modified my fork to do that.

While the explain output helps, it would help even more were there a feature in Postgres to provide optimizer traces, similar to what MySQL has, to understand why some query plans are selected and others are rejected. Another feature request is to improve the official Postgres docs to provide more detail on 1) the difference between index scan and bitmap index scan and 2) the difference between lossy and non-lossy bitmap index scans (AFAIK, one needs recheck).

The problem microbenchmark

The microbenchmarks in question do a range scan with aggregation and have a name like read-only_range=$X where X has been 10, 100 and 1000 but here I use X in 10, 100, 1000, 2000, 4000, 8000, 16000 and 32000. The value of X is the length of the range scan. These are run via oltp_read_only.lua and use these SQL statements.

Build, configuration, hardware, benchmark

These are described in my previous post. But a few things have changed for this report

- I only tested Postgres versions 10.23, 11.22 and 17.4

- I ran the read-only_range=$X microbenchmark for X in 10, 100, 1000, 2000, 4000, 8000, 16000 and 32000. Previously I ran it for X in 10, 100, 10000

- I added new tests that each run only one of the SQL statements used by oltp_read_only.lua. All of the Lua scripts are here.

What didn't change is that the tests are run with 8 tables and 10M rows/table, read-heavy microbenchmarks run for 180 seconds and write-heavy run for 300 seconds.

The benchmark was repeated using configurations with work_mem set to 1MB, 2MB, 4MB, 8MB, 16MB and 32MB. The configuration files

are here.

Query plans

This table shows that plans with bitmap index are far more frequent starting in Postgres 11. The numbers are similar if I count the number of occurrences of recheck.

The following show the number of occurrences of "bitmap index" in explain output for the read-only.range=X microbenchmarks. For read-only.range=1000 and read-only.range=2000 the counts are always 0. Note that the regressions are there at range=8000 and mostly don't occur for other values of range=X. It is interesting that 10.23 is least likely to use plans with bitmap index while 17.4 is most likely.

For read-only.range=4000

dbms 1 2 4 8 16 32 -> work_mem(MB)

10.23 0 0 0 0 0 0

11.22 0 0 0 0 0 0

17.4 723 1020 635 935 1059 1008

For read-only.range=8000

dbms 1 2 4 8 16 32 -> work_mem(MB)

10.23 40 0 1 0 0 166

11.22 1133 1237 1044 1252 1280 1231

17.4 1280 1278 1279 1280 1280 1280

For read-only.range=16000

dbms 1 2 4 8 16 32 -> work_mem(MB)

10.23 1279 1279 1279 1278 1278 1278

11.22 1280 1280 1279 1279 1280 1278

17.4 1279 1280 1279 1279 1279 1279

Example plans for distinct_range

The read-only.range=8000 test uses 4 types of SQL aggregation queries - distinct_range, order_range, simple_range and sum_range. This section has example plans for distinct_range at work_mem=16M.

Below I show that the regressions are largest for sum_range and simple_range and much smaller for distinct_range and order_range -- while plans for all of these are switching from index scan to bitmap index scan.

Postgres 10.23

explain SELECT DISTINCT c FROM sbtest1 WHERE id BETWEEN 4087499 AND 4095498 ORDER BY c

Unique (cost=28211.06..28250.59 rows=7907 width=121)

-> Sort (cost=28211.06..28230.82 rows=7907 width=121)

Sort Key: c

-> Index Scan using sbtest1_pkey on sbtest1 (cost=0.43..27699.12 rows=7907 width=121)

Index Cond: ((id >= 4087499) AND (id <= 4095498))

Postgres 11.22

explain SELECT DISTINCT c FROM sbtest1 WHERE id BETWEEN 1359956 AND 1367955 ORDER BY c

Unique (cost=29781.72..29823.36 rows=8327 width=121)

-> Sort (cost=29781.72..29802.54 rows=8327 width=121)

Sort Key: c

-> Bitmap Heap Scan on sbtest1 (cost=269.79..29239.49 rows=8327 width=121)

Recheck Cond: ((id >= 1359956) AND (id <= 1367955))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..267.70 rows=8327 width=0)

Index Cond: ((id >= 1359956) AND (id <= 1367955))

Postgres 17.4

explain SELECT DISTINCT c FROM sbtest1 WHERE id BETWEEN 8646394 AND 8654393 ORDER BY c

Unique (cost=31903.86..31949.03 rows=9033 width=121)

-> Sort (cost=31903.86..31926.45 rows=9033 width=121)

Sort Key: c

-> Bitmap Heap Scan on sbtest1 (cost=193.02..31310.35 rows=9033 width=121)

Recheck Cond: ((id >= 8646394) AND (id <= 8654393))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..190.76 rows=9033 width=0)

Index Cond: ((id >= 8646394) AND (id <= 8654393))

Example plans for order_range

The read-only.range=8000 test uses 4 types of SQL aggregation queries - distinct_range, order_range, simple_range and sum_range. This section has example plans for order_range at work_mem=16M.

Below I show that the regressions are largest for sum_range and simple_range and much smaller for distinct_range and order_range -- while plans for all of these are switching from index scan to bitmap index scan.

Postgres 10.23

table 1 : explain SELECT c FROM sbtest1 WHERE id BETWEEN 9271446 AND 9279445 ORDER BY c

Sort (cost=26775.57..26794.32 rows=7501 width=121)

Sort Key: c

-> Index Scan using sbtest1_pkey on sbtest1 (cost=0.43..26292.77 rows=7501 width=121)

Index Cond: ((id >= 9271446) AND (id <= 9279445))

Postgres 11.22

explain SELECT c FROM sbtest1 WHERE id BETWEEN 9375999 AND 9383998 ORDER BY c

Sort (cost=30444.65..30465.97 rows=8529 width=121)

Sort Key: c

-> Bitmap Heap Scan on sbtest1 (cost=275.86..29887.79 rows=8529 width=121)

Recheck Cond: ((id >= 9375999) AND (id <= 9383998))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..273.73 rows=8529 width=0)

Index Cond: ((id >= 9375999) AND (id <= 9383998))

Postgres 17.4

explain SELECT c FROM sbtest1 WHERE id BETWEEN 8530681 AND 8538680 ORDER BY c

Sort (cost=27548.18..27567.43 rows=7701 width=121)

Sort Key: c

-> Bitmap Heap Scan on sbtest1 (cost=167.37..27051.05 rows=7701 width=121)

Recheck Cond: ((id >= 8530681) AND (id <= 8538680))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..165.44 rows=7701 width=0)

Index Cond: ((id >= 8530681) AND (id <= 8538680))

Example plans for simple_range

The read-only.range=8000 test uses 4 types of SQL aggregation queries - distinct_range, order_range, simple_range and sum_range. This section has example plans for simple_range at work_mem=16M.

Below I show that the regressions are largest for sum_range and simple_range and much smaller for distinct_range and order_range -- while plans for all of these are switching from index scan to bitmap index scan.

Postgres 10.23

explain SELECT c FROM sbtest1 WHERE id BETWEEN 7681343 AND 7689342

Index Scan using sbtest1_pkey on sbtest1 (cost=0.43..28016.13 rows=7999 width=121)

Index Cond: ((id >= 7681343) AND (id <= 7689342))

Postgres 11.22

explain SELECT c FROM sbtest1 WHERE id BETWEEN 1406209 AND 1414208

Bitmap Heap Scan on sbtest1 (cost=250.91..27065.17 rows=7656 width=121)

Recheck Cond: ((id >= 1406209) AND (id <= 1414208))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..249.00 rows=7656 width=0)

Index Cond: ((id >= 1406209) AND (id <= 1414208))

Postgres 17.4

explain SELECT c FROM sbtest1 WHERE id BETWEEN 5487727 AND 5495726

Bitmap Heap Scan on sbtest1 (cost=170.27..27961.99 rows=7984 width=121)

Recheck Cond: ((id >= 5487727) AND (id <= 5495726))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..168.28 rows=7984 width=0)

Index Cond: ((id >= 5487727) AND (id <= 5495726))

Example plans for sum_range

The read-only.range=8000 test uses 4 types of SQL aggregation queries - distinct_range, order_range, simple_range and sum_range. This section has example plans for sum_range at work_mem=16M.

Below I show that the regressions are largest for sum_range and simple_range and much smaller for distinct_range and order_range -- while plans for all of these are switching from index scan to bitmap index scan.

Postgres 10.23

explain SELECT SUM(k) FROM sbtest1 WHERE id BETWEEN 1117274 AND 1125273

Aggregate (cost=27542.60..27542.61 rows=1 width=8)

-> Index Scan using sbtest1_pkey on sbtest1 (cost=0.43..27522.96 rows=7856 width=4)

Index Cond: ((id >= 1117274) AND (id <= 1125273))

Postgres 11.22

explain SELECT SUM(k) FROM sbtest1 WHERE id BETWEEN 2318912 AND 2326911

Aggregate (cost=28030.44..28030.45 rows=1 width=8)

-> Bitmap Heap Scan on sbtest1 (cost=257.90..28010.57 rows=7948 width=4)

Recheck Cond: ((id >= 2318912) AND (id <= 2326911))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..255.92 rows=7948 width=0)

Index Cond: ((id >= 2318912) AND (id <= 2326911))

Postgres 17.4

explain SELECT SUM(k) FROM sbtest1 WHERE id BETWEEN 1795996 AND 1803995

Aggregate (cost=27179.49..27179.50 rows=1 width=8)

-> Bitmap Heap Scan on sbtest1 (cost=167.72..27160.16 rows=7735 width=4)

Recheck Cond: ((id >= 1795996) AND (id <= 1803995))

-> Bitmap Index Scan on sbtest1_pkey (cost=0.00..165.79 rows=7735 width=0)

Index Cond: ((id >= 1795996) AND (id <= 1803995))

Results

While there are normally ~27 microbenchmarks (each microbenchmark uses sysbench to run tests from one Lua file) I added a few extra tests this time and I only share results from the read-only* microbenchmarks. Output from all tests

is here.

The numbers below are the relative QPS which is the following where $version is either 11.22 or 17.4. When the relative QPS is < 1.0, then $version is slower than Postgres 10.23.

(QPS for $version) / (QPS for Postgres 10.23)

A summary of the results is:

- the regression always occurs for the range=8000 microbenchmark and is less likely for other values for range=X. Note that range=X means the queries scan X rows.

- from the four tests that each run only one of the SQL aggregation queries used by the standard read-only microbenchmark (read-only-distinct, read-only-order, read-only-simple and read-only-sum) the regression occurs in read-only-simple and read-only-sum but not in the others and the regression is the largest in read-only-sum. The SQL statements are here for read-only-distinct, read-only-order, read-only-simple and read-only-sum.

work_mem 1 MB

1.03 1.06 read-only_range=10

1.02 1.04 read-only_range=100

1.00 1.00 read-only_range=1000

1.00 1.02 read-only_range=2000

1.00 0.99 read-only_range=4000

0.95 0.95 read-only_range=8000

0.99 1.02 read-only_range=16000

1.00 1.04 read-only_range=32000

0.98 0.97 read-only-distinct_range=8000

0.98 0.99 read-only-order_range=8000

0.94 0.90 read-only-simple_range=8000

0.89 0.83 read-only-sum_range=8000

work_mem 2 MB

11.22 17.4

1.02 1.06 read-only_range=10

1.01 1.02 read-only_range=100

1.00 0.99 read-only_range=1000

0.99 1.01 read-only_range=2000

0.98 0.96 read-only_range=4000

0.94 0.93 read-only_range=8000

0.99 1.00 read-only_range=16000

0.98 1.02 read-only_range=32000

0.97 0.96 read-only-distinct_range=8000

0.96 0.98 read-only-order_range=8000

0.92 0.89 read-only-simple_range=8000

0.86 0.80 read-only-sum_range=8000

work_mem 4 MB

11.22 17.4

1.02 1.06 read-only_range=10

1.02 1.03 read-only_range=100

1.01 1.01 read-only_range=1000

1.00 1.02 read-only_range=2000

1.00 1.00 read-only_range=4000

0.96 0.94 read-only_range=8000

1.13 1.24 read-only_range=16000

1.06 1.11 read-only_range=32000

0.98 0.97 read-only-distinct_range=8000

0.98 0.99 read-only-order_range=8000

0.94 0.90 read-only-simple_range=8000

0.89 0.82 read-only-sum_range=8000

work_mem 8 MB

11.22 17.4

1.03 1.07 read-only_range=10

1.02 1.03 read-only_range=100

1.00 0.99 read-only_range=1000

1.00 1.01 read-only_range=2000

0.99 0.97 read-only_range=4000

0.95 0.94 read-only_range=8000

0.99 1.00 read-only_range=16000

0.99 1.03 read-only_range=32000

0.97 0.96 read-only-distinct_range=8000

0.97 0.98 read-only-order_range=8000

0.92 0.89 read-only-simple_range=8000

0.87 0.81 read-only-sum_range=8000

work_mem 16 MB

11.22 17.4

1.04 1.08 read-only_range=10

1.03 1.05 read-only_range=100

1.00 1.00 read-only_range=1000

1.00 1.02 read-only_range=2000

0.99 0.97 read-only_range=4000

0.94 0.94 read-only_range=8000

0.98 1.00 read-only_range=16000

0.99 1.03 read-only_range=32000

0.97 0.96 read-only-distinct_range=8000

0.97 0.99 read-only-order_range=8000

0.92 0.90 read-only-simple_range=8000

0.86 0.80 read-only-sum_range=8000

work_mem 32 MB

11.22 17.4

1.02 1.06 read-only_range=10

1.01 1.03 read-only_range=100

1.00 1.00 read-only_range=1000

0.99 1.02 read-only_range=2000

1.00 0.97 read-only_range=4000

0.95 0.94 read-only_range=8000

0.99 1.01 read-only_range=16000

0.99 1.04 read-only_range=32000

0.97 0.96 read-only-distinct_range=8000

0.97 0.99 read-only-order_range=8000

0.94 0.90 read-only-simple_range=8000

0.89 0.83 read-only-sum_range=8000