Monday, June 29, 2015

Examining performance for MongoDB and the insert benchmark

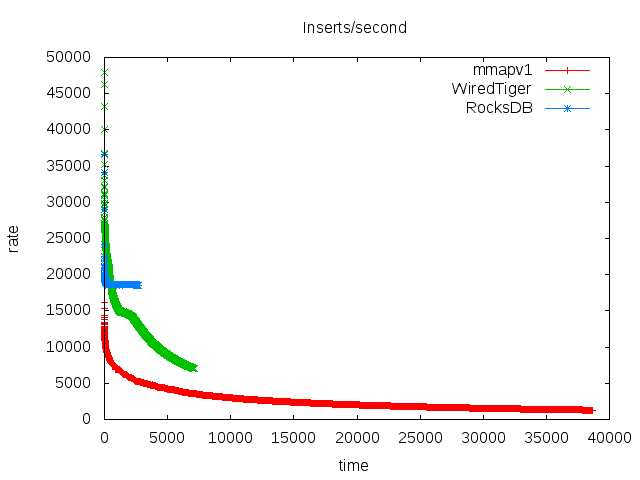

My previous post has results for the insert benchmark when the database fits in RAM. In this post I look at MongoDB performance as the database gets larger than RAM. I ran these tests while preparing for a talk and going on vacation, so I am vague on some of the configuration details. The summary is that RocksDB does much better than mmapv1 and the WiredTiger B-Tree when the database is larger than RAM because it is more IO efficient. RocksDB doesn't read index pages during non-unique secondary index maintenance. It also does fewer but larger writes rather than the many smaller/random writes required by a B-Tree. This is more of a benefit for servers that use disk.

Insert benchmark for MongoDB, memory allocators and the oplog

I used the insert benchmark to measure performance with concurrency for WiredTiger and a cached database. My goals were to understand the impact of concurrency, the oplog, the memory allocator and transaction size. The test was run for 1 to 32 concurrent connections using both tcmalloc and jemalloc with the oplog enabled and disabled and for several document sizes. My conclusions from this include:

- WiredTiger gets almost 12X more QPS from 1 to 20 concurrent clients with the oplog off and almost 9X more QPS with the oplog on. I think this is excellent for a young engine on this workload.

- Document size affects performance. The test has a configurable padding field and the insert rate at 32 connections was 247,651 documents/second with a 16-byte pad field and 140,142 documents/second with a 1024-byte pad field.

- Bundled tcmalloc gets 1% to 6% more QPS than jemalloc 3.6.0

- The insert rate drops by more than half when the oplog is enabled. I expect this to be less of an issue soon.

Configuration

Tests were repeated for 1, 2, 4, 8, 12, 16, 20, 24, 28 and 32 concurrent connections. The test server has 40 hyperthread cores and 144G of RAM. I use a special MongoDB 3.0 branch and compile binaries. This test uses one collection with 3 secondary indexes. All clients insert data into that collection. The _id column is set by the driver. The fields on which secondary indexes are created are inserted in random order. There is the potential for extra contention (data & mutexes) because only one collection is used. I set two options for WiredTiger and otherwise the defaults were used:

storage.wiredTiger.collectionConfig.blockCompressor: snappy

storage.wiredTiger.engineConfig.journalCompressor: none

Results

This lists the insert rate for all of the configurations tested. The test names are encoded to include the engine name ("wt" is WiredTiger), padding size ("sz16" is 16 bytes), memory allocator ("jem" is jemalloc & "tcm" is tcmalloc) and whether the oplog is enabled ("op0" is off, "op1" is on). Results are missing for one of the configurations at 24 concurrent clients.

| configuration / concurrency | 1 | 2 | 4 | 8 | 12 | 16 | 20 | 24 | 28 | 32 |
|---|---|---|---|---|---|---|---|---|---|---|
| wt.sz16.jem.op0 | 17793 | 32143 | 60150 | 109853 | 154377 | 186886 | 209831 | 225879 | 235803 | 234158 |
| wt.sz16.jem.op1 | 14758 | 26908 | 48186 | 69910 | 91761 | 109643 | 120413 | 129153 | 134313 | 140633 |
| wt.sz16.tcm.op0 | 18575 | 34482 | 63169 | 114752 | 160454 | 196784 | 218009 | 230588 | 244001 | 247651 |
| wt.sz16.tcm.op1 | 15756 | 28461 | 50069 | 72988 | 101615 | 109223 | 127287 | 135525 | 133446 | 137377 |
| wt.sz64.jem.op0 | 17651 | 31466 | 58192 | 107472 | 152426 | 184849 | 205226 | n/a | 227172 | 226825 |
| wt.sz64.jem.op1 | 14481 | 26565 | 47385 | 71059 | 87135 | 100684 | 110569 | 110066 | 119478 | 120851 |
| wt.sz64.tcm.op0 | 19094 | 33401 | 61426 | 111606 | 153950 | 190107 | 214670 | 227425 | 238072 | 239836 |
| wt.sz64.tcm.op1 | 15399 | 27946 | 49392 | 72892 | 85185 | 99140 | 106172 | 101163 | 112812 | 119032 |
| wt.sz256.jem.op0 | 15759 | 29196 | 55124 | 98027 | 135320 | 161829 | 181049 | 197465 | 208484 | 211100 |
| wt.sz256.jem.op1 | 13163 | 24092 | 41017 | 62878 | 71153 | 84155 | 87487 | 90366 | 91495 | 87025 |
| wt.sz256.tcm.op0 | 17299 | 30631 | 55900 | 101538 | 137529 | 165326 | 187330 | 200574 | 216888 | 217589 |
| wt.sz256.tcm.op1 | 13927 | 25822 | 43428 | 60078 | 72195 | 78141 | 76053 | 73169 | 74824 | 64537 |
| wt.sz1024.jem.op0 | 12115 | 22366 | 40793 | 71936 | 93701 | 109068 | 120175 | 129645 | 133238 | 141108 |
| wt.sz1024.jem.op1 | 9938 | 17268 | 24985 | 31944 | 34127 | 38119 | 39196 | 38747 | 36796 | 38167 |
| wt.sz1024.tcm.op0 | 12933 | 23547 | 42426 | 73295 | 94123 | 110412 | 116003 | 136287 | 139914 | 140142 |
| wt.sz1024.tcm.op1 | 10422 | 17701 | 23747 | 30276 | 29959 | 32444 | 32610 | 30839 | 31569 | 30089 |
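The scaling factors and ratios discussed in this post can be recomputed from the insert rates above. This is a minimal Python sketch, not the tooling used for the original analysis: the helper names `ratio` and `speedup` are made up for illustration, and only a few rows of the table are copied in.

```python
# Insert rates (documents/second) copied from the results table above.
CLIENTS = [1, 2, 4, 8, 12, 16, 20, 24, 28, 32]
RATES = {
    "wt.sz16.jem.op0": [17793, 32143, 60150, 109853, 154377,
                        186886, 209831, 225879, 235803, 234158],
    "wt.sz16.tcm.op0": [18575, 34482, 63169, 114752, 160454,
                        196784, 218009, 230588, 244001, 247651],
    "wt.sz16.tcm.op1": [15756, 28461, 50069, 72988, 101615,
                        109223, 127287, 135525, 133446, 137377],
    "wt.sz1024.tcm.op0": [12933, 23547, 42426, 73295, 94123,
                          110412, 116003, 136287, 139914, 140142],
    "wt.sz1024.tcm.op1": [10422, 17701, 23747, 30276, 29959,
                          32444, 32610, 30839, 31569, 30089],
}


def ratio(numerator, denominator):
    """Per-concurrency ratio of two configurations, rounded to 2 places."""
    return [round(a / b, 2)
            for a, b in zip(RATES[numerator], RATES[denominator])]


def speedup(config, clients):
    """Insert rate at `clients` connections relative to 1 connection."""
    rates = RATES[config]
    return round(rates[CLIENTS.index(clients)] / rates[0], 1)


if __name__ == "__main__":
    # Scaling from 1 to 20 clients, oplog off and on.
    print(speedup("wt.sz16.tcm.op0", 20), speedup("wt.sz16.tcm.op1", 20))
    # tcmalloc vs jemalloc: a value above 1 means tcmalloc is faster.
    print(ratio("wt.sz16.tcm.op0", "wt.sz16.jem.op0"))
    # Oplog on vs off: a value below 1 is the cost of the oplog.
    print(ratio("wt.sz1024.tcm.op1", "wt.sz1024.tcm.op0"))
```

The same two helpers cover the other padding sizes once their rows are added to `RATES`.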

Memory allocator

This shows the ratio of the insert rate with tcmalloc vs jemalloc for 4 configurations -- 16, 64, 256 and 1024 byte padding. When the ratio is greater than 1 then tcmalloc is faster. The oplog was enabled for these tests. For all results but one the insert rate was better with tcmalloc and the difference was more significant when the padding was smaller.

Oplog off

This shows the insert rate for 4 different configurations with padding of 16, 64, 256 and 1024 bytes. All configurations used tcmalloc and the oplog was disabled. The insert rate increases with concurrency and was better with a smaller padding size.

Oplog on

This shows the insert rate for 4 different configurations with padding of 16, 64, 256 and 1024 bytes. All configurations used tcmalloc and the oplog was enabled. The insert rate usually increases with concurrency and was better with a smaller padding size. For padding sizes of 256 and 1024 the insert rate decreased at high concurrency.

Oplog impact

This displays the ratio of the insert rates from the previous two sections. The ratio is the insert rate with the oplog on versus off. This ratio should be less than one but not too much less. The overhead of the oplog increases with the padding size. In the worst case the oplog reduces the insert rate by 5X (about 0.20 below). I expect that this overhead will be greatly reduced for WiredTiger and RocksDB in future releases.

Tuesday, June 9, 2015

RocksDB & ForestDB via the ForestDB benchmark: cached database

For this test I use a database smaller than RAM so it should remain cached even after space-amplification occurs. Tests were repeated with both a disk array and SSD as the database still needs to be made durable and some engines do more random IO for that. Tests were also run for N=10 and N=20 where that is the number of threads to use for the tests with concurrent readers or writers. The test server has 24 hyperthread cores. All tests used a database with 56M documents and ~100G block cache. All tests also set the ForestDB compaction threshold to 25%.

Disk array, 10 threads

This configuration used a disk array and 10 user threads (N=10) for the concurrency tests. Unlike the IO-bound/disk test the load here was faster for RocksDB. Had more documents been inserted eventually ForestDB would be faster.

RocksDB continues to be faster on the write-only tests (ows.1, ows.n, owa.1, owa.n). I did not spend much time trying to explain the difference.

For the point query test ForestDB is faster at 1 thread but much slower at 10 threads. I think the problem is mutex contention on the block cache. I present stack traces at the end of the post to explain this. For the range query tests RocksDB is always faster because ForestDB has to do more work to get the data and RocksDB benefits from a clustered index.

operations/second for each step

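| test | RocksDB | ForestDB |
|---|---|---|
| load | 133336 | 86453 |
| ows.1 | 4649 | 2623 |
| ows.n | 11479 | 8339 |
| pqw.1 | 63387 | 102204 |
| pqw.n | 531653 | 397048 |
| rqw.1 | 503364 | 24404 |
| rqw.n | 4062860 | 205627 |
| pq.1 | 99748 | 117481 |
| pq.n | 829935 | 458360 |
| rq.1 | 774101 | 292723 |
| rq.n | 5640859 | 1060490 |
| owa.1 | 75059 | 28082 |
| owa.n | 73922 | 27092 |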

The command lines are below. The config used 8 files for ForestDB.

bash rall.sh 56000000 log /disk 102400 64 10 600 3600 1000 1 rocksdb 20 no 1

bash rall.sh 56000000 log /disk 102400 64 10 600 3600 1000 1 fdb 20 no 8

SSD, 10 threads

This configuration used an SSD and 10 user threads (N=10) for the concurrency tests. The results are similar to the results above for the disk array with a few exceptions. RocksDB does worse on the load and write-only tests because the disk array has more IO throughput.

operations/second for each step

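| test | RocksDB | ForestDB |
|---|---|---|
| load | 46895 | 86328 |
| ows.1 | 2899 | 2131 |
| ows.n | 10054 | 6665 |
| pqw.1 | 63371 | 102881 |
| pqw.n | 525750 | 389205 |
| rqw.1 | 515309 | 23648 |
| rqw.n | 4032487 | 203822 |
| pq.1 | 99894 | 115806 |
| pq.n | 819258 | 454507 |
| rq.1 | 756546 | 294490 |
| rq.n | 5708140 | 1074295 |
| owa.1 | 30469 | 22305 |
| owa.n | 29563 | 20671 |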

The command lines are below. The config used 8 files for ForestDB.

bash rall.sh 56000000 log /ssd1 102400 64 10 600 3600 1000 1 rocksdb 20 no 1

bash rall.sh 56000000 log /ssd1 102400 64 10 600 3600 1000 1 fdb 20 no 8

SSD, 20 threads

This configuration used an SSD and 20 user threads (N=20) for the concurrency tests. RocksDB makes better use of the extra concurrency in the workload. In some cases throughput for ForestDB was already limited by mutex contention with N=10 and did not improve here.

operations/second for each step

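| test | RocksDB | ForestDB |
|---|---|---|
| load | 46357 | 85053 |
| ows.1 | 2987 | 2082 |
| ows.n | 13595 | 7263 |
| pqw.1 | 62684 | 102648 |
| pqw.n | 708154 | 354919 |
| rqw.1 | 510009 | 24122 |
| rqw.n | 5958109 | 253565 |
| pq.1 | 100403 | 117666 |
| pq.n | 1227031 | 387373 |
| rq.1 | 761143 | 294078 |
| rq.n | 8337013 | 1013277 |
| owa.1 | 30487 | 22219 |
| owa.n | 28972 | 21487 |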

The command lines are below. The config used 8 files for ForestDB.

bash rall.sh 56000000 log /ssd1 102400 64 20 600 3600 1000 1 rocksdb 20 no 1

bash rall.sh 56000000 log /ssd1 102400 64 20 600 3600 1000 1 fdb 20 no 8

Stack traces and nits

I used PMP to get stack traces to explain performance for some tests. I have traces for mutex contention and one other problem. I ended up reproducing one problem by reloading 1B docs into a database.

This thread stack shows mutex contention while creating iterators for range queries. This stack trace was common during the range query tests.

This thread stack shows mutex contention on the block cache during range queries. I am not certain, but I think this was from the point query tests.

This has 3 stack traces to show the stall on the commit code path where disk reads are done. That was a problem for ows.1, ows.n, owa.1 and owa.n.

Monday, June 8, 2015

RocksDB & ForestDB via the ForestDB benchmark: IO-bound and SSD

This test is similar to the previous result except the database was on an SSD device rather than disk. The SSD is a 400G Intel s3700 with 373G of usable space. The device can do more random IO and less sequential IO than the disk array. I ran an extra process to take 82G of RAM via mlock from the 144G RAM server to make it easier to have a database that is larger than RAM but still fits on the SSD.

The test used a database with 600M documents. I reduced the compaction threshold for ForestDB from 50% to 25% to reduce the worst case space-amplification from 2 to 4/3 and get more data into the test database. This change isn't reflected in the configuration template I published on GitHub. RocksDB was configured with an 8G block cache versus 16G for ForestDB. Otherwise the configuration was similar to the IO-bound/disk test.

Results

The difference in load performance is much wider here than on the disk array. I assume that write-amplification was the problem for RocksDB.

The difference in ows.1 and ows.n here is smaller than on the disk array. If ForestDB is doing random disk reads on the commit code path then the impact is much less for SSD because disk read latency is smaller. But RocksDB is still much faster for ows.1, ows.n, owa.1 and owa.n.

RocksDB continues to be faster for the point query tests (pqw.1, pqw.n, pq.1, pq.n). The difference is larger for the single threaded tests and I assume that ForestDB continues to do more disk reads per query. RocksDB is still much faster on the range query tests as explained in the previous post.

Unlike the test with the disk-array, the ForestDB tests with 1 writer thread were able to sustain 1000 writes/second as configured via the rate limit.

operations/second for each step

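| test | RocksDB | ForestDB |
|---|---|---|
| load | 24540 | 81297 |
| ows.1 | 3616 | 1387 |
| ows.n | 10727 | 2029 |
| pqw.1 | 3601 | 1805 |
| pqw.n | 22448 | 14069 |
| rqw.1 | 30477 | 1419 |
| rqw.n | 214060 | 13134 |
| pq.1 | 3969 | 2878 |
| pq.n | 24562 | 19133 |
| rq.1 | 30621 | 3805 |
| rq.n | 230673 | 23009 |
| owa.1 | 24742 | 1967 |
| owa.n | 22692 | 2319 |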

I had to repeat this test several times to find good values for the number of documents in the database and the compaction threshold for ForestDB. I was using 50% at first for the threshold and the database was doubling in size. That doubling, 2X space amplification, is expected with the threshold set to 50% so I reduced it to 25% which should have a worst case space amp of 4/3.

Unfortunately, with 64 database files and one compaction thread the worst case space amplification can be worse than theory predicts. All database files can trigger compaction at the same point in time, but only one will be compacted at a time by the one compaction thread. So others will get much more dead data than configured by the threshold.

I expect ForestDB to do much better when it supports concurrent compaction threads. The results below show the database size per test. There is more variance in the database size with ForestDB especially during the ows.1 and ows.n tests. This can make it harder to use most of the available space on a storage device.

Size in GB after each step

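| test | RocksDB | ForestDB |
|---|---|---|
| load | 151 | 228 |
| ows.1 | 149 | 340 |
| ows.n | 155 | 353 |
| pqw.1 | 155 | 316 |
| pqw.n | 155 | 290 |
| rqw.1 | 156 | 262 |
| rqw.n | 156 | 277 |
| pq.1 | 156 | 276 |
| pq.n | 156 | 277 |
| rq.1 | 156 | 277 |
| rq.n | 156 | 277 |
| owa.1 | 166 | 282 |
| owa.n | 177 | 288 |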

Command lines

Command lines for the tests are:

bash rall.sh 600000000 log /ssd1 8192 64 10 600 3600 1000 1 rocksdb 20 no 1

bash rall.sh 600000000 log /ssd1 16384 64 10 600 3600 1000 1 fdb 20 no 64

RocksDB & ForestDB via the ForestDB benchmark: IO-bound and disks

This has performance results for RocksDB and ForestDB using the ForestDB benchmark. The focus for this test is an IO-bound workload with a disk array. The database is about 3X larger than RAM. The server has 24 hyperthread cores, 144G of RAM and 6 disks (10k RPM SAS) using HW RAID 0. Background reading is in a previous post.

While RocksDB does much better in the results here I worked on this to understand differences in performance rather than to claim that RocksDB is superior. Hopefully the results here will help make ForestDB better.

Test setup

The test pattern was described in the previous post. Here I use shorter names for each of the tests:

- load - Load

- ows.1 - Overwrite-sync-1

- ows.n - Overwrite-sync-N

- pqw.1 - Point-query-1-with-writer

- pqw.n - Point-query-N-with-writer

- rqw.1 - Range-query-1-with-writer

- rqw.n - Range-query-N-with-writer

- pq.1 - Point-query-1

- pq.n - Point-query-N

- rq.1 - Range-query-1

- rq.n - Range-query-N

- owa.1 - Overwrite-async-1

- owa.n - Overwrite-async-N

I used these command lines with my fork of the ForestDB benchmark:

bash rall.sh 2000000000 log data 32768 64 10 600 3600 1000 1 rocksdb 20 no 1

bash rall.sh 2000000000 log data 32768 64 10 600 3600 1000 1 fdb 20 no 64

The common options include:

- load 2B documents

- use 32G for the database cache. The server has 144G of RAM.

- use N=10 for the tests with concurrency

- use a 600 second warmup and then run for 3600 seconds

- limit the writer thread to 1000/second for the with-writer tests

- range queries fetch ~20 documents

- do not use periodic_commit for the load

- use a 64M write buffer for all tests

- use one LSM tree

The ForestDB specific options include:

- use 64 database files to reduce the max file size. This was done to give compaction a better chance of keeping up and to avoid temporarily doubling the size of the database during compaction.

The first result is the average throughput during the test as the operations/second rate. I have written previously about benchmarketing vs benchmarking and average throughput leaves out the interesting bits like response time variance. Alas, my time to write this is limited too.

ForestDB is slightly faster for the load. Even with rate limiting RocksDB incurs too much IO debt during this load. I don't show it here but the compaction scores for levels 0, 1 and 2 in the LSM were higher than expected given the rate limits I used. We have work-in-progress to fix that.

For the write-only tests (ows.1, ows.n, owa.1, owa.n) RocksDB is much faster than ForestDB. From the rates below it looks like ForestDB might be doing a disk read per write because I can get ~200 disk reads / second from 1 thread. I collected stack traces from other tests that showed disk reads in the commit code path so I think that is the problem here. I will share the stack traces in a future post.

RocksDB does much better on the range query tests (rqw.1, rqw.n, rq.1, rq.n). With ForestDB data for adjacent keys is unlikely to be adjacent in the database file unless it was loaded in that order and not updated after the load. So range queries might do 1 disk seek per document. With RocksDB we can assume that all data was in cache except for the max level of the LSM. And for the max level data for adjacent keys is adjacent in the file. So RocksDB is unlikely to do more than 1 disk seek per short range scan.

I don't have a good explanation for the ~2X difference in point query QPS (pqw.1, pqw.n, pq.1, pq.n). The database is smaller with RocksDB, but not small enough to explain this. For pq.1, the single-threaded point-query test, both RocksDB and ForestDB were doing ~184 disk reads/second with similar latency of ~5ms/read. So ForestDB was doing almost 2X more disk reads / query. I don't understand ForestDB file structures well enough to explain that.
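The arithmetic behind the "almost 2X more disk reads / query" estimate can be checked with a few lines of Python. This is only a sketch: the function names are hypothetical, the ~184 reads/second and ~5ms figures are the approximate values quoted above, and the pq.1 rates (223 for RocksDB, 120 for ForestDB) come from the results table in this post.

```python
def reads_per_query(disk_reads_per_sec, queries_per_sec):
    """Average number of physical disk reads needed per query."""
    return disk_reads_per_sec / queries_per_sec


def serial_reads_per_sec(latency_ms):
    """Upper bound on reads/second for one thread doing one read at a time."""
    return 1000.0 / latency_ms


DISK_READS_PER_SEC = 184  # approximate, measured for both engines in pq.1

rocksdb = reads_per_query(DISK_READS_PER_SEC, 223)   # pq.1 QPS for RocksDB
forestdb = reads_per_query(DISK_READS_PER_SEC, 120)  # pq.1 QPS for ForestDB

if __name__ == "__main__":
    print(f"RocksDB:  {rocksdb:.2f} disk reads/query")
    print(f"ForestDB: {forestdb:.2f} disk reads/query")
    print(f"ForestDB / RocksDB: {forestdb / rocksdb:.2f}")
    # One thread at ~5ms per read cannot exceed about 200 reads/second.
    print(f"serial read limit: {serial_reads_per_sec(5):.0f} reads/second")
```

A value below 1 read per query for RocksDB is consistent with part of the data being served from cache.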

It is important to distinguish between logical and physical IO when trying to explain RocksDB IO performance. Logical IO means that a file read is done but the data is in the RocksDB block cache or OS cache. Physical IO means that a file read is done and the data is not in cache. For this configuration all levels before the max level of the LSM are in cache for RocksDB and some of the max level is in cache as the max level has 90% of the data.

For the tests that used 1 writer thread limited to 1000 writes/second RocksDB was able to sustain that rate. For ForestDB the writer thread only did ~200 writes/second.

operations/second for each step

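| test | RocksDB | ForestDB |
|---|---|---|
| load | 58137 | 69579 |
| ows.1 | 4251 | 289 |
| ows.n | 11836 | 295 |
| pqw.1 | 232 | 123 |
| pqw.n | 1228 | 654 |
| rqw.1 | 3274 | 48 |
| rqw.n | 17770 | 377 |
| pq.1 | 223 | 120 |
| pq.n | 1244 | 678 |
| rq.1 | 2685 | 206 |
| rq.n | 16232 | 983 |
| owa.1 | 56846 | 149 |
| owa.n | 49078 | 224 |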

I looked at write-amplification for the ows.1 test. I measured the average rates for throughput and write-KB/second from iostat and divided the IO rate by the throughput to get write-KB/update. The IO write-rate per update is about 2X higher with RocksDB.

| engine | throughput | write-KB/s | write-KB/update |
|---|---|---|---|
| RocksDB | 4252 | 189218 | 44.5 |
| ForestDB | 289 | 6099 | 21.1 |
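The write-KB/update column is the iostat write rate divided by the update rate. A small Python sketch of that division, using the two rows above (the function name is made up for illustration):

```python
def write_kb_per_update(write_kb_per_sec, updates_per_sec):
    """KB written to storage per update, from iostat and throughput averages."""
    return write_kb_per_sec / updates_per_sec


rocksdb = write_kb_per_update(189218, 4252)
forestdb = write_kb_per_update(6099, 289)

if __name__ == "__main__":
    print(f"RocksDB:  {rocksdb:.1f} KB/update")
    print(f"ForestDB: {forestdb:.1f} KB/update")
    print(f"RocksDB / ForestDB: {rocksdb / forestdb:.2f}")
```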

The next result is the size of the database at the end of each test step. Both were stable for most tests but RocksDB had trouble with the owa.1 and owa.n tests. These tests used threshold=50 for ForestDB which allows for up to 2X space amplification per database file. There were 64 database files. But we don't see 2X growth in this configuration.

Size in GB after each step

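| test | RocksDB | ForestDB |
|---|---|---|
| load | 498 | 776 |
| ows.1 | 492 | 768 |
| ows.n | 500 | 810 |
| pqw.1 | 501 | 832 |
| pqw.n | 502 | 832 |
| rqw.1 | 502 | 832 |
| rqw.n | 503 | 832 |
| pq.1 | 503 | 832 |
| pq.n | 503 | 832 |
| rq.1 | 503 | 832 |
| rq.n | 503 | 832 |
| owa.1 | 529 | 832 |
| owa.n | 560 | 832 |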

RocksDB & ForestDB via the ForestDB benchmark, part 1

ForestDB was announced with some fanfare last year including the claim that it was faster than RocksDB. I take comparisons to RocksDB as a compliment, and then get back to work. This year I met the author of the ForestDB algorithm. He is smart and there are more talented people on the ForestDB effort. It would be great to see a MongoDB engine for ForestDB.

The script rall.sh runs tests using a pattern that is interesting to me. The pattern is listed below. The first step loads N documents and this takes a variable amount of time. The steps that follow run for a fixed amount of time. All of the tests used uniform random RNG to generate keys. The script also uses "numactl --interleave=all" given that I was using dual socket servers and a lot of RAM in many cases. The script also collects iostat and vmstat to help explain some performance differences.

I started with an IO-bound configuration and data on disk and ForestDB was getting about 1.5X more QPS than RocksDB for a read-only point-query workload. I had two servers with identical hardware and this result was reproduced on both servers. So it was time to debug. The test used one reader thread, so this result wasn't ruined by using the same RNG seed for all threads.

I started by confirming that the ratio of disk reads per query was similar for RocksDB and ForestDB. Each did about 1.5 queries per disk read courtesy of cached data. However the average disk read latency reported by iostat was about 1.5X larger for RocksDB. Why was the disk faster for ForestDB?

Next I used strace to see the system calls to read blocks. I learned that page reads for data were 4kb and aligned with ForestDB. They were usually less than 4kb and not aligned with RocksDB. Both use buffered IO. From iostat the average read size was 4kb for ForestDB and about 8kb for RocksDB. Many of the unaligned almost 4kb reads crossed alignment boundaries so the unaligned ~4kb read required an 8kb read from disk. This could be a performance problem on a fast SSD but there is not much difference between 4kb and 8kb reads from a disk array and I re-confirmed that via tests with fio. I also used blktrace to get more details about the IO pattern and learned that my servers were using the CFQ IO scheduler. I switched to deadline.
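To see why an unaligned read of almost 4kb can turn into an 8kb read from disk, count the 4kb pages that the byte range touches. This is a minimal sketch that assumes buffered IO fetches whole, aligned 4kb pages; the function names and the example offsets are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size for buffered IO


def pages_touched(offset, length, page_size=PAGE_SIZE):
    """Number of aligned pages overlapped by the range [offset, offset+length)."""
    first = offset // page_size
    last = (offset + length - 1) // page_size
    return last - first + 1


def bytes_read_from_disk(offset, length, page_size=PAGE_SIZE):
    """Bytes fetched from storage when whole pages must be read."""
    return pages_touched(offset, length, page_size) * page_size


if __name__ == "__main__":
    # Aligned 4kb read: one page.
    print(bytes_read_from_disk(8192, 4096))
    # Unaligned read of slightly less than 4kb that crosses a page boundary.
    print(bytes_read_from_disk(6000, 3900))
```

A read shorter than 4kb only stays within one page when it does not straddle a page boundary, which unaligned blocks frequently do.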

At this point I was stuck. Somehow ForestDB was getting faster reads from disk or the read pattern wasn't as random as I thought it would be. So I read more of the benchmark client and saw that point reads were done in a batch where the key for the first document per batch was selected at random (call it N) and then the keys for the rest of the batch were N+1, N+2, N+3, etc. This gives ForestDB an advantage when only one database file is used because with M files the reads in one batch might use different files. The load and query code both share a function that converts an integer to a key, so N here is an integer and the key is something that includes a hash of the integer. This means that the key for N=3 is not adjacent to the key for N=4. However, the data file for ForestDB is log structured and immediately after the load the data for N=3 is adjacent to the data for N=4 unless updates were done after the load to either of the documents.

At last I understood the cause. ForestDB was benefiting from fetching data that was adjacent in the file. The benefit came from caching either in the HW RAID device or disks. I changed the test configuration to use batchsize=1 for point queries and then ForestDB and RocksDB began to experience the same average read latency from the disk array.
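The effect can be illustrated with a toy model in Python. It is not the benchmark's code: `key_for` is a hypothetical stand-in for the shared integer-to-key function (here a SHA-256 prefix), the "log" is just a list in load order, and the sorted key order stands in for a clustered index.

```python
import hashlib


def key_for(n):
    """Hypothetical integer-to-key function: a hash prefix plus the integer."""
    digest = hashlib.sha256(str(n).encode()).hexdigest()
    return f"{digest[:16]}-{n}"


def load(count):
    """Append documents in integer order and record where each key lands."""
    log = [key_for(n) for n in range(count)]
    # Position in the append-only data file right after the load.
    offset_of = {key: i for i, key in enumerate(log)}
    # Position in key order, as in an index clustered on the key.
    rank_of = {key: r for r, key in enumerate(sorted(log))}
    return offset_of, rank_of


def batch_keys(start, batch_size):
    """Keys for one batch: integers start, start+1, ... converted to keys."""
    return [key_for(n) for n in range(start, start + batch_size)]


offset_of, rank_of = load(100_000)
batch = batch_keys(5000, 4)
log_offsets = [offset_of[k] for k in batch]  # adjacent in the log
key_ranks = [rank_of[k] for k in batch]      # scattered in key order

if __name__ == "__main__":
    print("log offsets:", log_offsets)
    print("key ranks:  ", key_ranks)
```

With a batch size of 1 every lookup starts from a fresh random integer, so neither layout benefits from adjacency.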

ForestDB

I used the ForestDB benchmark to evaluate RocksDB and ForestDB. Results from the tests will be shared in future posts. Here I describe my experience running the tests. I forked the ForestDB benchmark as I made general and RocksDB-specific improvements to it.

ForestDB is tree-structured for the index and log-structured for the data. Everything is copy-on-write so there is one database and no log. This is kind of like Bitcask except Bitcask is hash structured for the index and the index isn't persistent. The write-amplification vs space-amplification tradeoff is configurable via the threshold parameter that sets the percentage of dead data allowed in the database file before the compaction thread will copy out live data into a new file. For a uniform distribution of writes to keys, space-amp is 2 and write-amp is 2 with threshold=50%. Reducing threshold to 25% means that space-amp is 4/3 and write-amp is 4. With leveled compaction in RocksDB we usually get higher write-amplification and lower space-amplification compared to ForestDB.
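One simple model reproduces both data points above: if compaction runs when a fraction t of the file is dead data, then space-amp is 1 / (1 - t) and write-amp is 1 / t. The Python sketch below encodes that model; it is an assumption that matches the numbers quoted here for uniform writes, not a description of ForestDB internals.

```python
from fractions import Fraction


def space_amp(threshold):
    """Worst-case file size relative to live data when a fraction
    `threshold` of the file is allowed to be dead data."""
    return 1 / (1 - threshold)


def write_amp(threshold):
    """Bytes written per byte of user data: the original write plus the
    live data copied by compaction to reclaim the dead fraction."""
    return 1 / threshold


if __name__ == "__main__":
    for t in (Fraction(1, 2), Fraction(1, 4)):
        print(f"threshold={t}: space-amp={space_amp(t)}, write-amp={write_amp(t)}")
```

Using `Fraction` keeps 4/3 exact rather than printing a rounded float.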

The ForestDB database can be sharded into many files per process. I am not sure if transactions can span files. There is only one compaction thread regardless of the number of files and during my tests it was easy for compaction to fall behind. Single-threaded compaction might be stalled by file reads, decompression after file reads and compression before file writes. I think that for every key read during compaction, the index must be checked to confirm the key is live, so this can be another reason for compaction to fall behind. I assume work can be done to make this better.

ForestDB benchmark client

I forked the ForestDB benchmark client. When I get more time I will try to push changes upstream. Here I describe changes I made to it using the git commit log:

- e30129 - link with tcmalloc to get better performance and change the library link order

- 1c4ecb - accept benchmark config filename on the command line

- 3b3a3c - add population:compaction_wait option to determine whether to wait for IO to settle after the load. Their benchmark results include some discussion of whether the test should wait for IO to settle after doing a load. While I don't think the test should wait for all IO to stop, I think it is important to penalize an engine that incurs too much IO debt during the load phase, which will make queries that follow much slower. This is still work-in-progress for RocksDB although setting the options hard_rate_limit and soft_rate_limit makes this much less of an issue.

- aac5ba - add population:load option to determine whether the population (load) step should be done. This replaces a command line flag.

- aa467b - adds the function couchstore_optimize_for_load to let an engine optimize for load performance. The RocksDB version uses the vector memtable for loads and the skiplist memtable otherwise. This also adds the function couchstore_set_wal to enable/disable redo logging. Finally this adds a better configuration in all cases for RocksDB. I know that it isn't easy to configure RocksDB. The changes include:

- set max_bytes_for_level_base to 512M. It is 10M by default. This is the size of the L1.

- set target_file_size_base to 32M. It is 2M by default. This is the size of the files used for levels L1 and larger.

- enable level_compaction_dynamic_level_bytes. This is a big improvement to the algorithm for leveled compaction. We need a blog post to explain it.

- set stats_dump_period_sec to 60. The default is 600 and I want more frequent stats.

- set block_size to 8kb. The default is 4kb before compression and I don't want to do ~2kb disk reads. Nor do I want a block with only four 1kb docs as that means the block index is too large.

- set format_version to 2. The default is 0 and the RocksDB block cache can waste memory when this is 0. This might deserve a blog post from the RocksDB team.

- set max_open_files to 50000. The default is 5000 and I want to cache all database files when possible.

- 3934e4 - set hard_rate_limit to 3.0 and soft_rate_limit to 2.5. The default is to not use rate limits. When set these limit how much IO debt a level can incur and then user writes are throttled when the debt is too large. Debt in this case is when the level has too much data. The size of L1 should be max_bytes_for_level_base. Setting soft_rate_limit to 2.5 means that writes are delayed when the level gets to 2.5X of the target size. Setting hard_rate_limit to 3.0 means that writes are stopped when it gets to 3.0X of the target size. We have work-in-progress to make throttling much better as it can lead to intermittent stalls (bad p99) for write-heavy workloads. This also disables compression for levels 0 and 1 in the LSM to reduce the chance of compaction stalls.

- a6f8d8 - this reduces the duration for which a spin lock is held in the benchmark client. I was getting odd hangs while running valgrind and this change fixed the problem. AFAIK the change is correct too.

- cfce06 - use a different RNG seed per thread. Prior to this change all threads start with the same seed and can then generate the same sequence of key values. This can be really bad when trying to get IO-bound databases as it inflates the block cache hit rate. The LevelDB benchmark client has a different version of this problem. It uses a different seed per thread, but the seed per thread is constant. So if you restart the benchmark client then thread N generates the same sequence of keys that it generated on the last run. I fixed this in RocksDB (see the --seed option for db_bench).

- 5a4de1 - add a script to generate benchmark configuration files for different workload patterns. See the next section for a description of the patterns.

- 2b85fa - disable RocksDB WAL when operation:write_type=async is used. I reverted this part of the diff later. This also sets level0_slowdown_writes_trigger to 12 and level0_stop_writes_trigger to 16. The defaults were larger.

- eae3fa - adds a helper script to run the pattern of tests. This is described in Benchmark pattern.

- 871e63, e9909a - ForestDB wasn't ever doing fsync/fdatasync during the load phase. This fixes that. With these changes write-amplification is much larger for ForestDB when periodic_commit is enabled and write_type=sync. These changes provide the following behavior for existing config options:

- population:periodic_commit - for ForestDB when set there is a commit per insert batch and when not set there is a commit at the end of the load. This has not changed. For RocksDB there is always one write batch per insert batch, but when set the WAL is used and when not set the WAL is not used. This does not control whether fsync/fdatasync are used. When periodic_commit is used then a load might be restartable when it fails in the middle. When not used, then a failure means load must start over.

- operation:write_type - when set to sync then fsync/fdatasync are done per commit. This is a change for ForestDB but not for RocksDB.
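
The rate limits from commit 3934e4 can be pictured with a small sketch. This is not RocksDB code. It only restates the description above, and the function name `write_throttle` and the choice of `>=` at the boundaries are assumptions made for illustration.

```python
MB = 1024 * 1024
L1_TARGET = 512 * MB  # max_bytes_for_level_base from the list above


def write_throttle(level_bytes, target_bytes,
                   soft_rate_limit=2.5, hard_rate_limit=3.0):
    """Classify user writes as 'ok', 'delay' or 'stop' for one level.

    The debt of a level is modeled as its size divided by its target size.
    """
    ratio = level_bytes / target_bytes
    if ratio >= hard_rate_limit:
        return "stop"   # writes are stopped until compaction catches up
    if ratio >= soft_rate_limit:
        return "delay"  # writes are slowed down
    return "ok"


for size_mb in (600, 1300, 1600):
    print(size_mb, write_throttle(size_mb * MB, L1_TARGET))
```

With a 512M target for L1, writes would be delayed once L1 reaches 1280M and stopped at 1536M under this model.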

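The seed problem fixed by commit cfce06 is easy to reproduce outside the benchmark client. The sketch below uses Python's `random` module as a stand-in for the client's RNG. The base seed, thread count and helper name `first_keys` are hypothetical.

```python
import random

BASE_SEED = 42     # hypothetical base seed
NUM_THREADS = 4


def first_keys(seed, count=5, num_docs=1_000_000):
    """Return the first few document numbers a thread would request."""
    rng = random.Random(seed)
    return [rng.randrange(num_docs) for _ in range(count)]


# Before the fix: every thread starts from the same seed.
shared = [first_keys(BASE_SEED) for _ in range(NUM_THREADS)]

# After the fix: each thread derives its own seed.
per_thread = [first_keys(BASE_SEED + t) for t in range(NUM_THREADS)]

print("distinct sequences, shared seed:", len({tuple(k) for k in shared}))
print("distinct sequences, per-thread seed:", len({tuple(k) for k in per_thread}))
```

With a shared seed all threads request the same keys, so every thread after the first mostly hits blocks that are already cached. A constant per-thread seed still repeats across restarts, which is the LevelDB variant of the problem described above.
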
Benchmark pattern

The script rall.sh runs tests using a pattern that is interesting to me. The pattern is listed below. The first step loads N documents and this takes a variable amount of time. The steps that follow run for a fixed amount of time. All of the tests used a uniform RNG to generate keys. The script also uses "numactl --interleave=all" given that I was using dual socket servers and a lot of RAM in many cases. The script also collects iostat and vmstat to help explain some performance differences.

- Load - this is the populate step. The PK value is hashed from an increasing counter (from 1 to N) so the load isn't in PK order. The test is configured to not wait for IO to settle when the load ends and if the engine has too much IO debt then the tests that follow can suffer.

- Overwrite-sync-1 - uses one writer thread to update docs. It does an fsync on commit.

- Overwrite-sync-N - like Overwrite-sync-1 but uses N writer threads.

- Point-query-1-with-writer - uses 1 reader thread that does point queries and 1 writer thread that updates docs. There is a rate limit for the writer. Read performance with some writes in progress is more realistic than without writes in progress especially for write-optimized database engines like RocksDB. More info on that topic is here.

- Point-query-N-with-writer - like Point-query-1-with-writer but uses N reader threads.

- Range-query-1-with-writer - like Point-query-1-with-writer but does range queries

- Range-query-N-with-writer - like Range-query-1-with-writer but uses N reader threads

- Point-query-1 - like Point-query-1-with-writer, but without a writer thread

- Point-query-N - like Point-query-N-with-writer, but without a writer thread

- Range-query-1 - like Range-query-1-with-writer, but without a writer thread

- Range-query-N - like Range-query-N-with-writer, but without a writer thread

- Overwrite-async-1 - like Overwrite-sync-1 but does not do fsync-on-commit. This was moved to the end of the test sequence because it frequently made compaction get too far behind in ForestDB.

- Overwrite-async-N - like Overwrite-sync-N but does not do fsync-on-commit. Also moved to the end like Overwrite-async-1.

Benchmark configuration

My test scripts use a template for the benchmark configuration that is specialized for each run. The gen_config.sh script has a few test-specific changes especially for the load test. Changes that I made from one of the published configurations include:

- set db_config:bloom_bits_per_key to 10 to use bloom filters with RocksDB

- set threads:seed_per_thread to 1 to use different RNG seeds per thread

- set body_length:compressibility=50

- use operation:batch_distribution=uniform. Eventually I will try others.

- use operation:batchsize_distribution=uniform with batchsize 1 (read_batchsize_*_bound) for point queries and a configurable batchsize (iterate_batchsize_*_bound) for range queries. In the Benchmark debugging section I will explain why I do this.

Benchmark debugging

I started with an IO-bound configuration and data on disk and ForestDB was getting about 1.5X more QPS than RocksDB for a read-only point-query workload. I had two servers with identical hardware and this result was reproduced on both servers. So it was time to debug. The test used one reader thread, so this result wasn't ruined by using the same RNG seed for all threads.

I started by confirming that the ratio of disk reads per query was similar for RocksDB and ForestDB. Each did about 1.5 queries per disk read courtesy of cached data. However the average disk read latency reported by iostat was about 1.5X larger for RocksDB. Why was the disk faster for ForestDB?

Next I used strace to see the system calls to read blocks. I learned that with ForestDB the page reads for data were 4kb and aligned. With RocksDB they were usually less than 4kb and not aligned. Both use buffered IO. From iostat the average read size was 4kb for ForestDB and about 8kb for RocksDB. Many of the unaligned almost 4kb reads crossed alignment boundaries so the unaligned ~4kb read required an 8kb read from disk. This could be a performance problem on a fast SSD but there is not much difference between 4kb and 8kb reads from a disk array and I re-confirmed that via tests with fio. I also used blktrace to get more details about the IO pattern and learned that my servers were using the CFQ IO scheduler. I switched to deadline.
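
The 4kb versus 8kb difference follows from rounding each request out to whole pages. The sketch below assumes a 4kb page size for buffered IO. The offsets and the function name `bytes_read_from_disk` are made up for illustration.

```python
PAGE = 4096  # assumed page size for buffered IO


def bytes_read_from_disk(offset, length, page=PAGE):
    """Bytes fetched when a read is rounded out to whole pages."""
    first_page = offset // page
    last_page = (offset + length - 1) // page
    return (last_page - first_page + 1) * page


aligned = bytes_read_from_disk(8192, 4096)    # aligned 4kb read
unaligned = bytes_read_from_disk(9000, 3900)  # almost 4kb, crosses a boundary
print("aligned:", aligned)
print("unaligned:", unaligned)
```

The second request is smaller than 4kb but touches two pages, so it costs an 8kb read.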

At this point I was stuck. Somehow ForestDB was getting faster reads from disk or the read pattern wasn't as random as I thought it would be. So I read more of the benchmark client and saw that point reads were done in a batch where the key for the first document per batch was selected at random (call it N) and then the keys for the rest of the batch were N+1, N+2, N+3, etc. This gives ForestDB an advantage when only one database file is used because with M files the reads in one batch might use different files. The load and query code both share a function that converts an integer to a key, so N here is an integer and the key is something that includes a hash of the integer. This means that the key for N=3 is not adjacent to the key for N=4. However, the data file for ForestDB is log structured and immediately after the load the data for N=3 is adjacent to the data for N=4 unless updates were done after the load to either of the documents.

At last I understood the cause. ForestDB was benefiting from fetching data that was adjacent in the file. The benefit came from caching either in the HW RAID device or disks. I changed the test configuration to use batchsize=1 for point queries and then ForestDB and RocksDB began to experience the same average read latency from the disk array.
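
The effect can be shown with a toy model of the two layouts. This is a simplified sketch, not the benchmark client or either engine: `make_key` stands in for the shared integer-to-key function, SHA-256 is an arbitrary choice of hash, and positions are document slots rather than byte offsets.

```python
import hashlib

NUM_DOCS = 1000


def make_key(n):
    """Stand-in for the benchmark's integer-to-key function."""
    return hashlib.sha256(str(n).encode()).hexdigest()


# Log-structured data file: documents sit in the order they were loaded.
log_position = {n: n - 1 for n in range(1, NUM_DOCS + 1)}

# Key-sorted layout: documents sit in order of their hashed key.
sorted_ids = sorted(range(1, NUM_DOCS + 1), key=make_key)
sorted_position = {n: i for i, n in enumerate(sorted_ids)}


def batch_positions(first, batchsize, position):
    """Positions touched by a batch that reads N, N+1, N+2, ..."""
    return [position[n] for n in range(first, first + batchsize)]


print("log-structured:", batch_positions(500, 4, log_position))
print("key-sorted:    ", batch_positions(500, 4, sorted_position))
```

In the log-structured layout a batch touches neighboring slots, so readahead or a device cache can serve most of the batch after the first read. In the key-sorted layout the same batch is scattered. With batchsize=1 neither layout gets that benefit.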
