With help from a Postgres expert (Peter Geoghegan) I was able to confirm that there wasn't a performance regression for Postgres 14 in a few of the Insert Benchmark steps, as I had started to report in a previous blog post. The results here are from a small server for both cached and IO-bound workloads and replace my previous blog posts (cached, IO-bound).

The reason for the false alarm is that index cleanup was skipped during vacuum starting with Postgres 14 and the impact is that the optimizer had more work to do (more not-visible index entries to skip) in the get_actual_variable_range function. Output like this from the vacuum command makes that obvious:

table "pi1": index scan bypassed: 48976 pages from table (0.62% of total) have 5000000 dead item identifiers

The problem is solved by adding INDEX_CLEANUP ON to the vacuum command.
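To make the check above concrete, here is a minimal sketch that scans vacuum output for the "index scan bypassed" message. The regular expression is written against the single line quoted above and is only an assumption about the general shape of that message. The helper name parse_index_scan_bypassed is hypothetical, and the SQL string simply spells out the INDEX_CLEANUP ON option for the table named in the log line.

```python
import re

# Pattern written against the one log line quoted above; other Postgres
# versions may word this message differently (assumption).
BYPASS_RE = re.compile(
    r'table "(?P<table>[^"]+)": index scan bypassed: '
    r'(?P<pages>\d+) pages from table \((?P<pct>[\d.]+)% of total\) '
    r'have (?P<dead>\d+) dead item identifiers'
)

# Vacuum statement that requests index cleanup for the table in the log line.
VACUUM_WITH_CLEANUP = "VACUUM (INDEX_CLEANUP ON) pi1"


def parse_index_scan_bypassed(line):
    """Return details when a vacuum log line reports a bypassed index scan, else None."""
    match = BYPASS_RE.search(line)
    if match is None:
        return None
    return {
        "table": match.group("table"),
        "pages": int(match.group("pages")),
        "pct_of_table": float(match.group("pct")),
        "dead_items": int(match.group("dead")),
    }


line = ('table "pi1": index scan bypassed: 48976 pages from table '
        '(0.62% of total) have 5000000 dead item identifiers')
info = parse_index_scan_bypassed(line)
if info is not None:
    print(f"index cleanup skipped for {info['table']}; consider: {VACUUM_WITH_CLEANUP}")
```

A check like this could be run over the vacuum output that a benchmark script already captures, so that a skipped index cleanup is noticed before the results are interpreted.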

tl;dr

- All results here are from a low-concurrency workload on a small server (1 client, <= 3 connections, 8 cores). Results from a bigger server are pending.

- For cached workloads throughput improves a lot on all benchmark steps except point query (qp*) where from Postgres 9.0 to 16 it is slightly better on the SER4 server and stable to slightly slower on the SER7 server.

- For IO-bound workloads on the SER4 server from Postgres 9.0 through 16 the results are similar to the cached workload -- things improve a lot for all benchmark steps except point query (qp*).

- For IO-bound workloads on the SER7 server from Postgres 12 through 16 the throughput for range and point queries (qr*, qp*) is stable while there are some regressions for the write-heavy steps. Also there is a ~5% increase in CPU/operation from Postgres 12 through 16 on the random-write benchmark steps (l.i1, l.i2).

- For IO-bound workloads with the SER4 server many of the Postgres versions were unable to sustain the target write rates during the qr1000 and qp1000 benchmark steps (1000 inserts/s + 1000 deletes/s). This was not an issue on the SER7 server, which has a faster CPU and a better RAM / data ratio.

- The delete/s rate is between 5X and 20X larger for the l.i1 benchmark step vs the l.i2 step. The issue is that l.i1 deletes 10X more rows/statement and the impact of the optimizer CPU overhead is much worse during l.i2 -- see my comments below about the weird workload.

Update - I have claimed that InnoDB and MyRocks don't have this problem and that is more truthy than true because they had a problem from MVCC GC getting behind, but the problem shows up on a SELECT statement while Postgres has the problem with a DELETE statement.

See here for details.

Editorial

At a high-level there are several issues:

- The workload that triggers the issue is weird (uncommon)

- With MVCC GC in Postgres, garbage can remain in indexes for a while

- The Postgres optimizer can use too much CPU time in get_actual_variable_range to help figure out index selectivity even when there is only one good index for a SQL statement

First, the workload that triggers the issue is weird. I hope to rewrite the Insert Benchmark later this year to be less weird. The weirdness is that last year I enhanced the Insert Benchmark to optionally delete from tables at the same rate as inserts to keep the tables from growing too big. A too big table might make my tests fail when a disk is full. And a too big table means I can't run the cached (in-memory) variant of the test for longer periods of time. But the problem is that the enhancements meant I added statements like DELETE FROM foo WHERE pk_column > $a AND pk_column < $b. The constants $a and $b are usually in, or even less than, the histogram bucket with the smallest value for the column, which means that get_actual_variable_range then tries to read from the index to determine the current minimum value for that index.
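The cost described above can be illustrated with a toy model. This is not Postgres code and it ignores everything about the real get_actual_variable_range implementation. It only assumes an index stored in key order where the oldest keys have been deleted but not yet cleaned up, and it counts how many entries must be read before the first visible one is found. The function names are hypothetical.

```python
def make_index(n_dead, n_live):
    """Build a toy index: keys in ascending order, oldest keys already deleted."""
    dead = [(key, False) for key in range(n_dead)]
    live = [(key, True) for key in range(n_dead, n_dead + n_live)]
    return dead + live


def find_min_visible(index_entries):
    """Walk the index from the low end until the first visible entry.

    Returns (min_visible_key, entries_read); the key is None if nothing is visible.
    """
    entries_read = 0
    for key, visible in index_entries:
        entries_read += 1
        if visible:
            return key, entries_read
    return None, entries_read


for n_dead in (0, 1_000, 100_000):
    key, cost = find_min_visible(make_index(n_dead, n_live=10))
    print(f"dead={n_dead} min_visible_key={key} entries_read={cost}")
```

In this model the work per lookup grows linearly with the number of not-yet-removed entries at the low end of the index, which is the pattern produced by deleting the oldest rows at the same rate as inserting new ones.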

Second, with MVCC GC in Postgres, garbage can remain in indexes for longer than I want it to.

- MVCC GC in InnoDB is called purge and runs all of the time, although it can be slowed by read IO latency and temporarily halted when there is a long-open snapshot. But most of the time InnoDB will clean up (remove non-visible index entries) soon after transaction commit.

- Cleanup with Postgres has more lag. It can be done by vacuum with a lag of many hours. It can also be done by simple index deletion but only on page splits and my workload doesn't trigger page splits on DELETE. Perhaps the existing code can be updated to also trigger simple index deletion when an index leaf page is mostly or all non-visible entries. I risk writing nonsense about Postgres in this regard, and for better information see this video from Peter and this document page.

Third, the Postgres optimizer can use too much CPU time in get_actual_variable_range. I don't mind that get_actual_variable_range exists because it is useful for cases where index statistics are not current. But the problem is that for the problematic SQL statement (see the DELETE above and this blog post) there is only one good index for the statement. So I prefer the optimizer not do too much work in that case. I have experienced this problem a few times with MySQL. One of the fixes from upstream MySQL was to change the optimizer to do less work when there was a FORCE INDEX hint. And with some OLTP workloads where the same statements are so frequent I really don't want the optimizer to use extra CPU time. For the same reason, I get much better throughput from Postgres when prepared statements are enabled and now I always enable them for the range and point queries with Postgres during the insert benchmark, but not for MySQL (because they don't help much with MySQL).

Build + Configuration

See the previous report for more details. In all but one case (IO-bound on SER7) I tested these versions: 9.0.23, 9.1.24, 9.2.24, 9.3.25, 9.4.26, 9.5.25, 9.6.24, 10.23, 11.22, 12.17, 13.13, 14.10, 15.5, 16.1. For IO-bound on SER7 tests are from Postgres 12 through 16.

The configuration files are in subdirectories from here. Search for files named conf.diff.cx9a2_bee and conf.diff.cx9a2_ser7 which exist for each major version of Postgres.

The Benchmark

The benchmark is run with one client.

There are two test servers and the SER7 has a faster CPU. More info on the servers is here:

- Beelink SER4 with 8 AMD cores, 16G RAM, Ubuntu 22.04 and XFS using 1 m.2 device

- Beelink SER7 with 8 AMD cores, 32G RAM, Ubuntu 22.04 and XFS using 1 m.2 device

The benchmark steps are:

- l.i0

  - insert X million rows per table in PK order. The table has a PK index but no secondary indexes. There is one connection per client. With a cached workload the value of X is 30M for SER4 and 60M for SER7. With an IO-bound workload X is 800M for both because I forgot to make it larger for SER7.

- l.x

  - create 3 secondary indexes per table. There is one connection per client.

- l.i1

  - use 2 connections/client. One inserts XM rows and the other does deletes at the same rate as the inserts. Each transaction modifies 50 rows (big transactions). This step is run for a fixed number of inserts, so the run time varies depending on the insert rate. With a cached workload the value of X is 40M. With an IO-bound workload X is 4M.

- l.i2

  - like l.i1 but each transaction modifies 5 rows (small transactions) and inserts XM rows total. With a cached workload the value of X is 10M. With an IO-bound workload X is 1M.

- Vacuum the test table, do a checkpoint and wait ~Y seconds to reduce variance during the read-write benchmark steps that follow. The value of Y is based on the size of the table.

- qr100

  - use 3 connections/client. One does range queries for Z seconds and performance is reported for this. The second does 100 inserts/s and the third does 100 deletes/s. The second and third are less busy than the first. The range queries use covering secondary indexes. This step is run for a fixed amount of time. If the target insert rate is not sustained then that is considered to be an SLA failure. If the target insert rate is sustained then the step does the same number of inserts for all systems tested. For cached workloads the value of Z was 1800. For IO-bound on SER4 it was 1800 and on SER7 it was 7200.

- qp100

  - like qr100 except uses point queries on the PK index

- qr500

  - like qr100 but the insert and delete rates are increased from 100/s to 500/s

- qp500

  - like qp100 but the insert and delete rates are increased from 100/s to 500/s

- qr1000

  - like qr100 but the insert and delete rates are increased from 100/s to 1000/s

- qp1000

  - like qp100 but the insert and delete rates are increased from 100/s to 1000/s

Results

The performance reports are here for:

The summary has 3 tables. The first shows absolute throughput by DBMS tested X benchmark step. The second has throughput relative to the version on the first row of the table. The third shows the background insert rate for benchmark steps with background inserts and all systems sustained the target rates. The second table makes it easy to see how performance changes over time.

Below I use relative QPS to explain how performance changes. It is: (QPS for $me / QPS for $base) where $me is my version and $base is the version of the base case. When relative QPS is > 1.0 then performance improved over time. When it is < 1.0 then there are regressions. The Q in relative QPS measures:

- insert/s for l.i0, l.i1, l.i2

- indexed rows/s for l.x

- range queries/s for qr100, qr500, qr1000

- point queries/s for qp100, qp500, qp1000

Below I use colors to highlight the relative QPS values with red for <= 0.95, green for >= 1.05 and grey for values between 0.95 and 1.05.
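The relative QPS definition and the color thresholds above can be written down as a short sketch. The function names are hypothetical, and the sample values are the relative QPS numbers reported in this post for the write-heavy steps of the IO-bound SER7 results.

```python
def relative_qps(qps_me, qps_base):
    """Relative QPS: throughput for my version divided by throughput for the base case."""
    return qps_me / qps_base


def color_for(rel):
    """Map a relative QPS value to the highlight color used in the summary tables."""
    if rel <= 0.95:
        return "red"    # regression
    if rel >= 1.05:
        return "green"  # improvement
    return "grey"       # between 0.95 and 1.05


# Relative QPS for l.i0, l.x, l.i1, l.i2 (IO-bound, SER7) as reported in this post.
reported = {"l.i0": 1.00, "l.x": 1.14, "l.i1": 0.93, "l.i2": 0.88}
for step, rel in reported.items():
    print(f"{step}: relative QPS {rel:.2f} -> {color_for(rel)}")
```

With these thresholds a value of exactly 0.95 counts as red and a value of exactly 1.05 counts as green.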

- The base case is pg9023_def which means Postgres 9.0.23

- For the benchmark steps

- l.i0 - improves in Postgres 9.4 and 11.22 and then is stable

- l.x - improves in Postgres 9.6 and then is stable

- l.i1, l.i2 - improves in Postgres 12 through 14

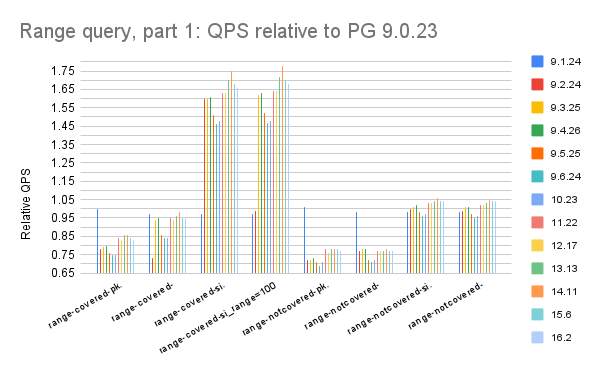

- qr100, qr500, qr1000 - slow but steady improvements from Postgres 9.2 through 16

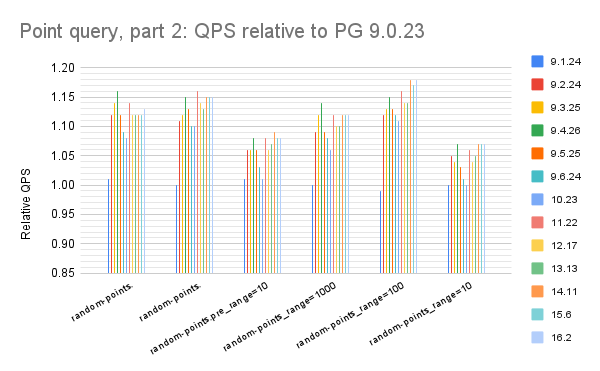

- qp100, qp500 - slow but steady improvements from Postgres 9.2 through 16

- qp1000 - stable from Postgres 9 through 16. Perhaps this is most affected by the CPU overhead from get_actual_variable_range.

- Comparing throughput in Postgres 16.2 to 9.0.23

- Write-heavy

- l.i0, l.x, l.i1, l.i2 - relative QPS is 1.22, 1.79, 3.38, 2.36

- Range queries

- qr100, qr500, qr1000 - relative QPS is 1.20, 1.19, 1.23

- Point queries

- qp100, qp500, qp1000 - relative QPS is 1.08, 1.10, 1.01

Results: cached, SER7

- The base case is pg9023_def which means Postgres 9.0.23

- For the benchmark steps

  - l.i0 - improves in Postgres 9.4 and 11.22 and then is stable

  - l.x - improves in Postgres 9.6 and 10 and then is stable

  - l.i1, l.i2 - improves in Postgres 12 through 14

  - qr100, qr500, qr1000 - improves in Postgres 9.2 and then is stable or slowly improving

  - qp100, qp500, qp1000 - improves in Postgres 9.2 through 9.5 and then slowly gets worse

- Comparing throughput in Postgres 16.2 to 9.0.23

  - Write-heavy

    - l.i0, l.x, l.i1, l.i2 - relative QPS is 1.34, 1.65, 3.12, 2.42

  - Range queries

    - qr100, qr500, qr1000 - relative QPS is 1.44, 1.62, 1.62

  - Point queries

    - qp100, qp500, qp1000 - relative QPS is 1.01, 0.92, 0.99

Results: IO-bound, SER4

- The base case is pg9023_def which means Postgres 9.0.23

- For the benchmark steps

  - l.i0 - improves in Postgres 11.22 and then is stable

  - l.x - improves in Postgres 9.4 through 10 and then is stable

  - l.i1, l.i2 - improves in Postgres 12 and then is stable

  - qr100, qr500, qr1000 - slowly improves from Postgres 9.2 through 11 and then is stable

  - qp100, qp500, qp1000 - same as qr100, qr500, qr1000

- Comparing throughput in Postgres 16.2 to 9.0.23

  - Write-heavy

    - l.i0, l.x, l.i1, l.i2 - relative QPS is 1.21, 2.29, 1.85, 1.85

  - Range queries

    - qr100, qr500, qr1000 - relative QPS is 1.15, 1.23, 1.36

  - Point queries

    - qp100, qp500, qp1000 - relative QPS is 0.99, 1.00, 0.98

Results: IO-bound, SER7

- I only have results from Postgres 12 through 16

- The read-write benchmark steps were run for 7200s vs 1800s above

- Looking at write rates over time for the l.i2 benchmark step, where write means insert/s and delete/s, the rates are ~195/s at the start of the benchmark step and ~155/s at the end. I assume the issue is that there is more garbage (non-visible index entries) in the PK index over time, so there is more CPU overhead from get_actual_variable_range having to read and skip them while figuring out the minimum visible value in the index during DELETE statements.

- The base case is pg1217_def which means Postgres 12.17. The improvements shown here don't match the results above for IO-bound on the SER4 server because it uses an older (9.0) base case

- For the benchmark steps

  - l.i0 - throughput is stable

  - l.x - throughput slowly improves from Postgres 13 through 16

  - l.i1, l.i2 - with some variance, throughput gets worse from Postgres 12 through 16. From vmstat results normalized by write rates I see a 4% to 7% increase in CPU/operation on SER7. If I limit myself to Postgres 12.17 through 16 then I also see a 5% to 8% increase on SER4.

  - qr100, qr500, qr1000 - throughput is stable

  - qp100, qp500, qp1000 - throughput is stable

- Comparing throughput in Postgres 16.2 to 12.17

  - Write-heavy

    - l.i0, l.x, l.i1, l.i2 - relative QPS is 1.00, 1.14, 0.93, 0.88

  - Range queries

    - qr100, qr500, qr1000 - relative QPS is 1.02, 1.00, 1.00

  - Point queries

    - qp100, qp500, qp1000 - relative QPS is 0.98, 0.98, 0.98

Target write rates

The third table in the summaries linked above shows the write rates sustained during the read-write benchmark steps. The target write rates are 100/s for qr100 and qp100, 500/s for qr500 and qp500 and then 1000/s for qr1000 and qp1000. Note that X/s means X inserts/s and X deletes/s. When the value is close enough to the target then I assume the target has been sustained. The table cells in red indicate the cases where the target has not been sustained.
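The "close enough" check above can be sketched as a small helper. The target rates come from the text, but the 5% tolerance is purely an assumption for illustration because the post does not state the threshold that was used. The names TARGET_RATES and sustained are hypothetical.

```python
# Target write rates per benchmark step (inserts/s, with the same rate for deletes/s).
TARGET_RATES = {
    "qr100": 100, "qp100": 100,
    "qr500": 500, "qp500": 500,
    "qr1000": 1000, "qp1000": 1000,
}


def sustained(step, measured_rate, tolerance=0.05):
    """Return True when the measured write rate is within `tolerance` of the target.

    The default tolerance is an assumed value, not the one used for the reports.
    """
    target = TARGET_RATES[step]
    return measured_rate >= target * (1.0 - tolerance)


# Hypothetical measured rates, only to show how the check behaves.
for step, rate in (("qr1000", 1000), ("qp1000", 700), ("qp100", 96)):
    status = "sustained" if sustained(step, rate) else "SLA failure"
    print(f"{step}: measured {rate}/s vs target {TARGET_RATES[step]}/s -> {status}")
```

Keeping the tolerance as a parameter makes it easy to see how sensitive the red cells are to the chosen cutoff.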

For cached workloads all versions sustained the target write rates.

For IO-bound workloads

- Note that SER4 and SER7 had the same amount of data, but SER7 has twice as much RAM so it was less IO-bound. And SER7 has a faster CPU.

- With SER4

- Postgres 9.x, 10 and 11 did not sustain the target write rates during qr1000 and qp1000

- Postgres 13, 14, 15.5 and 16 did not sustain the target write rates during qp1000 but they were close to the target

- With SER7

- All versions sustained the target write rates with SER7.