I previously tweeted about the performance improvements from the hyper clock cache for RocksDB. In this post I provide more info about my performance tests. This feature is new in RocksDB 7.7.3 and you can try it via db_bench --cache_type=hyper_clock_cache ....

The hyper clock cache feature implements the block cache for RocksDB and is expected to replace the LRU cache. As you can guess from the name, the hyper clock cache implements a variant of CLOCK cache management. The LRU implementation is sharded with a mutex per shard. Even with many shards (I use 64) there will be hot shards and mutex contention. The hyper clock cache avoids those problems, but I won't try to explain it here because I am not an expert on it.
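For readers who have not seen CLOCK before, here is a minimal sketch of the textbook algorithm in Python. It is not the RocksDB implementation, and the `ClockCache` class and its methods are names I made up for illustration. The point it tries to show is that a cache hit only sets a reference bit, while an LRU hit typically has to move an entry within a list while holding a lock.

```python
class ClockCache:
    """Textbook CLOCK cache (illustrative only, not RocksDB's hyper clock cache)."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.slots = []   # each slot is [key, value, referenced_bit]
        self.index = {}   # key -> position in self.slots
        self.hand = 0     # the clock hand: next slot to consider for eviction

    def get(self, key):
        pos = self.index.get(key)
        if pos is None:
            return None
        # A hit only sets a bit; nothing is reordered.
        self.slots[pos][2] = True
        return self.slots[pos][1]

    def put(self, key, value):
        pos = self.index.get(key)
        if pos is not None:
            self.slots[pos][1] = value
            self.slots[pos][2] = True
            return
        if len(self.slots) < self.capacity:
            self.index[key] = len(self.slots)
            self.slots.append([key, value, False])
            return
        # Sweep: entries with the bit set get a second chance and the bit is cleared.
        while self.slots[self.hand][2]:
            self.slots[self.hand][2] = False
            self.hand = (self.hand + 1) % self.capacity
        victim_key = self.slots[self.hand][0]
        del self.index[victim_key]
        self.slots[self.hand] = [key, value, False]
        self.index[key] = self.hand
        self.hand = (self.hand + 1) % self.capacity


cache = ClockCache(3)
for k, v in [("a", 1), ("b", 2), ("c", 3)]:
    cache.put(k, v)
cache.get("a")      # marks "a" as recently used
cache.put("d", 4)   # the sweep skips "a" and evicts the first unreferenced entry
```

In a concurrent version the reference bit can be updated with an atomic operation rather than under a mutex, which is the general reason CLOCK variants are attractive for hot caches. How the hyper clock cache does this in detail is beyond this sketch.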

At the time of writing this, nobody has claimed to be running this feature in production and the 7.7.3 release is new. I am not throwing shade at the feature, but it is new code and new DBMS code takes time to prove itself in production.

Experiments

My first set of results is from a server with 2 sockets, 40 cores and 80 HW threads (hyperthreading enabled). My second set of results is from a c2-standard-60 server in the Google cloud with 30 cores and hyperthreading disabled. The results from both are impressive.

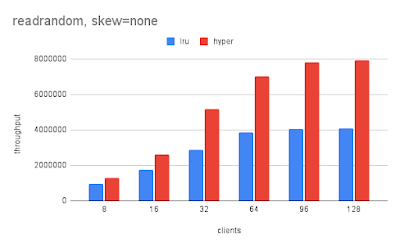

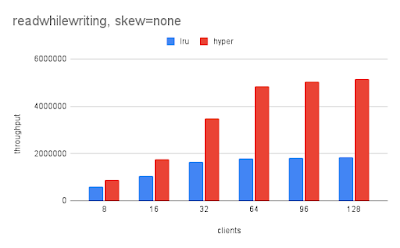

For the server with 80 HW threads the benchmarks were repeated at 8, 16, 32, 64, 96 and 128 clients. For the c2-standard-60 server the benchmarks were repeated at 8, 24 and 48 clients. The goal was to run the benchmarks with the CPU in three states: not saturated, saturated and oversubscribed. The database was cached by RocksDB in all cases.

I used a fork of benchmark.sh that is here and my fork repeats the test with 3 types of skew:

- no skew - keys accessed have a uniform distribution

- 64.512 skew - point (range) queries only access the first 64 (512) key-value pairs

- 8.64 skew - point (range) queries only access the first 8 (64) key-value pairs

For each type of skew, the following benchmarks are run:

- readrandom - N clients do point queries

- fwdrange - N clients do range queries. Each range query fetches 10 key-value pairs.

- readwhilewriting - like readrandom, but has an extra client that does writes

- fwdrangewhilewriting - like fwdrange, but has an extra client that does writes
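To illustrate why skew leads to hot shards in a sharded cache, here is a rough sketch in Python. It assumes a shard is picked from the low bits of a hash of the key, and `shard_for` is a hypothetical mapping rather than the one RocksDB uses. It also treats every hot key as its own cache entry, which is an upper bound: the block cache stores blocks, and adjacent keys can share a data block, so real access can be even more concentrated.

```python
import hashlib

NUM_SHARD_BITS = 6                  # 2**6 = 64 shards, the count used in this post
NUM_SHARDS = 1 << NUM_SHARD_BITS


def shard_for(key: int) -> int:
    """Hypothetical shard mapping: low bits of a hash of the key."""
    digest = hashlib.sha256(str(key).encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def shards_touched(keys) -> int:
    """Number of distinct shards hit when every key in `keys` is accessed."""
    return len({shard_for(k) for k in keys})


uniform_shards = shards_touched(range(100_000))  # stand-in for "no skew"
skew_64_shards = shards_touched(range(64))       # point queries, 64.512 skew
skew_8_shards = shards_touched(range(8))         # point queries, 8.64 skew

print(f"no skew:     {uniform_shards} of {NUM_SHARDS} shards touched")
print(f"64.512 skew: {skew_64_shards} of {NUM_SHARDS} shards touched")
print(f"8.64 skew:   {skew_8_shards} of {NUM_SHARDS} shards touched")
```

With 8 hot keys at most 8 of the 64 shards can ever be touched, so all clients queue on a few mutexes no matter how many shards are configured.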

The results are impressive even for the workloads without skew.

Very nice work, RocksDB team. Thanks Mark for the update. Is it possible to also show results with fewer shards for the cache? (Sharding may be redundant in the clock cache.)

I will consider that when I revisit the hyper clock cache in a future round of benchmarks.