This post has results for vector index support in MariaDB and Postgres. This work was done by Small Datum LLC and sponsored by the MariaDB Corporation. This is part 2 in a series that compares QPS and recall for the fashion-mnist-784-euclidean dataset using from 1 to 48 concurrent sessions on a large server. This is part 2 and part 1 is here.

The purpose of this post is to explain the results I shared in part 1 where MariaDB does much better than pgvector at low and high concurrency but the performance gap isn't as large at medium concurrency where low means <= 4 concurrent sessions, medium means 8 to 20 and high means >= 24.

tl;dr

- QPS is ~1.4X larger for MariaDB than for pgvector at 2 and 48 concurrent sessions

- pgvector uses more CPU/query than MariaDB

- MariaDB does more context switches /query than pgvector

- MariaDB appears to use less CPU to compute euclidean distance

Benchmark

For MariaDB:

- queries use ORDER by vec_distance_euclidean

- create index uses DISTANCE=euclidean

For Postgres

- queries use ORDER BY embedding::halfvec(...) <-> $name::halfvec(...)

- create index uses USING hnsw ((embedding::halfvec(...)) halfvec_l2_ops

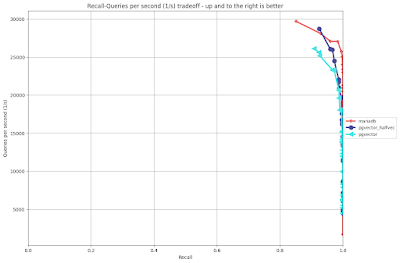

Results: QPS by concurrency

The charts in this section show QPS by concurrency level as the benchmark was repeated for 1 to 48 concurrent sessions (X concurrent sessions means X concurrent queries).

The charts come from this spreadsheet. All of the data from the benchmark is here and the data I scraped to make these charts is here. I used configurations that provide a recall of ~0.96.

- MariaDB - ef_search=10, M=6

- Postgres - ef_search=10, M=16, ef_construction=32

This chart has absolute QPS for each of the systems tested.

This chart has relative QPS which is: (QPS for Postgres / QPS for MariaDB).

- The MariaDB advantage is larger at low and high concurrency.

- The MariaDB advantage isn't as large between 8 and 20 concurrent sessions

And this table also has relative QPS.

The MariaDB advantage is larger at low and high concurrency.The MariaDB advantage isn't as large between 8 and 20 concurrent sessions

| pgvector.halfvec | pgvector |

| 1 | 0.60 | 0.57 |

| 2 | 0.71 | 0.69 |

| 4 | 0.78 | 0.72 |

| 8 | 0.89 | 0.83 |

| 12 | 0.82 | 0.79 |

| 16 | 0.98 | 0.86 |

| 20 | 0.96 | 0.85 |

| 24 | 0.82 | 0.81 |

| 28 | 0.85 | 0.79 |

| 32 | 0.90 | 0.86 |

| 36 | 0.71 | 0.76 |

| 40 | 0.84 | 0.75 |

| 44 | 0.70 | 0.65 |

| 48 | 0.73 | 0.64 |

This table has (QPS / concurrency). For all systems tested the QPS per session decreases as the concurrency increases. I suspect the benchmark client is part of the problem but I am just speculating

- multiprocessing.pool is used by both MariaDB and pgvector, which is good, less GIL. See here for MariaDB and for pgvector.

- the benchmark client includes all of the time to process queries, including

- creating & start multiprocessing.pool - perhaps the pool can be cached & reused across runs

- creating a database connection

- gathering results from the concurrent sessions - some of this is done in the main thread

- AFAIK, the total number of queries per run is fixed, so the number of queries per session is less when there are more concurrent sessions and setup overhead (create database connection, create multiprocessing.pool, process results) becomes more significant as the concurrency level increases.

| MariaDB | pgvector.halfvec | pgvector |

| 1 | 4639.6 | 2803.5 | 2661.3 |

| 2 | 3376.4 | 2405.6 | 2327.3 |

| 4 | 2869.2 | 2226.8 | 2076.0 |

| 8 | 2149.6 | 1922.2 | 1784.0 |

| 12 | 1858.1 | 1522.2 | 1471.8 |

| 16 | 1527.5 | 1498.3 | 1310.5 |

| 20 | 1353.1 | 1303.1 | 1156.3 |

| 24 | 1261.8 | 1039.8 | 1023.5 |

| 28 | 1062.7 | 906.6 | 839.5 |

| 32 | 909.0 | 814.8 | 784.9 |

| 36 | 918.5 | 649.0 | 702.5 |

| 40 | 783.2 | 659.8 | 589.9 |

| 44 | 745.8 | 518.9 | 482.5 |

| 48 | 680.1 | 495.9 | 435.3 |

Performance debugging

The benchmark client does a lot of work (like checking results for recall) which means there is a brief burst of CPU overhead when queries run followed by longer periods where the benchmark client is processing the results. So I modified the benchmark client to only run queries in a loop and avoid other overheads like checking the results for recall. This makes it easier to collect performance data like CPU profiles (perf), PMP stacks and vmstat samples.

Performance debugging: MariaDB

From a test with 2 concurrent sessions the perf profile shows that much CPU is used to compute the dot product which is used to determine the distance between vectors:

# Overhead Command Shared Object Symbol

16.89% one_connection mariadbd.orig [.] FVector::dot_product

4.71% one_connection mariadbd.orig [.] escape_string_for_mysql

3.42% one_connection mariadbd.orig [.] search_layer

2.99% one_connection mariadbd.orig [.] buf_page_get_gen

2.31% one_connection mariadbd.orig [.] my_charlen_utf8mb4

2.16% one_connection mariadbd.orig [.] MYSQLparse

2.03% one_connection libc.so.6 [.] __memmove_avx512_unaligned_erms

1.74% one_connection mariadbd.orig [.] PatternedSimdBloomFilter<FVectorNode>::Query

1.58% one_connection libm.so.6 [.] __roundf

1.49% one_connection mariadbd.orig [.] mtr_memo_slot_t::release

1.40% one_connection mariadbd.orig [.] mhnsw_read_first

1.32% one_connection mariadbd.orig [.] page_cur_search_with_match

1.09% one_connection libc.so.6 [.] __memcmp_evex_movbe

1.06% one_connection mariadbd.orig [.] FVectorNode::distance_to

1.03% one_connection mariadbd.orig [.] row_search_mvcc

0.98% one_connection mariadbd.orig [.] rec_get_offsets_func

0.93% one_connection mariadbd.orig [.] cmp_data

0.93% one_connection mariadbd.orig [.] alloc_root

0.75% one_connection mariadbd.orig [.] Visited::cmp

And then the result with 48 concurrent sessions

- the percentage of time in dot_product was ~17% above, but only ~11.5% here

- more time is spent in InnoDB functions like buf_page_get_gen, mtr_memo_slot_t::release, page_cur_search_with_match, btr_cur_t_::search_leaf, ssux_lock::psd_read_lock, rec_get_offsets_func and buf_page_make_young_if_needed. Some of that might be expected but that can also be a sign of too much mutex contention in InnoDB.

- I don't see signs of mutex contention in PMP output

# Overhead Command Shared Object Symbol

11.49% one_connection mariadbd.orig [.] FVector::dot_product

7.17% one_connection mariadbd.orig [.] buf_page_get_gen

4.44% one_connection mariadbd.orig [.] search_layer

4.00% one_connection mariadbd.orig [.] escape_string_for_mysql

2.49% one_connection mariadbd.orig [.] mtr_memo_slot_t::release

2.23% one_connection mariadbd.orig [.] page_cur_search_with_match

1.86% one_connection mariadbd.orig [.] MYSQLparse

1.85% one_connection mariadbd.orig [.] my_charlen_utf8mb4

1.75% one_connection libc.so.6 [.] __memmove_avx512_unaligned_erms

1.60% one_connection mariadbd.orig [.] btr_cur_t::search_leaf

1.38% one_connection mariadbd.orig [.] ssux_lock::psi_rd_lock

1.37% one_connection mariadbd.orig [.] mhnsw_read_first

1.32% one_connection mariadbd.orig [.] FVectorNode::distance_to

1.23% one_connection mariadbd.orig [.] rec_get_offsets_func

1.19% one_connection mariadbd.orig [.] PatternedSimdBloomFilter<FVectorNode>::Query

1.02% one_connection mariadbd.orig [.] FVectorNode::load

0.97% one_connection libm.so.6 [.] __roundf

0.88% one_connection mariadbd.orig [.] buf_page_make_young_if_needed

0.83% one_connection mariadbd.orig [.] cmp_dtuple_rec_with_match_low

From vmstat with 2 concurrent sessions

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

2 0 96784 89703408 1982284 34588692 0 0 0 70 1401 56743 3 1 95 0 0

2 0 96784 89703408 1982284 34588696 0 0 0 9 1356 56588 4 1 96 0 0

2 0 96784 89703408 1982284 34588696 0 0 0 0 1434 56755 3 1 95 0 0

2 0 96784 89703408 1982284 34588696 0 0 0 0 1340 56629 4 1 96 0 0

2 0 96784 89702672 1982288 34588700 0 0 0 86 1810 56874 4 1 95 0 0

From vmstat with 48 concurrent sessions

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

58 0 96784 89428544 1988284 34733232 0 0 0 290 27883 637320 80 20 0 0 0

56 0 96784 89430560 1988284 34733236 0 0 0 0 28393 639018 81 19 0 0 0

52 0 96784 89430560 1988284 34733236 0 0 0 0 28240 638914 80 20 0 0 0

59 0 96784 89430560 1988284 34733236 0 0 0 0 33141 642055 80 20 1 0 0

56 0 96784 89430560 1988284 34733236 0 0 0 0 29520 639747 81 19 0 0 0

Comparing the vmstat results for 2 vs 48 sessions

- CPU/query is (vmstat.us + vmstat.sy) / QPS

- For 2 sessions it is ((4+5+4+5+5) / 5) / 6752.7 = .000681

- For 48 sessions it is ((100+100+100+100+100)/5) / 32645.5 = .003063

- CPU/query is ~4.5X larger at 48 sessions

- Context switches /query is vmstat.cs / QPS

- For 2 sessions it is 56743 / 6752.7 = 8.40

- For 48 sessions it is 637320 / 32645.5 = 19.52

- Context switches /query is ~2.3X larger at 48 sessions

Performance debugging: pgvector with halfvec

From a test with 2 concurrent sessions the perf profile shows

- computing L2 distance accounts for the most time, here it is 25.98% while above for MariaDB at 2 concurrent sessions it was 16.89%. Perhaps MariaDB is faster at computing L2 distance, perhaps MariaDB has more overhead elsewhere to reduce the fraction of time in computing L2 distance. But I suspect that the former is true.

- Postgres here appears to have more CPU overhead than MariaDB in accessing the data (PinBuffer, LWLock, etc)

# Overhead Command Shared Object Symbol

25.98% postgres vector.so [.] HalfvecL2SquaredDistanceF16c

9.68% postgres postgres [.] PinBuffer

6.46% postgres postgres [.] hash_search_with_hash_value

5.39% postgres postgres [.] LWLockRelease

4.25% postgres postgres [.] pg_detoast_datum

3.95% postgres vector.so [.] vector_to_halfvec

3.06% postgres postgres [.] LWLockAttemptLock

2.97% postgres postgres [.] LWLockAcquire

2.89% postgres vector.so [.] HnswLoadUnvisitedFromDisk

2.44% postgres postgres [.] StartReadBuffer

1.82% postgres postgres [.] GetPrivateRefCountEntry

1.70% postgres vector.so [.] tidhash_insert_hash_internal

1.65% postgres postgres [.] LockBuffer

1.59% postgres vector.so [.] HnswLoadElementImpl

0.88% postgres libc.so.6 [.] __memcmp_evex_movbe

0.80% postgres postgres [.] ItemPointerEquals

0.79% postgres postgres [.] ResourceOwnerForget

0.71% postgres vector.so [.] HnswSearchLayer

0.64% postgres postgres [.] pq_getmsgfloat4

And then from a test with 48 concurrent sessions

- The fraction of time computing L2 distance here is less than it is for 2 sessions above. This is similar to the results for MariaDB.

- From PMP I don't see signs of mutex contention, but I only took 3 samples

# Overhead Command Shared Object Symbol

19.86% postgres vector.so [.] HalfvecL2SquaredDistanceF16c

10.89% postgres postgres [.] PinBuffer

6.78% postgres postgres [.] hash_search_with_hash_value

5.44% postgres postgres [.] LWLockRelease

5.25% postgres postgres [.] LWLockAttemptLock

4.97% postgres postgres [.] pg_detoast_datum

3.30% postgres vector.so [.] vector_to_halfvec

2.62% postgres vector.so [.] HnswLoadUnvisitedFromDisk

2.61% postgres postgres [.] StartReadBuffer

2.03% postgres postgres [.] GetPrivateRefCountEntry

1.70% postgres postgres [.] LockBuffer

1.69% postgres vector.so [.] HnswLoadElementImpl

1.49% postgres postgres [.] LWLockAcquire

1.28% postgres vector.so [.] tidhash_insert_hash_internal

0.79% postgres postgres [.] ReadBufferExtended

0.78% postgres postgres [.] AllocSetAlloc

0.76% postgres libc.so.6 [.] __memcmp_evex_movbe

0.65% postgres postgres [.] ResourceOwnerForget

0.63% postgres vector.so [.] HnswSearchLayer

From vmstat with 2 concurrent sessions

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

2 0 96784 90724048 1997176 36391152 0 0 0 80 4188 24830 4 0 96 0 0

2 0 96784 90724304 1997176 36391152 0 0 0 9 4079 24931 4 0 96 0 0

2 0 96784 90724304 1997176 36391152 0 0 0 210 4117 24857 4 0 96 0 0

2 0 96784 90724048 1997176 36391152 0 0 0 42 4160 24946 4 0 96 0 0

2 0 96784 90723576 1997176 36391152 0 0 0 51 4511 24971 4 1 96 0 0

From vmstat with 48 concurrent sessions

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

88 0 96784 90323440 2001256 36414792 0 0 0 0 13129 224133 92 8 0 0 0

86 0 96784 90323440 2001256 36414796 0 0 0 197 13011 223926 92 8 0 0 0

85 0 96784 90322936 2001256 36414796 0 0 0 48 13118 224239 92 8 0 0 0

83 0 96784 90322688 2001264 36414800 0 0 0 56 13084 223987 92 8 0 0 0

86 0 96784 90324952 2001264 36414800 0 0 0 17 13361 224189 92 8 0 0 0

Comparing the vmstat results for 2 vs 48 sessions

- QPS is ~1.4X larger for MariaDB at 2 and 48 sessions

- CPU/query is (vmstat.us + vmstat.sy) / QPS

- For 2 sessions it is ((4+4+4+4+5)/5) / 4811.2 = .000872

- For 48 sessions it is ((100+100+100+100+100)/5) / 23802.6 = .004201

- CPU/query is ~4.8X larger at 48 sessions than at 2

- CPU/query for Postgres is ~1.3X larger than MariaDB at 2 sessions and ~1.4X larger at 48

- Context switches is vmstat.cs / QPS

- For 2 sessions it is 24830 / 4811.2 = 5.16

- For 48 sessions it is 224133 / 23802.6 = 9.41

- Context switches /query is ~1.8X larger at 48 sessions

- Context switches /query for MariaDB is ~1.6X larger than Postgres at 2 sessions and ~2.1X larger at 48