This has results from the sysbench benchmark for MariaDB and MySQL on a (less) small server with a cached and low-concurrency workload. For MariaDB I tested LTS releases from 10.2 through 11.4. For MySQL I tested 5.6, 5.7 and 8.0. The results from MySQL here are a good reason to use changepoint detection to spot regressions early, like that provided by Nyrkiö.

This work was done by Small Datum LLC and sponsored by the MariaDB Foundation. A previous post shared results from my smallest and oldest server. This has results from two newer and faster, but still small, servers. Regardless, the results don't change.

My standard disclaimer is that sysbench with low-concurrency is great for spotting CPU regressions. However, a result with higher concurrency from a larger server is also needed to understand things. Results from IO-bound workloads and less synthetic workloads are also needed. But low-concurrency, cached sysbench is a great place to start.

tl;dr

- MariaDB is great at avoiding CPU regressions over time

- MySQL is less than great at avoiding CPU regressions over time

- Modern MariaDB is 13% to 36% faster than modern MySQL (on this setup)

- Enabling the InnoDB change buffer does not improve results here. It might help for IO-bound sysbench.

- Modern MariaDB does reads from storage for the redo log (read-modify-write) while MySQL does not. I know the MariaDB redo log architecture has changed from MySQL -- MariaDB uses just one large redo log file. But I can't fully explain this.

Builds and configuration

For MariaDB I used the latest point releases from LTS versions: 10.2.44, 10.3.39, 10.4.33, 10.5.24, 10.6.17, 10.11.7 and the upcoming LTS 11.4.1.

For MySQL I used 5.6.51, 5.7.44 and 8.0.36.

The first GA release for MariaDB 10.2 was in 2017. The first GA release for MySQL 5.6 was in 2013. So while I cannot claim that my testing covers MySQL and MariaDB from the same time period, I can claim that I am testing old versions of both.

Everything was compiled from source with similar CMake command lines and CMAKE_BUILD_TYPE set to Release. It is much easier to compile older MariaDB releases than older MySQL releases. For MariaDB I did not have to edit any source files. Notes on compiling MySQL are here

for 5.6,

for 5.6 and 5.7,

for 5.7 and

for 8.0. A note on using cmake is

here.

The my.cnf files are here for

the ser4 and the

ser7 servers. Note that the ser7 server has a faster CPU and twice as much RAM.

Benchmarks

I used sysbench and my usage is

explained here. There are 42 microbenchmarks and each tests ~1 type of SQL statement.

Tests were run on two variants of the small servers I have at home. Each has 8 cores and NVMe SSD with XFS and Ubuntu 22.04. The servers are

described here.

- ser4 - this is the v4 server here with 16G of RAM and has a Ryzen 7 4700U CPU. It is a Beelink SER 4700U so I call it the ser4 (or SER4).

- ser7 - this is the v5 server here with 32G of RAM and has a Ryzen 7 7840HS CPU. It is a Beelink SER7 so I call it the ser7 (or SER7).

The benchmark is run with:

- one connection

- 30M rows and a database cached by InnoDB

- each microbenchmark runs for 300 seconds if read-only and 600 seconds otherwise

- prepared statements were enabled

The command line was: bash r.sh 1 30000000 300 600 nvme0n1 1 1 1

Results

For the results below I split the 42 microbenchmarks into 5 groups -- 2 for point queries, 2 for range queries, 1 for writes. For the range query microbenchmarks, part 1 has queries that don't do aggregation while part 2 has queries that do aggregation. The spreadsheet with all data and charts are here

for ser4 and

for ser7.

All of the charts have relative throughput on the y-axis where that is (QPS for $me) / (QPS for $base), $me is some DBMS version (for example MariaDB 11.4.1) and $base is the DBMS version for the base case. The base version is specified below depending on what I am comparing. The y-axis doesn't start at 0 to improve readability. When the relative throughput is > 1 then the throughput on some DBMS version is greater than the throughput for the base case.

The legend under the x-axis truncates the names I use for the microbenchmarks and I don't know how to fix that other than sharing links (see above) to the Google Sheets I used.

Results: MariaDB from old to new

This section uses MariaDB 10.2.44 as the base version and then compares that with MariaDB versions 10.3.39, 10.4.33, 10.5.24, 10.6.17, 10.11.7 and 11.4.1. The goal is to determine how throughput (QPS) changes from older releases like 10.2 to the latest release (11.4).

These tables have summary statistics from ser4 and ser7 of the relative throughput for MariaDB 11.4.1 vs 10.2.44 for each of the microbenchmark groups. A value greater than one means the throughput for MariaDB 11.4.1 is better than for 10.2.44. From the results here, new MariaDB (11.4.1) gets at least 91% with ser4 and 93% with ser7 of the throughput relative to old MariaDB (10.2.44) using the median relative throughput per microbenchmark group. New features added to MariaDB don't get in the way of performance because there aren't significant regressions over time.

| from ser4 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 0.96 | 0.95 | 0.99 | 0.96 | 0.91 |

| median | 0.96 | 0.97 | 0.99 | 0.94 | 0.91 |

| min | 0.90 | 0.91 | 0.93 | 0.88 | 0.77 |

| max | 0.98 | 0.98 | 1.11 | 1.07 | 1.03 |

| stddev | 0.02 | 0.03 | 0.05 | 0.09 | 0.07 |

| from ser7 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 0.94 | 0.94 | 0.95 | 0.98 | 0.92 |

| median | 0.96 | 0.96 | 0.93 | 0.96 | 0.95 |

| min | 0.87 | 0.88 | 0.90 | 0.93 | 0.76 |

| max | 0.97 | 0.99 | 1.10 | 1.04 | 1.02 |

| stddev | 0.03 | 0.05 | 0.06 | 0.04 | 0.08 |

There are two graphs per microbenchmark group - first for ser4 and second for ser7. The y-axis doesn't begin at zero to improve readability. The y-axis starts at 0.75 for ser4 and 0.60 for ser7.

Graphs for point queries, part 2.

Graphs for range queries, part 1.

Graphs for range queries, part 2.

Graphs for writes.

Results: MySQL from old to new

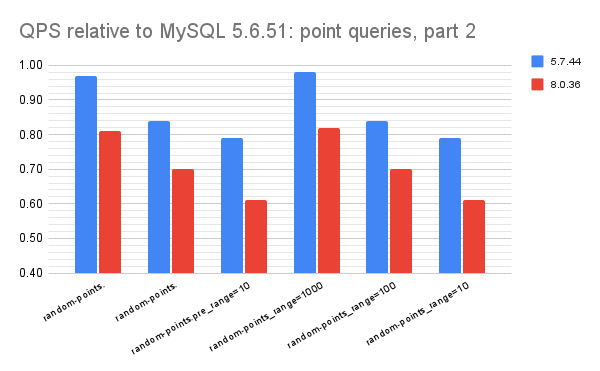

This section uses MySQL 5.6.51 as the base version and then compares that with MySQL versions 5.7.44 and 8.0.36. The goal is to determine how throughput (QPS) changes from older to newer releases.

These tables have summary statistics from ser4 and ser7 of the relative throughput for MySQL 8.0.36 vs 5.6.51 for each of the microbenchmark groups. A value greater than one means the throughput for MySQL 8.0.36 is better than for 5.6.51. From the results here, new MySQL (8.0.36) gets between 56% and 89% of the throughput relative to old MySQL (5.6.51) using the median relative throughput per microbenchmark group. New features in modern MySQL come at the cost of much CPU overhead.

| from ser4 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 0.72 | 0.71 | 0.66 | 0.81 | 0.61 |

| median | 0.70 | 0.70 | 0.65 | 0.76 | 0.56 |

| min | 0.67 | 0.61 | 0.63 | 0.66 | 0.44 |

| max | 0.78 | 0.82 | 0.72 | 1.00 | 0.99 |

| stddev | 0.04 | 0.09 | 0.03 | 0.15 | 0.16 |

| from ser7 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 0.77 | 0.77 | 0.72 | 0.92 | 0.71 |

| median | 0.80 | 0.75 | 0.73 | 0.89 | 0.67 |

| min | 0.65 | 0.69 | 0.62 | 0.80 | 0.51 |

| max | 0.84 | 0.86 | 0.77 | 1.07 | 1.10 |

| stddev | 0.05 | 0.07 | 0.05 | 0.12 | 0.18 |

There are two graphs per microbenchmark group - first for ser4 and second for ser7. The y-axis doesn't begin at zero to improve readability. The y-axis starts at 0.40 for ser4 and 0.50 for ser7.

Graphs for point queries, part 2.

Graphs for range queries, part 1.

Graphs for range queries, part 2.

Graphs for writes.

Results: MariaDB vs MySQL

This section uses MySQL 8.0.36 as the base version and then compares that with MariaDB 11.4.1. The goal is to determine which DBMS gets more throughput (or uses less CPU/query).

These tables have summary statistics from ser4 and ser7 of the relative throughput for MariaDB 11.4.1 vs MySQL 8.0.36 for each of the microbenchmark groups. A value greater than one means the throughput for MariaDB 11.4.1 is better than for MySQL 8.0.36. From the results here, modern MariaDB (11.4.1) gets between 113% and 136% of the throughput relative to modern MySQL (8.0.36) using the median relative throughput per microbenchmark group. Modern MariaDB is faster than modern MySQL (on this setup) because MySQL has more performance regressions over time.

| from ser4 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 1.17 | 1.21 | 1.21 | 1.19 | 1.31 |

| median | 1.15 | 1.22 | 1.21 | 1.17 | 1.36 |

| min | 1.12 | 1.19 | 1.15 | 1.16 | 1.11 |

| max | 1.30 | 1.24 | 1.37 | 1.25 | 1.48 |

| stddev | 0.05 | 0.02 | 0.07 | 0.04 | 0.12 |

| from ser7 | point, part 1 | point, part 2 | range, part 1 | range, part 2 | writes |

| average | 1.16 | 1.20 | 1.18 | 1.17 | 1.23 |

| median | 1.13 | 1.20 | 1.15 | 1.15 | 1.28 |

| min | 1.10 | 1.16 | 1.07 | 1.10 | 0.96 |

| max | 1.32 | 1.24 | 1.40 | 1.26 | 1.41 |

| stddev | 0.06 | 0.03 | 0.10 | 0.07 | 0.14 |

There are two graphs per microbenchmark group - first for ser4 and second for ser7. The y-axis doesn't begin at zero to improve readability. The y-axis starts at 1.00 for both ser4 and ser7.

Graphs for point queries, part 2.

Graphs for range queries, part 1.

Graphs for range queries, part 2.

Graphs for writes. The bar for update-index_range=1000 isn't visible on the second graph because the relative throughput is 0.96 but the y-axis starts at 1.00.

Results: change buffer

The InnoDB change buffer was removed from MariaDB 11.4. That feature has been good to me during the Insert Benchmark so I repeated tests in MariaDB 10.11.7 with it enabled and disabled. For the sysbench microbenchmarks and this workload (low concurrency, cached database) there is no benefit from it. It might have a benefit for some sysbench microbenchmarks when the database isn't cached.

This post is already too long so I won't provide charts but the raw data is here

for ser4 and

for ser7.

Results: disk read mystery

The database is cached by InnoDB for these tests but the sum of the sizes of the InnoDB buffer pool and InnoDB redo logs are larger than memory. And that is a normal (best practice) configuration. Unfortunately, starting in MariaDB 10.11 there is read IO during some of the microbenchmark steps. All of the configs use either O_DIRECT or O_DIRECT_NO_FSYNC for InnoDB flush method and I confirmed that database file reads aren't being done based on the output of SHOW ENGINE INNODB STATUS.

What might be the source of the reads? My first guess is read-modify-write (RMW) from the InnoDB redo log. The problem is that the redo log uses buffered IO (not O_DIRECT), redo log writes are done as a multiple of 512 bytes and writing to the first 512 bytes of a 4kb filesystem page will do a storage read if that 4kb page isn't in the OS page cache. Note that

innodb_log_write_ahead_size in MySQL 8 reduces the chance of this happening. It was my first guess because we suffered from it long ago with web-scale MySQL.

This happens on the ser4 server but not the ser7 server. The ser7 server has twice as much RAM but uses the same size tables, so there is more spare RAM and perhaps that makes it easier for Linux to cache the InnoDB redo log.

I ran additional tests where the InnoDB redo log and InnoDB data files use different storage devices I see that all of the read IO occurs on the storage device for the redo log so my guess was correct but why this occurs for modern MariaDB but not for MySQL remains a mystery.

I also looked at strace output from MariaDB and MySQL. I didn't see anything different. Although the redo log architecture has changed in MariaDB. It now uses just one redo log file while MySQL uses as many as you configure and the my.cnf files I used are here for

the ser4 and the

ser7 servers.

The unexpected storage reads might not hurt performance here because I use innodb_flush_log_at_trx_commit =2 (no sync on commit) and user transactions might not have to wait for that read on commit.

I have a script that provides iostat and vmstat metrics normalized by throughput (operations/s) to show how much hardware is used per request. And the problem is visible here for several microbenchmark steps including update-inlist.range100.pk1 and update-index.range100,pk1. The columns in the output are:

- cpu/o - CPU per operation (from vmstat us + sy)

- cs/o - context switches per operation (from vmstat cs)

- r/o - storage reads per operation (from iostat r/s)

- rKB/o - storage KB read per operation (from iostat rKB/s)

- wKB/o - storage KB written per operation (from iostat wKB/s)

- o/s - throughput, QPS, operations/s

In the tables below the value for r/o is 0 prior to MariaDB 10.11.7 (ma101107_rel) and then is 0.077 for MariaDB 10.11.7 and 0.034 for MariaDB 11.4.1. While I don't show it here, this problem also occurs for MySQL 5.6.51 but not for MySQL 5.7.44 or 8.0.36.

The data from the tables below is here

for ser4 and

for ser7.

sb.met.update-inlist.range100.pk1.dop1

--- absolute

cpu/o cs/o r/o rKB/o wKB/o o/s dbms

0.006937 36.076 0 0 90.665 3966 x.ma100244_rel.z11a_bee.pk1

0.007467 35.345 0 0 89.534 3845 x.ma100339_rel.z11a_bee.pk1

0.007336 35.395 0 0 91.588 3722 x.ma100433_rel.z11a_bee.pk1

0.005755 37.579 0 0 7.408 4126 x.ma100524_rel.z11a_bee.pk1

0.004227 8.442 0 0 33.077 3890 x.ma100617_rel.z11a_bee.pk1

0.004253 8.595 0.077 0.306 33.099 3856 x.ma101107_rel.z11a_bee.pk1

0.004430 8.462 0.034 0.136 32.22 3650 x.ma110401_rel.z11b_bee.pk1

--- relative to first result

1.08 0.98 1 1 0.99 0.97 x.ma100339_rel.z11a_bee.pk1

1.06 0.98 1 1 1.01 0.94 x.ma100433_rel.z11a_bee.pk1

0.83 1.04 1 1 0.08 1.04 x.ma100524_rel.z11a_bee.pk1

0.61 0.23 1 1 0.36 0.98 x.ma100617_rel.z11a_bee.pk1

0.61 0.24 inf inf 0.37 0.97 x.ma101107_rel.z11a_bee.pk1

0.64 0.23 inf inf 0.36 0.92 x.ma110401_rel.z11b_bee.pk1

sb.met.update-index.range100.pk1.dop1

--- absolute

cpu/o cs/o r/o rKB/o wKB/o o/s dbms

0.015003 34.740 0 0 110.865 2268 x.ma100244_rel.z11a_bee.pk1

0.016460 39.791 0 0 123.805 1715 x.ma100339_rel.z11a_bee.pk1

0.016282 39.626 0 0 124.773 1734 x.ma100433_rel.z11a_bee.pk1

0.010618 55.358 0 0 111.238 2277 x.ma100524_rel.z11a_bee.pk1

0.007834 12.873 0 0 53.445 1997 x.ma100617_rel.z11a_bee.pk1

0.006468 14.170 0.857 3.426 50.978 2453 x.ma101107_rel.z11a_bee.pk1

0.006733 14.250 0.859 3.434 51.687 2337 x.ma110401_rel.z11b_bee.pk1

--- relative to first result

1.10 1.15 1 1 1.12 0.76 x.ma100339_rel.z11a_bee.pk1

1.09 1.14 1 1 1.13 0.76 x.ma100433_rel.z11a_bee.pk1

0.71 1.59 1 1 1.00 1.00 x.ma100524_rel.z11a_bee.pk1

0.52 0.37 1 1 0.48 0.88 x.ma100617_rel.z11a_bee.pk1

0.43 0.41 inf inf 0.46 1.08 x.ma101107_rel.z11a_bee.pk1

0.45 0.41 inf inf 0.47 1.03 x.ma110401_rel.z11b_bee.pk1

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

No comments:

Post a Comment