This post has results from the db_bench benchmarks for RocksDB on both a small and a medium server using a database that is cached by RocksDB. Previous posts are here and here with results for RocksDB 6.0 to 9.3. This post has results up to version 9.6.

tl;dr

- Bug 12038 arrived in RocksDB 8.6 and has yet to be fixed, but a fix arrives soon. This affects results on servers when the workload is IO-bound, buffered IO is used (no O_DIRECT) and max_sectors_kb for storage is smaller than 1MB.

- The worst-case regression from 6.0 to 9.6 is ~20% but in most cases it is <= 10% and in a few cases I get more throughput in 9.6.

- Were I to begin using the hyperclock cache then results from modern RocksDB would be much better.

Hardware

I tested on two servers:

- Small server

- SER7 - Beelink SER7 7840HS (see here) with 8 cores, AMD SMT disabled, a Ryzen 7 7840HS CPU, Ubuntu 22.04, XFS and 1 NVMe device. The storage device has 128 for max_hw_sectors_kb and max_sectors_kb.

- Medium server

- C2D - a c2d-highcpu-32 instance type on GCP (c2d high-CPU) with 32 vCPU and 16 cores, AMD SMT disabled, XFS, SW RAID 0 and 4 NVMe devices. The RAID device has max_hw_sectors_kb=512 and max_sectors_kb=512 while the storage devices have max_hw_sectors_kb=2048 and max_sectors_kb=1280.
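
The max_sectors_kb and max_hw_sectors_kb values above are block layer queue settings that Linux exposes under /sys/block/<device>/queue/. Here is a minimal Python sketch for reading them. The function names are mine (hypothetical), not part of any benchmark script, and the 1MB threshold is the condition from the tl;dr for bug 12038.

```python
from pathlib import Path


def read_queue_limit(device: str, name: str, sysfs_root: str = "/sys/block") -> int:
    """Return a queue limit in KB, for example max_sectors_kb, for one block device.

    sysfs_root is a parameter only so the function can be pointed at a
    test directory; on a real server the default is what you want.
    """
    path = Path(sysfs_root) / device / "queue" / name
    return int(path.read_text().strip())


def smaller_than_1mb(max_sectors_kb: int) -> bool:
    """True when max_sectors_kb is below 1MB (1024 KB), one of the conditions listed for bug 12038."""
    return max_sectors_kb < 1024
```

For example, read_queue_limit("nvme0n1", "max_sectors_kb") reads the value for a device named nvme0n1 (the device name is an assumption, use whatever lsblk shows). With the values listed above, smaller_than_1mb is true for the SER7 device (128) and for the C2D RAID device (512).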

Builds

I compiled db_bench from source on all servers. I used versions 6.0.2, 6.10.4, 6.20.4, 6.29.5, 7.0.4, 7.3.2, 7.6.0, 7.10.2, 8.0.0, 8.1.1, 8.2.1, 8.3.3, 8.4.4, 8.5.4, 8.6.7, 8.7.3, 8.8.1, 8.9.2, 8.10.2, 8.11.4, 9.0.1, 9.1.2, 9.2.2, 9.3.2, 9.4.1, 9.5.2 and 9.6.1.

Benchmark

All tests used the LRU block cache and the default value for compaction_readahead_size. Soon I will switch to the hyper clock cache.

I used my fork of the RocksDB benchmark scripts that are wrappers to run db_bench. These run db_bench tests in a special sequence -- load in key order, read-only, do some overwrites, read-write and then write-only. The benchmark was run using 1 thread for the small server and 8 threads for the medium server. How I do benchmarks for RocksDB is explained here and here. The command lines to run them are:

# Small server, SER7: use 1 thread, 20M KV pairs for cached, 400M for IO-bound

bash x3.sh 1 no 3600 c8r32 20000000 400000000 byrx iobuf iodir

# Medium server, C2D: use 8 threads, 40M KV pairs for cached, 2B for IO-bound

bash x3.sh 8 no 3600 c16r64 40000000 2000000000 byrx iobuf iodir

Workloads

There are three workloads and this blog post has results for byrx:

- byrx - the database is cached by RocksDB

- iobuf - the database is larger than memory and RocksDB uses buffered IO

- iodir - the database is larger than memory and RocksDB uses O_DIRECT

A spreadsheet with all results is here and performance summaries with more details are linked below:

- leveled compaction - for the small server and the medium server

- tiered compaction - for the small server and the medium server

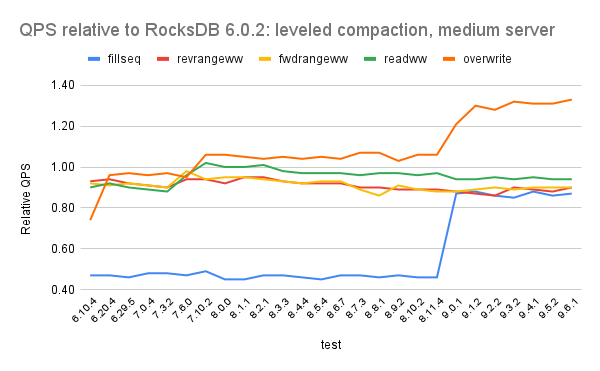

Relative QPS

The numbers in the spreadsheet and on the y-axis in the charts that follow are the relative QPS which is (QPS for $me) / (QPS for $base). When the value is greater than 1.0 then $me is faster than $base. When it is less than 1.0 then $base is faster (perf regression!).
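
The relative QPS definition above is simple arithmetic, sketched here in Python. The function names and the 0.9 example value are mine (hypothetical); real values are in the spreadsheet.

```python
def relative_qps(qps_me: float, qps_base: float) -> float:
    """Relative QPS as defined above: (QPS for $me) / (QPS for $base)."""
    if qps_base <= 0:
        raise ValueError("QPS for the base version must be positive")
    return qps_me / qps_base


def describe(rel: float) -> str:
    """Say which side is faster for a given relative QPS value."""
    if rel > 1.0:
        return "$me is faster than $base"
    if rel < 1.0:
        return "$base is faster (perf regression)"
    return "no difference"
```

With this definition, a relative QPS of 0.9 means $me gets 10% less throughput than $base.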

Results

The base case is RocksDB 6.0.2.

To improve readability:

- The y-axis starts at 0.4

- The charts don't show results for all versions tested. Results for all versions are in the performance summary URLs above in the Workloads section.

The tests on the charts are:

- fillseq -- load in key order with the WAL disabled

- revrangeww -- reverse range while writing, do short reverse range scans as fast as possible while another thread does writes (Put) at a fixed rate

- fwdrangeww -- like revrangeww except do short forward range scans

- readww -- like revrangeww except do point queries

Leveled compaction, small server

- All tests except overwrite are ~10% slower in 9.6 vs 6.0

- Overwrite was slower after 6.0 then slowly got faster and 9.6 is as fast as 6.0

- Each test ran for 3600s prior to 9.0 and 1800s for 9.x versions. That was a mistake by me.

- Overwrite has the most interesting behavior (much variance) that I can't explain today. One good thing is that the write stall percentage (stall%) decreased significantly in 9.x while write-amp (w_amp) increased slightly (see here) but I don't know how much of that is from the shorter run time (1800s vs 3600s).

- In most of the tests the user CPU (u_cpu) and system CPU (s_cpu) time in RocksDB 9.x is about half the value prior to 9.x. Normally that is good news but the root cause here is that I only ran the 9.x tests for 1800s vs 3600s prior to 9.x.
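
One way to compare CPU time across runs of different length is to divide by the run time. This is a minimal Python sketch that assumes u_cpu and s_cpu are totals in seconds for the whole test, which is my assumption about the summary columns. The function name and the numbers in the usage note are hypothetical, not measured results.

```python
def cpu_per_wall_second(cpu_secs: float, run_secs: float) -> float:
    """Normalize total CPU seconds by the wall-clock length of the test."""
    if run_secs <= 0:
        raise ValueError("run time must be positive")
    return cpu_secs / run_secs
```

For example, 1800 CPU seconds over a 3600s run and 900 CPU seconds over an 1800s run both normalize to 0.5, so a halved total does not imply that the newer version uses less CPU.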

Leveled compaction, medium server

- All tests except overwrite are ~10% slower in 9.6 vs 6.0

- Overwrite was slower after 6.0 then slowly got faster and 9.6 is ~1.3X faster than 6.0

- Results for fillseq are interesting because 6.0 did great making the comparisons harder for versions that follow. I don't recall what changed. But things improved a lot in 9.x. From the performance summary (see here) the value for compaction wall clock seconds (c_wsecs) increases after 6.0 and then decreases during 9.x. Also the write stall percentage (stall%) was ~50% prior to 9.0 and then drops to 0 in 9.x. So something changed with respect to how IO is done, but write-amplification (w_amp) didn't change so the amount of data written is the same.

- Results for overwrite show a large improvement during 9.x. There are reductions in write-stalls (stall%) and worst-case response time (pmax) during 9.x (see here).

Tiered compaction, small server

- Relative to RocksDB 6.0, in RocksDB 9.6 ...

- fillseq is ~4% slower

- revrangeww, fwdrangeww and overwrite are ~10% slower

- readww is ~20% slower

- In most of the tests the user CPU (u_cpu) and system CPU (s_cpu) time in RocksDB 9.x is about half the value prior to 9.x (see here). Normally that is good news but the root cause here is that I only ran the 9.x tests for 1800s vs 3600s prior to 9.x.

Tiered compaction, medium server
