"Yes, we have no (or few) regressions" seems to be as true for RocksDB as it is for Postgres. This isn't a big surprise to me, but I am happy to document the result of so many other people working hard.

tl;dr

- There are no, or few, regressions in RocksDB from version 7.3.2 to 8.7.0. For most of the tests the QPS stays constant from 7.3.2 to 8.7.0.

- Throughput for fillseq (insert in key order) improved by ~2X

- Throughput for overwrite improved by ~1.2X

- There is too much variance for fwdrange. The workaround is to use the Hyper Clock cache.

For these tests I must use a special compiler toolchain and wasn't able to compile RocksDB releases older than 7.3.2. For that reason I didn't even consider compiling RocksDB 6.x, 5.x or 4.x versions.

All tests used a server with 40 cores, 80 HW threads, 2 sockets, 256GB of RAM and many TB of fast NVMe SSD with Linux 5.1.2, XFS and SW RAID 0 across 6 devices.

I used my fork of the RocksDB benchmark scripts, which are wrappers around db_bench. These run db_bench tests in a special sequence: load in key order, read-only, do some overwrites, read-write and then write-only. The benchmark was repeated using 12, 24 and 48 client threads. At 12 threads the CPU is undersubscribed and at 48 it can be oversubscribed depending on IO latency. How I do benchmarks for RocksDB is explained here and here.

The tests were run for three setups:

- cached - database fits in the RocksDB block cache

- iobuf - IO-bound, working set doesn't fit in memory, uses buffered IO

- iodir - IO-bound, working set doesn't fit in memory, uses O_DIRECT
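The full set of runs is the cross product of these setups and the thread counts, with the same test sequence in each run. A minimal sketch of that matrix follows. The names `CONFIGS`, `build_matrix` and the two boolean fields are hypothetical and only for illustration; they are not taken from the benchmark scripts.

```python
from itertools import product

# Hypothetical field names; only the setup labels, thread counts and
# step order come from the description above.
CONFIGS = {
    "cached": {"fits_in_block_cache": True, "direct_io": False},
    "iobuf": {"fits_in_block_cache": False, "direct_io": False},
    "iodir": {"fits_in_block_cache": False, "direct_io": True},
}
THREAD_COUNTS = [12, 24, 48]
STEPS = [
    "load in key order",
    "read-only",
    "overwrites",
    "read-write",
    "write-only",
]


def build_matrix():
    """Return one (setup, threads, steps) tuple per benchmark run."""
    return [
        (name, threads, list(STEPS))
        for name, threads in product(CONFIGS, THREAD_COUNTS)
    ]


if __name__ == "__main__":
    for name, threads, steps in build_matrix():
        print(f"{name:6s} threads={threads:2d} steps={' -> '.join(steps)}")
```

That gives 9 runs per RocksDB version, each going through the same five steps.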

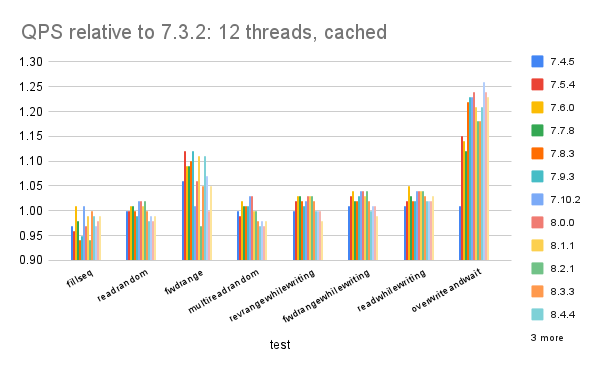

Results: 12 threads

To improve readability the y-axis starts at 0.9 and stops at 1.30. Some results are larger than 1.30 and will be truncated. A spreadsheet with all results is here. The charts show the QPS for a given release relative to the QPS for RocksDB 7.3.2 using: (QPS for $version) / (QPS for RocksDB 7.3.2). A value larger than 1 means that the version gets more QPS than RocksDB 7.3.2. A value equal to 1 means they get similar QPS.
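The relative QPS computation, and the truncation applied by the chart, can be sketched as below. The function names are hypothetical and the QPS numbers are made-up placeholders, not measurements from these tests.

```python
def relative_qps(qps_by_version, base="7.3.2"):
    """Divide each version's QPS by the QPS of the base version."""
    base_qps = qps_by_version[base]
    return {version: qps / base_qps for version, qps in qps_by_version.items()}


def clamp_for_chart(value, lo=0.9, hi=1.30):
    """Limit a relative QPS value to the y-axis range used by the charts."""
    return max(lo, min(hi, value))


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    sample = {"7.3.2": 100_000.0, "8.7.0": 200_000.0}
    for version, rel in relative_qps(sample).items():
        print(version, rel, clamp_for_chart(rel))
```

With these placeholders the relative QPS for 8.7.0 is 2.0, which the chart would draw at 1.30. That is why the spreadsheet is the better place to read the large fillseq improvements.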

Summary:

- There is a big improvement for IO-bound fillseq and overwrite

- There is too much variance for fwdrange

- Otherwise modern RocksDB is >= older RocksDB

Results: 24 threads

To improve readability the y-axis starts at 0.9 and stops at 1.30. Some results are larger than 1.30 and will be truncated. A spreadsheet with all results is here. The charts show the QPS for a given release relative to the QPS for RocksDB 7.3.2 using: (QPS for $version) / (QPS for RocksDB 7.3.2). A value larger than 1 means that the version gets more QPS than RocksDB 7.3.2. A value equal to 1 means they get similar QPS.

Summary:

- There is a big improvement for IO-bound fillseq and overwrite

- There is too much variance for fwdrange

- Otherwise modern RocksDB is >= older RocksDB

Results: 48 threads

- There is a big improvement for IO-bound fillseq and overwrite

- There is too much variance for fwdrange

- Otherwise modern RocksDB is >= older RocksDB
