This post is about variance in some of the RocksDB benchmark tests. I run tests on small, medium and large servers, and the problem only occurs on the large server and only on benchmarks that use range queries. I spend a lot of time looking for performance regressions, and variance makes them harder to spot.

While I have been aware of this issue for more than a year, I ignored it until recently. While I have yet to explain the root cause, the workaround is simple -- use Hyper Clock rather than LRU for the block cache. See my previous post that hypes the Hyper Clock cache.

tl;dr

- with the LRU block cache the QPS can vary by ~2X for read-only range query tests

- use Hyper Clock cache instead of LRU for the block cache to avoid variance

- the QPS from Hyper Clock for read-only range query tests can be 2X to 3X larger than for the LRU block cache

- the signal that this might be a problem is too much system CPU time

- pinning threads to one socket improves throughput when using the LRU cache but does not avoid the variance for range queries on read-only tests

Updates

Aggregated thread stacks from PMP are here for the fwdrange test with 24 threads and 48 threads. These are computed over ~10 call-stack samples. From the call stacks there isn't a significant difference between the runs where the result was bad (too much system CPU time, too much mutex contention) and where the result was good. But it is clear there is a lot of mutex contention.
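PMP (the poor man's profiler) samples thread stacks with gdb and counts identical stacks. A minimal sketch of the aggregation step follows, assuming the input is the text printed by gdb's `thread apply all bt`. The function name `aggregate_stacks` is hypothetical and this is not necessarily the script used for the results above.

```shell
# Collapse each thread's backtrace into one comma-separated line of function
# names, then count how many threads share each distinct stack.
aggregate_stacks() {
  awk '
    # A new "Thread N ..." header ends the previous stack.
    /^Thread/ { if (s != "") print s; s = "" }
    # Frame lines look like "#0  0x... in func (...)" or "#1  func (...) at file".
    /^#/      { fn = ($2 ~ /^0x/) ? $4 : $2; s = (s == "") ? fn : s "," fn }
    END       { if (s != "") print s }
  ' | sort | uniq -c | sort -rn
}

# Example (needs gdb and permission to attach to the process):
#   gdb -batch -ex "set pagination 0" -ex "thread apply all bt" -p "$pid" | aggregate_stacks
```

Stacks that end in mutex lock functions and dominate the counts are the hint that threads spend their time waiting on a mutex.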

A hypothesis

My theory at this point is that there is non-determinism in how the block cache is populated and the block cache ends up in one of two states - bad and good. The benchmarks are read-only and the block cache is large enough to cache the entire database. So once the block cache gets into one state (bad or good) it remains in that state. This is a risk of a read-only benchmark. If there were some writes, then the state of the LSM tree and in-memory structures would change over time and not get stuck.

Alas, this is just a theory today.

Hardware

I use three server types that I call small, medium and large:

- small - 8 cores, 16G RAM, a Beelink (see here)

- medium - 15 cores with hyperthreads disabled, c2-standard-30 from Google (GCP)

- large - 2 sockets, 80 HW threads, 40 cores with hyperthreads enabled

Benchmark

I used the RocksDB benchmark scripts and my usage is explained here and here. The benchmark client is named db_bench. The benchmark runs a sequence of tests in a specific order:

- initial load

- read-only tests for point and range queries

- write-only tests to fragment the LSM tree (results are ignored)

- read-write tests

- write-only tests

For this post I stopped the benchmark after step 2, the read-only tests, because that is where the variance occurs. A spreadsheet with all results is here. I focus on results for the fwdrange and readrandom tests. Both are read-only: fwdrange does range queries and readrandom does point queries.

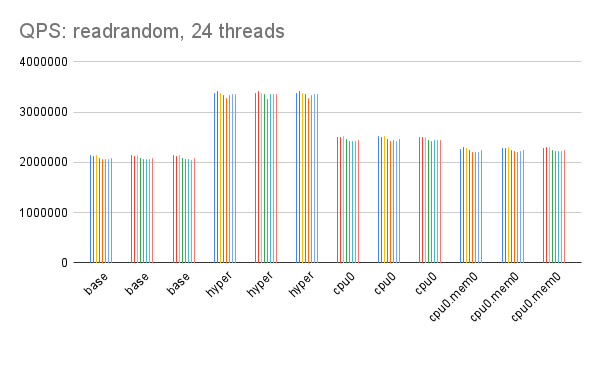

The benchmark was repeated for 12, 24 and 48 threads. For each number of threads it was repeated 3 times, so the graphs below have 3 clusters of bars for base, 3 for hyper, 3 for cpu0 and 3 for cpu0.mem0. Each run was done for RocksDB 8.0, 8.1, 8.2, 8.3, 8.4, 8.5 and 8.6, so each cluster of 7 bars has results for RocksDB 8.0 to 8.6.

The benchmark was run with the following script. It loads 100M KV pairs and the database is ~40G. The RocksDB block cache is large enough to cache the database.

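    dop=$1        # first argument: number of threads (12, 24 or 48)
    secs=600      # duration in seconds, passed to x3.sh
    comp=clang    # compiler tag, used only in the results directory name

    # Repeat the benchmark 3 times and keep each run's results directory.
    for i in $( seq 1 3 ); do
      bash x3.sh $dop no $secs c40r256bc180 100000000 4000000000 byrx
      mv res.byrx res.byrx.t${dop}.${secs}s.$comp.$i
    done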

Configurations

I tested four configurations. From the results below, while pinning threads to one socket improves performance, the only way to avoid the variance is to use the Hyper Clock cache.

- base - use the LRU block cache and numactl --interleave=all

- hyper - use the Hyper Clock block cache and numactl --interleave=all

- cpu0 - use the LRU block cache and numactl --cpunodebind=0 --interleave=all to pin all threads to one socket but allocate memory from both sockets

- cpu0.mem0 - use the LRU block cache and numactl --cpunodebind=0 --localalloc to pin all threads to one socket and allocate memory from that socket
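The four configurations can be sketched as a small shell helper that maps a configuration name to its numactl prefix and block cache type. The numactl options are the ones listed above. The function names `numa_prefix` and `cache_type` are hypothetical, and selecting the cache through db_bench's `--cache_type` option with the values `lru_cache` and `hyper_clock_cache` is my assumption about the wiring, not something taken from the benchmark scripts.

```shell
# Map a configuration name to the numactl prefix used to launch db_bench.
numa_prefix() {
  case "$1" in
    base|hyper) echo "numactl --interleave=all" ;;
    cpu0)       echo "numactl --cpunodebind=0 --interleave=all" ;;
    cpu0.mem0)  echo "numactl --cpunodebind=0 --localalloc" ;;
    *)          echo "unknown config: $1" >&2; return 1 ;;
  esac
}

# Map a configuration name to a block cache type (assumed --cache_type values).
cache_type() {
  case "$1" in
    hyper)               echo "hyper_clock_cache" ;;
    base|cpu0|cpu0.mem0) echo "lru_cache" ;;
    *)                   echo "unknown config: $1" >&2; return 1 ;;
  esac
}

# Print the command prefix for each configuration; the remaining db_bench
# options are omitted here.
for cfg in base hyper cpu0 cpu0.mem0; do
  echo "$cfg: $(numa_prefix "$cfg") ./db_bench --cache_type=$(cache_type "$cfg")"
done
```

Only hyper changes the cache implementation. The other three differ only in where threads run and where memory is allocated.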

Range queries: QPS

The graphs show the average QPS for fwdrange tests (read-only, range queries) using 12, 24 and 48 threads.

- At 12 threads the variance is best for hyper, not bad for cpu0 and cpu0.mem0 and worst for base

- At 24 and 48 threads the variance is best for hyper, bad for cpu0 and cpu0.mem0 and lousy for base

- For base the QPS increases from 12 to 24 threads, but not from 24 to 48. See the spreadsheet.

- For hyper the QPS increases from 12 to 24 to 48 threads

- The QPS from hyper is up to 3X larger than from base

Range queries: system vs user CPU time

The graphs here show the ratio of system CPU time to user CPU time for the fwdrange tests (read-only, range queries). Based on the graphs, my guess is that the problem is mutex contention because the ratio is large for the LRU block cache (base, cpu0, cpu0.mem0) and small for the Hyper Clock cache (hyper).

It is also interesting that the ratio for the LRU block cache is smaller when all threads are pinned to one socket (cpu0, cpu0.mem0) than when they are not pinned (base).

Range queries: vmstat

This adds some output from vmstat for fwdrange with 24 threads for the base configuration that uses the LRU block cache. The good result gets 456762 QPS from RocksDB 8.0 and the bad result gets 315960 QPS from RocksDB 8.5. Note that the same RocksDB version can get good and bad results.

- The CPU utilization (us + sy columns) is similar but the sy/us ratio is much larger in the bad result

- The average context switch rate is similar (cs column)

- Given that the QPS with the good result is ~1.45X larger than with the bad result, the amount of CPU/query and number of context switches / query are much larger in the bad result.

The good result

    procs ------------memory------------ -swap --io--- ---system---- -----cpu------
      r b swpd     free   buff     cache si so bi   bo     in     cs us sy id wa st
     25 0    0 58823196 406932 154263328  0  0  0   36 221215 848850 22  7 71  0  0
     24 0    0 58822520 406932 154263328  0  0  0  340 221711 847153 23  7 71  0  0
     23 0    0 58824152 406940 154263392  0  0  0  352 231440 854547 24  7 70  0  0
     23 0    0 58821568 406940 154263424  0  0  0   44 224095 847201 23  7 71  0  0
     24 0    0 58822080 406940 154263472  0  0  0    4 221711 845231 22  7 71  0  0
     25 0    0 58823344 406940 154263456  0  0  0  156 220700 842168 22  7 71  0  0
     24 0    0 58823148 406948 154263552  0  0  0  364 221957 841721 23  7 71  0  0
     21 0    0 58826844 406948 154263600  0  0  0  288 220392 842787 23  6 71  0  0
     24 0    0 58824240 406948 154263648  0  0  0  112 223243 845933 23  7 71  0  0
     25 0    0 58805068 406948 154263680  0  0  0    4 222477 842962 23  6 71  0  0

The bad result

    procs ------------memory------------ -swap --io--- ---system---- -----cpu------
      r b swpd     free   buff     cache si so bi   bo     in     cs us sy id wa st
     23 0    0 55562296 444928 156854032  0  0  0    4 174564 836501 18 12 70  0  0
     23 0    0 55503180 444928 156854224  0  0  0    4 175928 852824 18 13 70  0  0
     23 0    0 55595540 444928 156854176  0  0  0   60 175285 833147 18 13 70  0  0
     26 0    0 55518432 444928 156854432  0  0  0   52 174733 851865 18 13 70  0  0
     27 0    0 55743232 444940 156854464  0  0  0  296 175891 834044 18 13 70  0  0
     26 0    0 55724756 444940 156854624  0  0  0   60 174582 828127 18 13 70  0  0
     24 0    0 55741960 444940 156854624  0  0  0    4 173324 828154 18 12 70  0  0
     24 0    0 55722464 444940 156854768  0  0  0  100 172768 824844 18 12 70  0  0
     25 0    0 55718576 444940 156853888  0  0  0  416 173330 828159 18 12 70  0  0
     23 0    0 55692152 444948 156860352  0  0  0 2136 176929 832113 18 12 70  0  0
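The per-query comparison above can be reproduced from the vmstat samples. This is a minimal sketch, assuming vmstat's default column order where cs, us and sy are columns 12, 13 and 14. The function name `vmstat_per_query` is hypothetical. It averages the samples it reads and prints the sy/us ratio, context switches per query and CPU utilization per 100k QPS.

```shell
# Usage: vmstat_per_query QPS < vmstat_output
vmstat_per_query() {
  awk -v qps="$1" '
    # Data rows start with a number, which skips the two vmstat header lines.
    $1 ~ /^[0-9]+$/ { cs += $12; us += $13; sy += $14; n++ }
    END {
      if (n == 0 || us == 0 || qps <= 0) exit 1
      printf "sy/us=%.2f cs/query=%.2f cpu/100kqps=%.1f\n",
             sy / us, (cs / n) / qps, ((us + sy) / n) / (qps / 100000)
    }'
}

# First two samples of the good run, with the QPS reported for that run.
vmstat_per_query 456762 <<'EOF'
25 0 0 58823196 406932 154263328 0 0 0 36 221215 848850 22 7 71 0 0
24 0 0 58822520 406932 154263328 0 0 0 340 221711 847153 23 7 71 0 0
EOF

# First two samples of the bad run, with the QPS reported for that run.
vmstat_per_query 315960 <<'EOF'
23 0 0 55562296 444928 156854032 0 0 0 4 174564 836501 18 12 70 0 0
23 0 0 55503180 444928 156854224 0 0 0 4 175928 852824 18 13 70 0 0
EOF
```

Over all ten samples of each run, the sy/us ratio is about 0.3 for the good result and about 0.7 for the bad result, and context switches per query are about 1.9 and 2.6.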

Point queries: QPS

This shows the average QPS for the readrandom test (read-only, point queries). Variance is not a problem.
