I spend much time documenting how MySQL performance has changed over the years. After my latest round of benchmarks I looked at flamegraphs from MySQL/InnoDB during the insert benchmark. Unfortunately, I didn't see anything obvious when comparing flamegraphs for MySQL 5.6, 5.7 and 8.0. Mostly, the flamegraphs looked the same -- the percentage of time in various call stacks was similar, and the call stacks were similar.

So the flamegraphs look similar, but MySQL 8.0 gets less work done per second. A possible explanation is that everything gets slower, perhaps from more cache misses, so I used Linux perf to debug this and the results are interesting.

What happened in MySQL 5.7 and 8.0 to put so much more stress on the memory system?

tl;dr

- It looks like someone sprinkled magic go slower dust across most of the MySQL code because the slowdown from MySQL 5.6 to 8.0 is not isolated to a few call stacks.

- MySQL 8.0 uses ~1.5X more instructions/operation than 5.6. Cache activity (references, loads, misses are frequently up 1.5X or more. TLB activity (loads, misses) is frequently up 2X to 5X with the iTLB being a bigger problem than the dTLB.

- innodb_log_writer_threads=ON is worse than I thought. I will soon have a post on that.

Too long to be a tl;dr

- I don't have much experience using Linux perf counters to explain performance changes.

- There are huge increases for data TLB, instruction TLB and L1 cache counters. From the Xeon CPU (socket2 below) the change from MySQL 5.6.21 to 8.0.34 measured as events/query are:

- branches, branch misses: up ~1.8X, ~1.5X

- cache references: up ~1.4X

- instructions: up ~1.8X

- dTLB loads, load-misses, stores, store-misses: up ~2.0X, ~4.7X, ~2.1X, ~3.5X

- iTLB loads, load-misses: up ~6.5X, ~5.0X

- L1 data cache loads, load-misses, stores: up ~2.0X, ~2.0X, ~2.1X

- L1 instruction cache load-misses: up ~2.8X

- LLC loads, load-misses, stores, store-misses: up ~1.3X, ~1.1X, ~1.1X, ~1.1X and it is interesting that the growth here is much less than the other counters

- Context switches, CPU migrations: up ~1.9X, ~7.9X

- Two open questions

- Does the increase in context switches and CPU migrations explains the rise in the cache and TLB counters?

- Is this change caused by the use of innodb_log_writer_threads=ON, which I set for the big server (socket2) but not for the small servers (beelink, ser7).

- For many of the HW counters the biggest jumps occur between the last point release in 5.6 and the first in 5.7 and then again between the last in 5.7 and the first in 8.0. Perhaps this is good news because it means the problems are not spread across every point release.

The posts in this series are:

- 5.6 - 5.6.21, 5.6.31, 5.6.41, 5.6.51. Note that 5.6.51 is the last release for 5.6.

- 5.7 - 5.7.10, 5.7.20, 5.7.30, 5.7.43. Note that 5.7.43 is the next-to-last release for 5.7 and 5.7.10 is the first.

- 8.0 - 8.0.14, 8.0.20, 8.0.28, 8.0.34. Note that 8.0.13 was the first GA for 8.0.

- beelink - the old server from Beelink explained here that has an AMD 4700u CPU with 8 cores, 16G RAM and 1TB of NVMe SSD with XFS and Ubuntu 22.04

- ser7 - the new server from Beelink (SER7 7840HS) that has an AMD 7840HS CPU with 8 cores, 32G RAM and 1TB of NVMe SSD with XFS and Ubuntu 22.04

- socket2 - my new 2-socket server with 2 Intel Xeon Silver CPU @ 2.40GHz, 64G RAM and 1TB of NVMe SSD with XFS and Ubuntu 22.04

The benchmark is run with 12 clients/tables for the socket2 server and 1 client/table for the others. Each client uses a separate table. The benchmark is a sequence of steps and I often call each of these a benchmark step.

- l.i0

- insert 20 million rows per table

- l.x

- create 3 secondary indexes. I usually ignore performance from this step.

- l.i1

- insert and delete another 20 million rows per table with secondary index maintenance. The number of rows/table at the end of the benchmark step matches the number at the start with inserts done to the table head and the deletes done from the tail.

- q100, q500, q1000

- do queries as fast as possible with 100, 500 and 1000 inserts/s/client and the same rate for deletes/s done in the background. Run for 1200 seconds.

Perf

I modified my benchmark helper scripts to run Linux perf and collect stats over 10 second intervals. One loop looks like the following and there was a sleep for 30+ seconds after each iteration of the loop. The data I share here is from the second-to-last sample collected per benchmark step.

perf stat -e cache-references,cache-misses,branches,branch-misses -p $pid -- sleep 10 ; sleep 2

perf stat -e L1-dcache-loads,L1-dcache-load-misses,L1-dcache-stores,L1-icache-loads-misses -p $pid -- sleep 10 ; sleep 2

perf stat -e dTLB-loads,dTLB-load-misses,dTLB-stores,dTLB-store-misses,dTLB-prefetch-misses -p $pid -- sleep 10 ; sleep 2

perf stat -e iTLB-load-misses,iTLB-loads -p $pid -- sleep 10 ; sleep 2

perf stat -e LLC-loads,LLC-load-misses,LLC-stores,LLC-store-misses,LLC-prefetches -p $pid -- sleep 10 ; sleep 2

perf stat -e alignment-faults,context-switches,migrations,major-faults,minor-faults,faults -p $pid -- sleep 10 ; sleep 2

Performance

The charts below show average throughput (QPS, or really operations/s) for the l.i0 benchmark step.

- The benchmark uses 1 client for the small servers (beelink, ser7) and 12 clients for the big server (socket2).

- MySQL gets slower from 5.6 to 8.0 on the small servers (beelink, ser7) but slightly faster on the big server (socket2). Perhaps performance is dominated by CPU overhead on the small servers, but improvements for concurrent workloads offsets the new CPU overhead on the big server.

Results

The results are split into four parts because there are so many charts -- there is one part per benchmark step, and I ignore the l.x and q500 steps to save time. This post focuses on the l.i0 step that loads the database.

The purpose for this post is to document MySQL performance in terms of HW counters collected by Linux perf. The challenge is that throughput (QPS or operations/s) isn't the same across the versions of MySQL that I tested. As explained in the Perf section above I collected perf output over a 10-second interval. But the amount of work done (QPS or operations/s) in that interval was not constant. So rather than just graph the values of the HW counters, I graph:

($counter/query for my version) / ($counter/query for MySQL 5.6.21)

- cache-misses is 1M for MySQL 5.6.21 and 2M for 8.0.34, per 10-second interval

- QPS is 10,000 for MySQL 5.6.21 and 5,000 for 8.0.34

(2M / 5000) / (1M / 10000) = 4

Were performance unchanged from MySQL 5.6.21 to 8.0.34 then I would expect the values of relative counter/query to be 1, but they tend to be a bit larger than 1 as shown here.

One things

- the y-axis frequently does not start at zero to improve readability. But this also makes it harder to compare adjacent graphs

- below when I write up 30% that is the same as up 1.3X. I switch from the former to the latter when the increase is larger than 99%.

- CPI isn't scaled using the formula above because it is a rate, instead the charts show (CPI for my version) / (CPI for MySQL 5.6.21)

The HW counter names used in the charts are the ones from perf output.

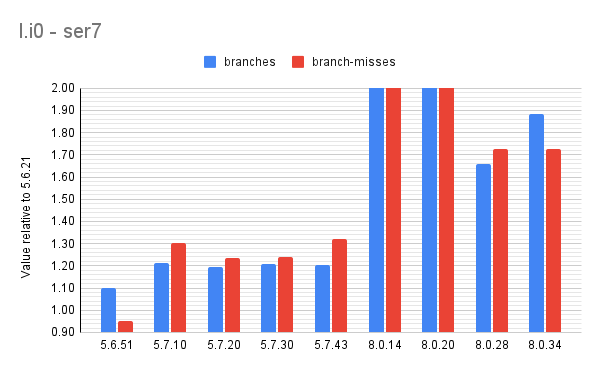

Results: branches and branch misses

Summary:

- the Results section above explains the y-axis

- 8.0.14 and 8.0.20 are frequent outliers that I ignore

- on beelink

- from 5.6.51 to 5.7.10 branches up 13%, branch misses up 21%

- from 5.7.43 to 8.0.34 branches up 50%, branch misses up 47%

- on ser7

- from 5.6.51 to 5.7.10 branches up 10%, branch misses up 37%

- from 5.7.43 to 8.0.34 branches up 55%, branch misses up 31%

- on socket2

- from 5.6.51 to 5.7.10 branches up 7%, branch misses up 2%

- from 5.7.43 to 8.0.34 branches up 49%, branch misses up 33%

Summary:

- the Results section above explains the y-axis

- on beelink

- from 5.6.51 to 5.7.10 references up 45%, misses up 19%

- from 5.7.43 to 8.0.34 references up 48%, misses up 85%

- on ser7

- from 5.6.51 to 5.7.10 references up 60%, misses up 43%

- from 5.7.43 to 8.0.34 references up 58%, misses up 57%

- on socket2

- from 5.6.51 to 5.7.10 references up 22%, misses up 23%

- from 5.7.43 to 8.0.34 references up 18%, misses down 8%

Summary:

- the Results section above explains the y-axis

- on beelink

- from 5.6.51 to 5.7.10 cycles up 17%, instructions up 14%, cpi up 2%

- from 5.7.43 to 8.0.34 cycles up 59%, instructions up 48%, cpi up 8%

- on ser7

- from 5.6.51 to 5.7.10 cycles up 20%, instructions up 14%, cpi up 6%

- from 5.7.43 to 8.0.34 cycles up 49%, instructions up 49%, cpi is flat

- on socket2

- from 5.6.51 to 5.7.10 cycles down 3%, instructions up 11%, cpi down 13%

- from 5.7.43 to 8.0.34 cycles up 1%, instructions up 51%, cpi down 34%

Summary:

- the Results section above explains the y-axis

- on beelink

- from 5.6.51 to 5.7.10 dTLB-loads up 53%, dTLB-load-misses up 2.4X

- from 5.7.43 to 8.0.34 dTLB-loads up 62%, dTLB-load-misses up 35%

- on ser7

- from 5.6.51 to 5.7.10 dTLB-loads up 61%, dTLB-load-misses up 51%

- from 5.7.43 to 8.0.34 dTLB-loads up 53%, dTLB-load-misses up 30%

- on socket2

- loads

- from 5.6.51 to 5.7.10 dTLB-loads up 18%, dTLB-load-misses up 33%

- from 5.7.43 to 8.0.34 dTLB-loads up 53%, dTLB-load-misses up 2.7X

- stores

- from 5.6.51 to 5.7.10 dTLB-stores up 26%, dTLB-store-misses down 1%

- from 5.7.43 to 8.0.34 dTLB-stores up 55%, dTLB-store-misses up 4.5X

Summary:

- the Results section above explains the y-axis

- on beelink

- from 5.6.51 to 5.7.10 loads up 44%, load-misses up 86%

- from 5.7.43 to 8.0.34 loads up 42%, load-misses up 2.7X

- on ser7

- from 5.6.51 to 5.7.10 loads up 69%, load-misses up 3.1X

- from 5.7.43 to 8.0.34 loads up 23%, load-misses up 3.1X

- on socket2

- from 5.6.51 to 5.7.10 loads up 2.7X, load-misses up 54%

- from 5.7.43 to 8.0.34 loads up 59%, load-misses up 2.7X

- the Results section above explains the y-axis

- on beelink

- dcache

- from 5.6.51 to 5.7.10 loads up 23%, load-misses up 50%

- from 5.7.43 to 8.0.34 loads up 45%, load-misses up 39%

- icache

- from 5.6.51 to 5.7.10 loads-misses down 3%

- from 5.7.43 to 8.0.34 loads-misses up 56%

- on ser7

- dcache

- from 5.6.51 to 5.7.10 loads up 24%, load-misses up 51%

- from 5.7.43 to 8.0.34 loads up 43%, load-misses up 38%

- icache

- from 5.6.51 to 5.7.10 loads-misses up 2.1X

- from 5.7.43 to 8.0.34 loads-misses up 70%

- on socket2

- dcache

- from 5.6.51 to 5.7.10 loads up 21%, load-misses up 38%, stores up 28%

- from 5.7.43 to 8.0.34 loads up 54%, load-misses up 34%, stores up 55%

- icache

- from 5.6.51 to 5.7.10 loads-misses up 47%

- from 5.7.43 to 8.0.34 loads-misses up 71%

The LLC counters were only supported in the socket2 CPU.

Summary:

- the Results section above explains the y-axis

- on socket2

- loads

- from 5.6.51 to 5.7.10 loads up 18%, loads-misses up 13%

- from 5.7.43 to 8.0.34 loads up 11%, loads-misses up 1%

- stores

- from 5.6.51 to 5.7.10 loads down 6%, loads-misses down 8%

- from 5.7.43 to 8.0.34 loads up 1%, loads-misses down 6%

- on beelink

- from 5.6.21 to 5.7.10 context switches down 37%

- from 5.7.43 to 8.0.34 context switches up 87%

- on ser7

- from 5.6.21 to 5.7.10 context switches down 35%

- from 5.7.43 to 8.0.34 context switches up 86%

- on socket2

- from 5.6.21 to 5.7.10 context switches down 36%

- from 5.7.43 to 8.0.34 context switches up 2.5X

- on beelink

- from 5.6.21 to 5.7.10 CPU migrations up 13.7X

- from 5.7.43 to 8.0.34 CPU migrations up 3.6X

- on ser7

- from 5.6.21 to 5.7.10 CPU migrations up 7.3X

- from 5.7.43 to 8.0.34 CPU migrations up 3.9X

- on socket2

- from 5.6.21 to 5.7.10 CPU migrations up 2.7X

- from 5.7.43 to 8.0.34 CPU migrations up 2.5X

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

No comments:

Post a Comment