This is part 2 of my series on using HW counters from Linux perf to explain why MySQL gets slower from 5.6 to 8.0. Refer to part 1 for an overview.

What happened in MySQL 5.7 and 8.0 to put so much more stress on the memory system?

tl;dr

- It looks like someone sprinkled magic go-slower dust across most of the MySQL code, because the slowdown from MySQL 5.6 to 8.0 is not isolated to a few call stacks.

- MySQL 8.0 uses ~1.5X as many instructions/operation as 5.6. Cache activity (references, loads, misses) is frequently up 1.5X or more. TLB activity (loads, misses) is frequently up 2X to 5X, with the iTLB being a bigger problem than the dTLB.

- innodb_log_writer_threads=ON is worse than I thought. I will soon have a post on that.

Too long to be a tl;dr

- I don't have much experience using Linux perf counters to explain performance changes.

- There are huge increases for the data TLB, instruction TLB and L1 cache counters. On the Xeon CPU (socket2 below), the changes from MySQL 5.6.21 to 8.0.34, measured as events/query, are:

    - branches: up ~1.4X

    - instructions: up ~1.7X

    - dTLB loads, load-misses, stores, store-misses: up ~1.7X, ~1.5X, ~1.9X, ~2.3X

    - iTLB loads, load-misses: up ~5.0X, ~1.8X

    - L1 data cache loads, load-misses, stores: up ~1.7X, ~1.7X, ~2.0X

    - L1 instruction cache load-misses: up ~3.1X

    - LLC loads, load-misses, stores, store-misses: up ~1.1X, ~1.1X, ~1.1X, ~1.2X. Interestingly, the growth here is much smaller than for the other counters.

- Context switches and CPU migrations are slightly down, while they were up a lot for the l.i0 benchmark step. But the rates in 8.0.34 are still up a lot from 5.7.43: ~2.6X for context switches and ~1.5X for migrations.

- For many of the HW counters the biggest jumps occur between the last point release in 5.6 and the first in 5.7 and then again between the last in 5.7 and the first in 8.0. Perhaps this is good news because it means the problems are not spread across every point release.
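The comparisons above are normalized as events/query, so a version that completes fewer queries in the same time window does not look better or worse just because its throughput differs. A minimal sketch of that normalization, assuming each run reports a total for a counter (for example from `perf stat`) plus the number of queries completed over the same interval. The helper names and the input numbers are made up for illustration and are not measurements from this benchmark:

```python
def events_per_query(event_count: float, queries: float) -> float:
    """Normalize a raw HW counter total by the work done in the same interval."""
    if queries <= 0:
        raise ValueError("queries must be positive")
    return event_count / queries


def relative_change(old_count: float, old_queries: float,
                    new_count: float, new_queries: float) -> float:
    """Ratio of events/query for the new version vs the old one (1.7 means up ~1.7X)."""
    return events_per_query(new_count, new_queries) / events_per_query(old_count, old_queries)


# Hypothetical inputs: the new version completes fewer queries in the same
# window but executes more instructions in total.
ratio = relative_change(old_count=4.0e12, old_queries=1.0e8,
                        new_count=5.1e12, new_queries=0.75e8)
print(f"instructions/query up ~{ratio:.1f}X")
```

Dividing by queries first matters: comparing raw totals here would suggest only a ~1.3X increase, while the per-query cost is up ~1.7X.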

Performance

The charts below show average throughput (QPS, or really operations/s) for the l.i0 benchmark step.

- The benchmark uses 1 client for the small servers (beelink, ser7) and 12 clients for the big server (socket2).

- Of the Insert Benchmark steps run with a CPU-bound workload, this one is the best for modern MySQL relative to MySQL 5.6.

Refer to the Results section in part 1 to understand what is displayed on the charts. The y-axis frequently does not start at zero to improve readability. But this also makes it harder to compare adjacent graphs.

Below, when I write "up 30%", that is the same as "up 1.3X". I switch from the former to the latter when the increase is larger than 99%.
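The convention above can be written as a small formatter. This is a hypothetical helper, not something used to produce this post. It takes the new/old ratio for a counter and returns "up N%" or "down N%", switches to "up N.NX" once the increase is larger than 99%, and returns "flat" when the change rounds to zero:

```python
def describe_change(ratio: float) -> str:
    """Format a new/old ratio using the 'up N%' vs 'up N.NX' convention."""
    pct = (ratio - 1.0) * 100.0
    if round(pct) == 0:
        return "flat"
    if pct > 99.0:
        # Increases larger than 99% are written as a multiplier.
        return f"up {ratio:.1f}X"
    if pct > 0:
        return f"up {pct:.0f}%"
    return f"down {-pct:.0f}%"


for r in (1.30, 1.99, 2.1, 0.86, 1.0):
    print(r, "->", describe_change(r))
```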

Spreadsheets are here for beelink, ser7 and socket2. See the Servers section above to understand the HW for beelink, ser7 and socket2.

Results: branches and branch misses

Summary:

- the Results section above explains the y-axis

- on beelink

    - from 5.6.51 to 5.7.10 branches up 2%, branch-misses down 14%

    - from 5.7.43 to 8.0.34 branches up 30%, branch-misses up 28%

- on ser7

    - from 5.6.51 to 5.7.10 branches down 2%, branch-misses up 4%

    - from 5.7.43 to 8.0.34 branches up 33%, branch-misses up 35%

- on socket2

    - from 5.6.51 to 5.7.10 branches up 10%, branch-misses down 8%

    - from 5.7.43 to 8.0.34 branches up 31%, branch-misses up 44%
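These summaries list reference counts and miss counts separately. When both are normalized the same way, the change in the miss rate (misses per reference) is the ratio of the two changes. A quick sketch with a hypothetical helper, using the 5.7.43 to 8.0.34 branch numbers for beelink and socket2 from the list above. The inputs are rounded percentages, so the results are approximate:

```python
def miss_rate_change(refs_ratio: float, misses_ratio: float) -> float:
    """Change in misses/references, given new/old ratios for each counter."""
    return misses_ratio / refs_ratio


# 5.7.43 -> 8.0.34 on beelink: branches up 30%, branch-misses up 28%
beelink = miss_rate_change(1.30, 1.28)
# 5.7.43 -> 8.0.34 on socket2: branches up 31%, branch-misses up 44%
socket2 = miss_rate_change(1.31, 1.44)
print(f"beelink miss-rate ratio {beelink:.2f}, socket2 miss-rate ratio {socket2:.2f}")
```

By this arithmetic the branch miss rate on beelink is roughly unchanged (about 0.98X) while on socket2 it is up about 10%. The same division works for the other load/miss pairs in this post.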

Results: cache references and misses

Summary:

- the Results section above explains the y-axis

- on beelink

    - from 5.6.51 to 5.7.10 references up 48%, misses up 4%

    - from 5.7.43 to 8.0.34 references up 53%, misses up 57%

- on ser7

    - from 5.6.51 to 5.7.10 references up 42%, misses up 10%

    - from 5.7.43 to 8.0.34 references up 60%, misses up 31%

- on socket2

    - from 5.6.51 to 5.7.10 references up 16%, misses up 4%

    - from 5.7.43 to 8.0.34 references up 42%, misses up 24%

Results: cycles, instructions, CPI

Summary:

- the Results section above explains the y-axis

- on beelink

    - from 5.6.51 to 5.7.10 cycles down 6%, instructions up 2%, cpi down 8%

    - from 5.7.43 to 8.0.34 cycles up 29%, instructions up 31%, cpi down 2%

- on ser7

    - from 5.6.51 to 5.7.10 cycles up 1%, instructions up 4%, cpi down 3%

    - from 5.7.43 to 8.0.34 cycles up 20%, instructions up 25%, cpi down 4%

- on socket2

    - from 5.6.51 to 5.7.10 cycles up 20%, instructions up 9%, cpi up 12%

    - from 5.7.43 to 8.0.34 cycles down 36%, instructions up 43%, cpi down 56%
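CPI is cycles divided by instructions, so its change is implied by the other two: new/old CPI = (new/old cycles) / (new/old instructions). A small check against three rows of the summary above, with a hypothetical helper name. The inputs are the rounded percentages, which is why socket2 comes out at -55% here versus the reported 56% decrease:

```python
def cpi_ratio(cycles_ratio: float, instructions_ratio: float) -> float:
    """CPI = cycles / instructions, so new_cpi / old_cpi = cycles_ratio / instructions_ratio."""
    return cycles_ratio / instructions_ratio


# (cycles, instructions) as new/old ratios, taken from the summary above
rows = {
    "beelink 5.6.51 -> 5.7.10": (0.94, 1.02),
    "ser7 5.7.43 -> 8.0.34": (1.20, 1.25),
    "socket2 5.7.43 -> 8.0.34": (0.64, 1.43),
}
for name, (cyc, ins) in rows.items():
    pct = (cpi_ratio(cyc, ins) - 1.0) * 100.0
    print(f"{name}: cpi {pct:+.0f}%")
```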

Results: dTLB

Summary:

- the Results section above explains the y-axis

- on beelink

    - from 5.6.51 to 5.7.10 dTLB-loads up 61%, dTLB-load-misses up 5%

    - from 5.7.43 to 8.0.34 dTLB-loads up 49%, dTLB-load-misses up 17%

- on ser7

    - from 5.6.51 to 5.7.10 dTLB-loads up 91%, dTLB-load-misses up 6%

    - from 5.7.43 to 8.0.34 dTLB-loads up 51%, dTLB-load-misses up 15%

- on socket2

    - loads

        - from 5.6.51 to 5.7.10 dTLB-loads up 23%, dTLB-load-misses up 18%

        - from 5.7.43 to 8.0.34 dTLB-loads up 83%, dTLB-load-misses up 79%

    - stores

        - from 5.6.51 to 5.7.10 dTLB-stores up 26%, dTLB-store-misses up 1%

        - from 5.7.43 to 8.0.34 dTLB-stores up 2.1X, dTLB-store-misses up 4.3X

Results: iTLB

Summary:

- the Results section above explains the y-axis

- on beelink

    - from 5.6.51 to 5.7.10 iTLB-loads up 2.1X, iTLB-load-misses up 12%

    - from 5.7.43 to 8.0.34 iTLB-loads up 82%, iTLB-load-misses up 2.3X

- on ser7

    - from 5.6.51 to 5.7.10 iTLB-loads up 2.6X, iTLB-load-misses up 41%

    - from 5.7.43 to 8.0.34 iTLB-loads up 29%, iTLB-load-misses up 99%

- on socket2

    - from 5.6.51 to 5.7.10 iTLB-loads up 36%, iTLB-load-misses down 24%

    - from 5.7.43 to 8.0.34 iTLB-loads up 56%, iTLB-load-misses up 2.2X

Results: L1 cache

Summary:

- the Results section above explains the y-axis

- on beelink

    - dcache

        - from 5.6.51 to 5.7.10 loads up 10%, load-misses up 22%

        - from 5.7.43 to 8.0.34 loads up 36%, load-misses up 35%

    - icache

        - from 5.6.51 to 5.7.10 load-misses down 45%

        - from 5.7.43 to 8.0.34 load-misses up 29%

- on ser7

    - dcache

        - from 5.6.51 to 5.7.10 loads up 15%, load-misses up 31%

        - from 5.7.43 to 8.0.34 loads up 34%, load-misses up 34%

    - icache

        - from 5.6.51 to 5.7.10 load-misses up 2.3X

        - from 5.7.43 to 8.0.34 load-misses up 99%

- on socket2

    - dcache

        - from 5.6.51 to 5.7.10 loads down 3%, load-misses down 11%, stores are flat

        - from 5.7.43 to 8.0.34 loads up 40%, load-misses up 21%, stores up 59%

    - icache

        - from 5.6.51 to 5.7.10 load-misses up 13%

        - from 5.7.43 to 8.0.34 load-misses up 74%

Results: LLC

The LLC counters were only supported on the socket2 CPU.

Summary:

- the Results section above explains the y-axis

- on socket2

    - loads

        - from 5.6.51 to 5.7.10 loads up 5%, load-misses down 4%

        - from 5.7.43 to 8.0.34 loads up 50%, load-misses up 48%

    - stores

        - from 5.6.51 to 5.7.10 stores down 7%, store-misses down 13%

        - from 5.7.43 to 8.0.34 stores up 49%, store-misses up 49%

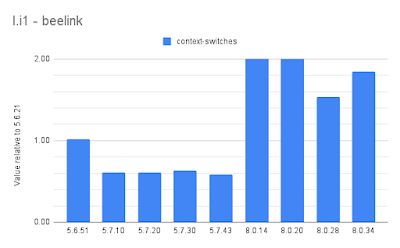

- on beelink

- from 5.6.21 to 5.7.10 context switches down 40%

- from 5.7.43 to 8.0.34 context switches up 3.1X

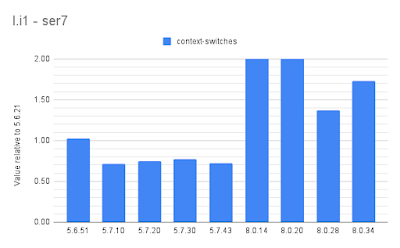

- on ser7

- from 5.6.21 to 5.7.10 context switches down 31%

- from 5.7.43 to 8.0.34 context switches up 2.4X

- on socket2

- from 5.6.21 to 5.7.10 context switches down 44%

- from 5.7.43 to 8.0.34 context switches up 2.6X

Results: CPU migrations

Summary:

- on beelink

    - from 5.6.21 to 5.7.10 CPU migrations up 41%

    - from 5.7.43 to 8.0.34 CPU migrations up 4.6X

- on ser7

    - from 5.6.21 to 5.7.10 CPU migrations up 4.8X

    - from 5.7.43 to 8.0.34 CPU migrations up 2.2X

- on socket2

    - from 5.6.21 to 5.7.10 CPU migrations down 41%

    - from 5.7.43 to 8.0.34 CPU migrations up 6%
